1. Set up a development system

This guide describes the requirements and the steps necessary in order to get started with the development of the OpenNMS project.

1.1. Operating System / Environment

Building OpenNMS requires a *nix system. It does not need to run on physical hardware; a virtual machine is sufficient. We recommend one of the following:

- Linux Mint with Cinnamon Desktop environment
- Mac OS X

| This documentation assumes that you chose a Debian-based desktop environment. |

1.2. Installation

This section describes the full setup of your environment in order to meet the prerequisites. Follow these instructions; they may vary depending on your operating system.

# add OpenNMS as repository to install icmp and such

echo "deb http://debian.opennms.org stable main" > /etc/apt/sources.list.d/opennms.list

echo "deb-src http://debian.opennms.org stable main" >> /etc/apt/sources.list.d/opennms.list

# Add pgp key

wget -O - https://debian.opennms.org/OPENNMS-GPG-KEY | apt-key add -

# overall update

apt-get update

# install stuff

apt-get install -y software-properties-common

apt-get install -y git-core

apt-get install -y nsis

# install Oracle Java 8 JDK

# this setup is based on: http://www.webupd8.org/2014/03/how-to-install-oracle-java-8-in-debian.html

add-apt-repository -y ppa:webupd8team/java

apt-get update

apt-get install -y oracle-java8-installer

apt-get install -y oracle-java8-set-default

# install and configure PostgreSQL

apt-get install -y postgresql

echo "local all postgres peer" > /etc/postgresql/9.3/main/pg_hba.conf

echo "local all all peer" >> /etc/postgresql/9.3/main/pg_hba.conf

echo "host all all 127.0.0.1/32 trust" >> /etc/postgresql/9.3/main/pg_hba.conf

echo "host all all ::1/128 trust" >> /etc/postgresql/9.3/main/pg_hba.conf

# restart postgres to use new configs

/etc/init.d/postgresql restart

# install OpenNMS basic dependencies

apt-get install -y maven

apt-get install -y jicmp jicmp6

apt-get install -y jrrd

# clone opennms

mkdir -p ~/dev/opennms

git clone https://github.com/OpenNMS/opennms.git ~/dev/opennms

After this you should be able to build OpenNMS:

cd ~/dev/opennms

./clean.pl

./compile.pl -DskipTests

./assemble.pl -p dir

For more information on how to build OpenNMS from source, see the wiki page Install from Source.

After OpenNMS has been built successfully, follow the wiki page Running OpenNMS.

1.3. Tooling

We recommend the following toolset:

- DB-Tool: DBeaver or Postgres Admin - pgAdmin
- Graphing: yEd
- Other: atom.io

1.4. Useful links

1.4.1. General

- https://www.github.com/OpenNMS/opennms: The source code hosted on GitHub
- http://wiki.opennms.org: Our wiki. The start page is of particular interest; it points you in the right direction.
- http://issues.opennms.org: Our issue/bug tracker.
- https://github.com/opennms-forge/vagrant-opennms-dev: A Vagrant box to set up a virtual box to build OpenNMS
- https://github.com/opennms-forge/vagrant-opennms: A Vagrant box to set up a virtual box to run OpenNMS

2. Minion development

2.1. Introduction

This guide is intended to help developers get started with writing Minion-related features. It is not intended to be an exhaustive overview of the Minion architecture or feature set.

2.2. Container

This section details the customizations we make to the standard Karaf distribution for the Minion container.

2.2.1. Clean Start

We clear the cache on every start by setting karaf.clean.cache = true in order to ensure that only the features listed in the featuresBoot (or installed by the karaf-extender) are installed.

2.2.2. Karaf Extender

The Karaf Extender was developed to make it easier to manage and extend the container using existing packaging tools. It allows packages to register Maven Repositories, Karaf Feature Repositories and Karaf Features to Boot by overlaying additional files, avoiding modifying any of the existing files.

Here’s an overview, used for reference, of the relevant directories that are (currently) present on a default install of the opennms-minion package:

├── etc
│   └── featuresBoot.d
│       └── custom.boot
├── repositories
│   ├── .local
│   ├── core
│   │   ├── features.uris
│   │   └── features.boot
│   └── default
│       ├── features.uris
│       └── features.boot
└── system

When the karaf-extender feature is installed it will:

- Find all of the folders listed under $karaf.home/repositories that do not start with a '.' and sort these by name.
- Gather the list of Karaf Feature Repository URIs from the features.uris files in the repositories.
- Gather the list of Karaf Feature Names from the features.boot files in the repositories.
- Gather the list of Karaf Feature Names from the files under $karaf.etc/featuresBoot.d that do not start with a '.' and sort these by name.
- Register the Maven Repositories by updating the org.ops4j.pax.url.mvn.repositories key for the PID org.ops4j.pax.url.mvn.
- Wait up to 30 seconds until all of the Karaf Feature URIs are resolvable (the Maven Repositories may take a few moments to update after updating the configuration).
- Install the Karaf Feature Repository URIs.
- Install the Karaf Features.

Features listed in the features.boot files of the Maven Repositories will take precedence over those listed in featuresBoot.d.

| Any existing repository registered in org.ops4j.pax.url.mvn.repositories will be overwritten. |
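The discovery and gathering steps of the Karaf Extender can be sketched in plain Java. This is a minimal illustration, not the karaf-extender source: the class and method names are hypothetical, $karaf.etc is assumed to be the etc folder below $karaf.home, and each features.boot or featuresBoot.d file is assumed to hold one feature name per line. Features from the repositories are simply listed first here.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/** Illustrative sketch only; this is not the karaf-extender source code. */
final class ExtenderDiscoverySketch {

    /** Lists the entries of a directory whose names do not start with '.', sorted by name. */
    static List<Path> visibleEntriesSortedByName(Path directory) throws IOException {
        try (Stream<Path> entries = Files.list(directory)) {
            return entries
                    .filter(path -> !path.getFileName().toString().startsWith("."))
                    .sorted(Comparator.comparing((Path path) -> path.getFileName().toString()))
                    .collect(Collectors.toList());
        }
    }

    /** Reads the non-blank lines of the given files, skipping files that do not exist. */
    static List<String> readEntries(List<Path> files) throws IOException {
        List<String> result = new ArrayList<>();
        for (Path file : files) {
            if (!Files.isRegularFile(file)) {
                continue;
            }
            for (String line : Files.readAllLines(file)) {
                if (!line.trim().isEmpty()) {
                    result.add(line.trim());
                }
            }
        }
        return result;
    }

    /** Gathers feature names: repository features.boot files first, then featuresBoot.d files. */
    static List<String> gatherBootFeatures(Path karafHome) throws IOException {
        List<Path> bootFiles = new ArrayList<>();
        for (Path repository : visibleEntriesSortedByName(karafHome.resolve("repositories"))) {
            bootFiles.add(repository.resolve("features.boot"));
        }
        bootFiles.addAll(visibleEntriesSortedByName(karafHome.resolve("etc").resolve("featuresBoot.d")));
        return readEntries(bootFiles);
    }

    /** Builds a throwaway directory layout like the one above and gathers its features. */
    static List<String> demo() {
        try {
            Path home = Files.createTempDirectory("karaf-home");
            Path repositories = home.resolve("repositories");
            Files.createDirectories(repositories.resolve(".local"));
            Files.write(Files.createDirectories(repositories.resolve("default")).resolve("features.boot"),
                    Arrays.asList("feature-from-default"));
            Files.write(Files.createDirectories(repositories.resolve("core")).resolve("features.boot"),
                    Arrays.asList("feature-from-core"));
            Files.write(Files.createDirectories(home.resolve("etc").resolve("featuresBoot.d")).resolve("custom.boot"),
                    Arrays.asList("feature-from-custom"));
            return gatherBootFeatures(home);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

The hidden .local folder is skipped, and core is visited before default because the folders are sorted by name.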

2.3. Packaging

This section describes packages for Minion features and helps developers add new features to these packages.

We currently provide two different feature packages for Minion:

- opennms-minion-features-core: Core utilities and services required for connectivity with the OpenNMS controller
- opennms-minion-features-default: Minion-specific service extensions

Every package bundles all of the Karaf Feature Files and Maven Dependencies into a Maven Repository with additional meta-data used by the Karaf Extender.

2.3.1. Adding a new feature to the default feature package

- Add the feature definition to container/features/src/main/resources/features-minion.xml.
- Add the feature name in the features list configuration for the features-maven-plugin in features/minion/repository/pom.xml.
- Optionally add the feature name to features/minion/repository/src/main/resources/features.boot if the feature should be automatically installed when the container is started.

2.4. Guidelines

This section describes a series of guidelines and best practices for developing Minion modules:

2.4.1. Security

- Don’t store any credentials on disk; use the SecureCredentialVault instead.

2.5. Testing

This section describes how developers can test features on the Minion container.

2.5.1. Local Testing

You can compile, assemble, and spawn an interactive shell on the Minion container using:

cd features/minion && ./runInPlace.sh

2.5.2. System Tests

The runtime environment of the Minion container and features differs greatly from those provided by the unit and integration tests. For this reason, it is important to perform automated end-to-end testing of the features.

The system tests provide a framework which allows developers to instantiate a complete Docker-based Minion system using a single JUnit rule.

For further details, see the minion-system-tests project on GitHub.

3. Topology

3.1. Info Panel Items

| This section is under development. The provided examples and code snippets may not fully work. However, they are conceptually correct and should point in the right direction. |

Each element in the Info Panel is defined by an InfoPanelItem object.

All available InfoPanelItem objects are sorted by their order.

This allows the items to be arranged in a custom order.

After the elements are ordered, they are placed below the SearchBox and the Vertices in Focus list.
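The ordering can be pictured with a small sketch. It assumes that items with a lower order value are shown first, which matches the later remark that an order of -700 pins an item to the top. The Item class is a hypothetical stand-in for InfoPanelItem, and the titles and order values are borrowed from the template examples in this chapter.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

/** Illustrative sketch only; Item is a stand-in, not the real InfoPanelItem. */
final class InfoPanelOrderSketch {

    /** Hypothetical stand-in for an InfoPanelItem: only a title and an order. */
    static final class Item {
        final String title;
        final int order;

        Item(String title, int order) {
            this.title = title;
            this.order = order;
        }
    }

    /** Returns the titles sorted by ascending order value (assumption: lower comes first). */
    static List<String> titlesInDisplayOrder(List<Item> items) {
        List<Item> sorted = new ArrayList<>(items);
        sorted.sort(Comparator.comparingInt((Item item) -> item.order));
        List<String> titles = new ArrayList<>();
        for (Item item : sorted) {
            titles.add(item.title);
        }
        return titles;
    }

    /** Three items using the order values from the template examples. */
    static List<String> demo() {
        return titlesInDisplayOrder(Arrays.asList(
                new Item("System Memory", 110),
                new Item("System Uptime", 100),
                new Item("Static information", -700)));
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```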

3.1.1. Programmatic

It is possible to add items to the Info Panel in the Topology UI by implementing the InfoPanelItemProvider interface and exposing the implementation via OSGi.

public class ExampleInfoPanelItemProvider implements InfoPanelItemProvider {
    @Override
    public Collection<? extends InfoPanelItem> getContributions(GraphContainer container) {
        return Collections.singleton(
                new DefaultInfoPanelItem() (1)
                    .withTitle("Static information") (2)
                    .withOrder(0) (3)
                    .withComponent(
                        new com.vaadin.ui.Label("I am a static component") (4)
                    )
        );
    }
}

| 1 | The default implementation of InfoPanelItem. You may use InfoPanelItem instead if the default implementation is not sufficient. |

| 2 | The title of the InfoPanelItem. It is shown above the component. |

| 3 | The order. |

| 4 | A Vaadin component which actually describes the custom component. |

In order to show information based on a selected vertex or edge, one must extend the class EdgeInfoPanelItemProvider or VertexInfoPanelItemProvider.

The following example shows a custom EdgeInfoPanelItemProvider.

public class ExampleEdgeInfoPanelItemProvider extends EdgeInfoPanelItemProvider {
    @Override
    protected boolean contributeTo(EdgeRef ref, GraphContainer graphContainer) { (1)
        return "custom-namespace".equals(ref.getNamespace()); // only show if of certain namespace
    }

    @Override
    protected InfoPanelItem createInfoPanelItem(EdgeRef ref, GraphContainer graphContainer) { (2)
        return new DefaultInfoPanelItem()
                .withTitle(ref.getLabel() + " Info")
                .withOrder(0)
                .withComponent(
                        new com.vaadin.ui.Label("Id: " + ref.getId() + ", Namespace: " + ref.getNamespace())
                );
    }
}

| 1 | Is invoked if one and only one edge is selected. It determines if the current edge should provide the InfoPanelItem created by createInfoPanelItem. |

| 2 | Is invoked if one and only one edge is selected. It creates the InfoPanelItem to show for the selected edge. |

Implementing the provided interfaces or classes is not enough for the item to show up.

It must also be exposed via a blueprint.xml to the OSGi service registry.

The following blueprint.xml snippet describes how to expose any custom InfoPanelItemProvider implementation to the OSGi service registry and have the Topology UI pick it up.

<service interface="org.opennms.features.topology.api.info.InfoPanelItemProvider"> (1)
    <bean class="ExampleInfoPanelItemProvider" /> (2)
</service>

| 1 | The service definition must always point to InfoPanelItemProvider. |

| 2 | The bean implementing the defined interface. |

3.1.2. Scriptable

A more scriptable approach is to drop JinJava templates (with the file extension .html) into $OPENNMS_HOME/etc/infopanel.

For more information on JinJava refer to https://github.com/HubSpot/jinjava.

The following example describes a very simple JinJava template which is always visible.

{% set visible = true %} (1)

{% set title = "Static information" %} (2)

{% set order = -700 %} (3)

This information is always visible (4)

| 1 | Makes this always visible |

| 2 | Defines the title |

| 3 | By default, each info panel item is placed at the end. Setting the order to -700 makes it very likely that this item is pinned to the top of the info panel. |

A template showing custom information may look as follows:

{% set visible = vertex != null && vertex.namespace == "custom" && vertex.customProperty is defined %} (1)

{% set title = "Custom Information" %}

<table width="100%" border="0">

<tr>

<td colspan="3">This information is only visible if a vertex with namespace "custom" is selected</td>

</tr>

<tr>

<td align="right" width="80">Custom Property</td>

<td width="14"></td>

<td align="left">{{ vertex.customProperty }}</td>

</tr>

</table>

| 1 | This template is only shown if a vertex is selected and the selected namespace is "custom". |

It is also possible to show performance data.

One can include resource graphs into the info panel by using the following HTML element:

<div class="graph-container" data-resource-id="RESOURCE_ID" data-graph-name="GRAPH_NAME"></div>

Optional attributes data-graph-start and data-graph-end can be used to specify the displayed time range in seconds since epoch.
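Because the time range is given in seconds since epoch, the attribute values can be derived from java.time.Instant. The following is a minimal sketch with hypothetical class and method names. The node id 1 is made up, the graph name is borrowed from the report parameter used in the uptime template of this section, and the values are not HTML-escaped.

```java
import java.time.Duration;
import java.time.Instant;

/** Illustrative sketch only; renders the graph-container element as a plain string. */
final class GraphContainerSketch {

    /** Builds the div with start and end given as seconds since epoch. */
    static String graphContainer(String resourceId, String graphName, Instant start, Instant end) {
        return String.format(
                "<div class=\"graph-container\" data-resource-id=\"%s\" data-graph-name=\"%s\""
                        + " data-graph-start=\"%d\" data-graph-end=\"%d\"></div>",
                resourceId, graphName, start.getEpochSecond(), end.getEpochSecond());
    }

    public static void main(String[] args) {
        // Show the last hour for a hypothetical node with id 1.
        Instant end = Instant.now();
        Instant start = end.minus(Duration.ofHours(1));
        System.out.println(graphContainer("node[1].nodeSnmp[]", "netsnmp.hrSystemUptime", start, end));
    }
}
```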

{# Example template for a simple memory statistic provided by the netsnmp agent #}

{% set visible = node != null && node.sysObjectId == ".1.3.6.1.4.1.8072.3.2.10" %}

{% set order = 110 %}

{# Setting the title #}

{% set title = "System Memory" %}

{# Define resource Id to be used #}

{% set resourceId = "node[" + node.id + "].nodeSnmp[]" %}

{# Define attribute Id to be used #}

{% set attributeId = "hrSystemUptime" %}

{% set total = measurements.getLastValue(resourceId, "memTotalReal")/1000/1024 %}

{% set avail = measurements.getLastValue(resourceId, "memAvailReal")/1000/1024 %}

<table border="0" width="100%">

<tr>

<td width="80" align="right" valign="top">Total</td>

<td width="14"></td>

<td align="left" valign="top" colspan="2">

{{ total|round(2) }} GB(s)

</td>

</tr>

<tr>

<td width="80" align="right" valign="top">Used</td>

<td width="14"></td>

<td align="left" valign="top" colspan="2">

{{ (total-avail)|round(2) }} GB(s)

</td>

</tr>

<tr>

<td width="80" align="right" valign="top">Available</td>

<td width="14"></td>

<td align="left" valign="top" colspan="2">

{{ avail|round(2) }} GB(s)

</td>

</tr>

<tr>

<td width="80" align="right" valign="top">Usage</td>

<td width="14"></td>

<td align="left" valign="top">

<meter style="width:100%" min="0" max="{{ total }}" low="{{ 0.5*total }}" high="{{ 0.8*total }}" value="{{ total-avail }}" optimum="0"/>

</td>

<td width="1">

{{ ((total-avail)/total*100)|round(2) }}%

</td>

</tr>

</table>

{# Example template for the system uptime provided by the netsnmp agent #}

{% set visible = node != null && node.sysObjectId == ".1.3.6.1.4.1.8072.3.2.10" %}

{% set order = 100 %}

{# Setting the title #}

{% set title = "System Uptime" %}

{# Define resource Id to be used #}

{% set resourceId = "node[" + node.id + "].nodeSnmp[]" %}

{# Define attribute Id to be used #}

{% set attributeId = "hrSystemUptime" %}

<table border="0" width="100%">

<tr>

<td width="80" align="right" valign="top">getLastValue()</td>

<td width="14"></td>

<td align="left" valign="top">

{# Querying the last value via the getLastValue() method: #}

{% set last = measurements.getLastValue(resourceId, attributeId)/100.0/60.0/60.0/24.0 %}

{{ last|round(2) }} day(s)

</td>

</tr>

<tr>

<td width="80" align="right" valign="top">query()</td>

<td width="14"></td>

<td align="left" valign="top">

{# Querying the last value via the query() method. A custom function 'currentTimeMillis()' in

the namespace 'System' is used to get the timestamps for the query: #}

{% set end = System:currentTimeMillis() %}

{% set start = end - (15 * 60 * 1000) %}

{% set values = measurements.query(resourceId, attributeId, start, end, 300000, "AVERAGE") %}

{# Iterating over the values in reverse order and grab the first value which is not NaN #}

{% set last = "NaN" %}

{% for value in values|reverse %}

{%- if value != "NaN" && last == "NaN" %}

{{ (value/100.0/60.0/60.0/24.0)|round(2) }} day(s)

{% set last = value %}

{% endif %}

{%- endfor %}

</td>

</tr>

<tr>

<td width="80" align="right" valign="top">Graph</td>

<td width="14"></td>

<td align="left" valign="top">

{# We use the start and end variable here to construct the graph's Url: #}

<img src="/opennms/graph/graph.png?resourceId=node[{{ node.id }}].nodeSnmp[]&report=netsnmp.hrSystemUptime&start={{ start }}&end={{ end }}&width=170&height=30"/>

</td>

</tr>

</table>

3.2. GraphML

In OpenNMS Horizon the GraphMLTopologyProvider uses GraphML formatted files to visualize graphs.

GraphML is a comprehensive and easy-to-use file format for graphs. It consists of a language core to describe the structural properties of a graph and a flexible extension mechanism to add application-specific data. […] Unlike many other file formats for graphs, GraphML does not use a custom syntax. Instead, it is based on XML and hence ideally suited as a common denominator for all kinds of services generating, archiving, or processing graphs.

OpenNMS Horizon does not support the full feature set of GraphML. The following features are not supported: Nested graphs, Hyperedges, Ports and Extensions. For more information about GraphML refer to the Official Documentation.

A basic graph definition using GraphML usually consists of the following GraphML elements:

- Graph element to describe the graph
- Key elements to declare the custom properties that each element in the GraphML document can define as data elements
- Node and Edge elements
- Data elements to define custom properties, which OpenNMS Horizon will then interpret.

A very minimalistic example is given below:

<?xml version="1.0" encoding="UTF-8"?>

<graphml xmlns="http://graphml.graphdrawing.org/xmlns"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://graphml.graphdrawing.org/xmlns

http://graphml.graphdrawing.org/xmlns/1.0/graphml.xsd">

<!-- key section -->

<key id="label" for="all" attr.name="label" attr.type="string"></key>

<key id="namespace" for="graph" attr.name="namespace" attr.type="string"></key>

<!-- shows up in the menu -->

<data key="label">Minimalistic GraphML Topology Provider</data> (1)

<graph id="minimalistic"> (2)

<data key="namespace">minimalistic</data> (3)

<node id="node1"/> (4)

<node id="node2"/>

<node id="node3"/>

<node id="node4"/>

</graph>

</graphml>

| 1 | The optional label of the menu entry. |

| 2 | The graph definition. |

| 3 | Each graph must have a namespace, otherwise OpenNMS Horizon refuses to load the graph. |

| 4 | Node definitions. |
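Since a graph without a namespace is refused, it can help to check a file before sending it. The following is a minimal sketch using the JDK's DOM parser. It is not the check OpenNMS Horizon performs, the class and method names are hypothetical, and it assumes the namespace is supplied through a data element whose key is "namespace", as in the example above.

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.Node;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

/** Illustrative pre-flight check; not the validation done by OpenNMS Horizon. */
final class GraphmlNamespaceCheck {

    static final String GRAPHML_NS = "http://graphml.graphdrawing.org/xmlns";

    /** Returns the ids of all graph elements that lack a non-empty namespace data element. */
    static List<String> graphsWithoutNamespace(String graphmlXml) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setNamespaceAware(true);
            Document document = factory.newDocumentBuilder()
                    .parse(new InputSource(new StringReader(graphmlXml)));
            List<String> missing = new ArrayList<>();
            NodeList graphs = document.getElementsByTagNameNS(GRAPHML_NS, "graph");
            for (int i = 0; i < graphs.getLength(); i++) {
                Element graph = (Element) graphs.item(i);
                if (!hasNamespaceData(graph)) {
                    missing.add(graph.getAttribute("id"));
                }
            }
            return missing;
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse the GraphML document", e);
        }
    }

    /** Looks only at direct children, so data elements of nodes and edges are ignored. */
    private static boolean hasNamespaceData(Element graph) {
        for (Node child = graph.getFirstChild(); child != null; child = child.getNextSibling()) {
            if (child instanceof Element
                    && "data".equals(child.getLocalName())
                    && "namespace".equals(((Element) child).getAttribute("key"))
                    && !child.getTextContent().trim().isEmpty()) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        String xml = "<graphml xmlns=\"" + GRAPHML_NS + "\">"
                + "<graph id=\"with-namespace\"><data key=\"namespace\">minimalistic</data></graph>"
                + "<graph id=\"without-namespace\"><node id=\"node1\"/></graph>"
                + "</graphml>";
        System.out.println(graphsWithoutNamespace(xml));
    }
}
```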

3.2.1. Create/Update/Delete GraphML Topology

In order to create a GraphML Topology, a valid GraphML XML file must exist. This file is then sent to the OpenNMS Horizon REST API to create the Topology:

curl -X POST -H "Content-Type: application/xml" -u admin:admin -d@graph.xml 'http://localhost:8980/opennms/rest/graphml/topology-name'

The topology-name is a unique identifier for the Topology.

If a label property is defined for the graphml element, it is displayed in the Topology UI; otherwise the topology-name defined here is used as a fallback.

To delete an existing Topology, an HTTP DELETE request must be sent:

curl -X DELETE -u admin:admin 'http://localhost:8980/opennms/rest/graphml/topology-name'

There is no PUT method available. In order to update an existing GraphML Topology one must first delete and afterwards re-create it.

| Even if the HTTP request was successful, this does not mean that the Topology was actually loaded properly. A successful HTTP request only states that the graph was received, persisted, and is in a valid GraphML format. However, the underlying GraphMLTopologyProvider may perform additional checks or encounter problems while parsing the file. If the Topology does not show up, check the karaf.log for clues about what went wrong. In addition, it may take a while before the Topology is actually selectable from the Topology UI. |
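Without a PUT method, an update amounts to a DELETE followed by a POST. The following is a minimal sketch of that sequence, assuming Java 11 or later for java.net.http. The URL and the admin:admin credentials are the ones used in the curl examples; the class and method names are hypothetical.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

/** Illustrative sketch only; mirrors the curl calls with the JDK HTTP client. */
final class GraphmlRestSketch {

    static final String BASE_URL = "http://localhost:8980/opennms/rest/graphml/";

    /** Builds the value of an HTTP Basic Authorization header. */
    static String basicAuth(String user, String password) {
        byte[] credentials = (user + ":" + password).getBytes(StandardCharsets.UTF_8);
        return "Basic " + Base64.getEncoder().encodeToString(credentials);
    }

    static HttpRequest deleteRequest(String topologyName) {
        return HttpRequest.newBuilder(URI.create(BASE_URL + topologyName))
                .header("Authorization", basicAuth("admin", "admin"))
                .DELETE()
                .build();
    }

    static HttpRequest createRequest(String topologyName, String graphmlXml) {
        return HttpRequest.newBuilder(URI.create(BASE_URL + topologyName))
                .header("Authorization", basicAuth("admin", "admin"))
                .header("Content-Type", "application/xml")
                .POST(HttpRequest.BodyPublishers.ofString(graphmlXml))
                .build();
    }

    /** Deletes the topology, ignoring the outcome, then re-creates it; returns the POST status code. */
    static int replace(HttpClient client, String topologyName, String graphmlXml)
            throws IOException, InterruptedException {
        client.send(deleteRequest(topologyName), HttpResponse.BodyHandlers.discarding());
        return client.send(createRequest(topologyName, graphmlXml),
                HttpResponse.BodyHandlers.discarding()).statusCode();
    }

    public static void main(String[] args) {
        // Only prints the requests, so that the sketch runs without an OpenNMS instance.
        HttpRequest delete = deleteRequest("topology-name");
        HttpRequest create = createRequest("topology-name", "<graphml/>");
        System.out.println(delete.method() + " " + delete.uri());
        System.out.println(create.method() + " " + create.uri());
    }
}
```

With a running instance, replace(HttpClient.newHttpClient(), "topology-name", xml) would perform both calls. As noted above, a successful status code does not guarantee that the Topology was loaded.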

3.2.2. Supported Attributes

OpenNMS Horizon supports and interprets a set of GraphML attributes while reading the GraphML file. The following table explains the supported attributes and for which GraphML elements they may be used.

The type of a GraphML attribute can be either boolean, int, long, float, double, or string. These types are defined like the corresponding types in the Java™ programming language.

| Property | Required | For element | Type | Default | Description |
|---|---|---|---|---|---|
| | yes | Graph | | - | The namespace must be unique across all existing Topologies. |
| | no | Graph | | - | A description, which is shown in the Info Panel. |
| | no | Graph | | | Defines a preferred layout. |
| | no | Graph | | | Defines a focus strategy. See Focus Strategies for more information. |
| | no | Graph | | - | Refers to nodes ids in the graph. This is required if |
| | no | Graph | | | Defines the default SZL. |
| | no | Graph | | - | Defines which Vertex Status Provider should be used, e.g. |
| | no | Node | | | Defines the icon. See Icons for more information. |
| | no | Graph, Node | | - | Defines a custom label. If not defined, the |
| | no | Node | | - | Allows referencing the Vertex to an OpenNMS node. |
| | no | Node | | - | Allows referencing the Vertex to an OpenNMS node identified by foreign source and foreign id. Can only be used in combination with |
| | no | Node | | - | Allows referencing the Vertex to an OpenNMS node identified by foreign source and foreign id. Can only be used in combination with |
| | no | Node, Edge | | | Defines a custom tooltip. If not defined, the |
| | no | Node | | | Sets the level of the Vertex which is used by certain layout algorithms i.e. |
| | no | Graph, Node | | | Controls the spacing between the paths drawn for the edges when there are multiple edges connecting two vertices. |
| | no | GraphML | | | Defines the breadcrumb strategy to use. See Breadcrumbs for more information. |

3.2.3. Focus Strategies

A Focus Strategy defines which Vertices should be added to focus when selecting the Topology.

The following strategies are available:

- EMPTY: No Vertex is added to focus.
- ALL: All Vertices are added to focus.
- FIRST: The first Vertex is added to focus.
- SPECIFIC: Only Vertices whose id matches the graph’s focus-ids property are added to focus.
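The four strategies can be summarized in a few lines of Java. This is a conceptual sketch, not the OpenNMS implementation: the enum and method are hypothetical, and vertices are represented only by their ids.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Illustrative sketch only; not the OpenNMS focus strategy implementation. */
final class FocusStrategySketch {

    enum FocusStrategy { EMPTY, ALL, FIRST, SPECIFIC }

    /** Returns the vertex ids that would be added to focus for the given strategy. */
    static List<String> verticesInFocus(FocusStrategy strategy, List<String> vertexIds, Set<String> focusIds) {
        switch (strategy) {
            case ALL:
                return new ArrayList<>(vertexIds);
            case FIRST:
                return vertexIds.isEmpty()
                        ? Collections.<String>emptyList()
                        : Collections.singletonList(vertexIds.get(0));
            case SPECIFIC:
                // Keep only the vertices listed in the graph's focus-ids property.
                List<String> matching = new ArrayList<>();
                for (String id : vertexIds) {
                    if (focusIds.contains(id)) {
                        matching.add(id);
                    }
                }
                return matching;
            case EMPTY:
            default:
                return Collections.emptyList();
        }
    }

    public static void main(String[] args) {
        List<String> vertices = Arrays.asList("north.1", "north.2", "north.3", "north.4");
        Set<String> focusIds = new HashSet<>(Collections.singletonList("north.2"));
        for (FocusStrategy strategy : FocusStrategy.values()) {
            System.out.println(strategy + " -> " + verticesInFocus(strategy, vertices, focusIds));
        }
    }
}
```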

3.2.4. Icons

With the GraphMLTopologyProvider it is not possible to change the icon from the Topology UI.

Instead, if a custom icon should be used, each node must contain an iconKey property referencing an SVG element.

3.2.5. Vertex Status Provider

The Vertex Status Provider calculates the status of the Vertex.

There are multiple implementations available which can be configured for each graph: default, script and propagate.

If none is specified, there is no status provided at all.

Default Vertex Status Provider

The default status provider calculates the status based on the worst unacknowledged alarm associated with the Vertex’s node.

In order to have a status calculated, an (OpenNMS Horizon) node must be associated with the Vertex.

This can be achieved by setting the GraphML attribute nodeID on the GraphML node accordingly.

Script Vertex Status Provider

The script status provider uses scripts similar to the Edge Status Provider.

Just place Groovy scripts (with file extension .groovy) in the directory $OPENNMS_HOME/etc/graphml-vertex-status.

All of the scripts will be evaluated and the most severe status will be used for the vertex in the topology’s visualization.

If the script shouldn’t contribute any status to a vertex just return null.

Propagate Vertex Status Provider

The propagate status provider follows all links from a node to its connected nodes.

It uses the status of these nodes to calculate the status by determining the worst one.
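Both the script and the propagate provider boil down to picking the worst of several statuses. A minimal sketch under stated assumptions: Severity is a hypothetical five-level scale and not the real OnmsSeverity, null stands for "no contribution", and only directly connected vertices are considered for propagation.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collection;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Illustrative sketch only; not the OpenNMS status provider implementation. */
final class VertexStatusSketch {

    /** Hypothetical severity scale, ordered from least to most severe. */
    enum Severity { NORMAL, WARNING, MINOR, MAJOR, CRITICAL }

    /** Returns the most severe non-null contribution, or null if nothing contributed. */
    static Severity worst(Collection<Severity> contributions) {
        Severity worst = null;
        for (Severity severity : contributions) {
            if (severity != null && (worst == null || severity.compareTo(worst) > 0)) {
                worst = severity;
            }
        }
        return worst;
    }

    /** Propagate-style status: the worst status among the directly connected vertices. */
    static Severity propagated(String vertex, Map<String, List<String>> links, Map<String, Severity> statusByVertex) {
        List<Severity> neighbourStatuses = new ArrayList<>();
        for (String neighbour : links.getOrDefault(vertex, Collections.emptyList())) {
            neighbourStatuses.add(statusByVertex.get(neighbour));
        }
        return worst(neighbourStatuses);
    }

    public static void main(String[] args) {
        Map<String, List<String>> links = new HashMap<>();
        links.put("north", Arrays.asList("north.1", "north.2", "north.3"));
        Map<String, Severity> status = new HashMap<>();
        status.put("north.1", Severity.NORMAL);
        status.put("north.2", Severity.MAJOR);
        // north.3 has no status, so it does not contribute.
        System.out.println(propagated("north", links, status));
    }
}
```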

3.2.6. Edge Status Provider

It is also possible to compute a status for each edge in a given graph.

Just place Groovy scripts (with file extension .groovy) in the directory $OPENNMS_HOME/etc/graphml-edge-status.

All of the scripts will be evaluated and the most severe status will be used for the edge in the topology’s visualization.

The following simple Groovy script example will apply a different style and severity if the edge’s associated source node is down.

import org.opennms.netmgt.model.OnmsSeverity;

import org.opennms.features.topology.plugins.topo.graphml.GraphMLEdgeStatus;

if ( sourceNode != null && sourceNode.isDown() ) {

return new GraphMLEdgeStatus(OnmsSeverity.WARNING, [ 'stroke-dasharray' : '5,5', 'stroke' : 'yellow', 'stroke-width' : '6' ]);

} else {

return new GraphMLEdgeStatus(OnmsSeverity.NORMAL, [:]);

}

If the script shouldn’t contribute any status to an edge, just return null.

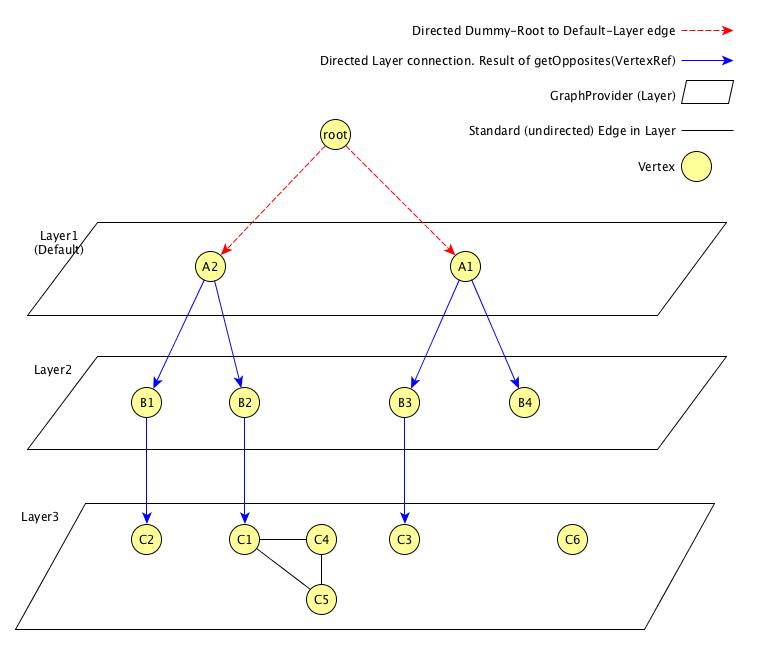

3.2.7. Layers

The GraphMLTopologyProvider can handle GraphML files with multiple graphs. Each Graph is represented as a Layer in the Topology UI. If a vertex from one graph has an edge pointing to another graph, one can navigate to that layer.

<?xml version="1.0" encoding="UTF-8"?>

<graphml xmlns="http://graphml.graphdrawing.org/xmlns"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://graphml.graphdrawing.org/xmlns

http://graphml.graphdrawing.org/xmlns/1.0/graphml.xsd">

<!-- Key section -->

<key id="label" for="graphml" attr.name="label" attr.type="string"></key>

<key id="label" for="graph" attr.name="label" attr.type="string"></key>

<key id="label" for="node" attr.name="label" attr.type="string"></key>

<key id="description" for="graph" attr.name="description" attr.type="string"></key>

<key id="namespace" for="graph" attr.name="namespace" attr.type="string"></key>

<key id="preferred-layout" for="graph" attr.name="preferred-layout" attr.type="string"></key>

<key id="focus-strategy" for="graph" attr.name="focus-strategy" attr.type="string"></key>

<key id="focus-ids" for="graph" attr.name="focus-ids" attr.type="string"></key>

<key id="semantic-zoom-level" for="graph" attr.name="semantic-zoom-level" attr.type="int"/>

<!-- Label for Topology Selection menu -->

<data key="label">Layer Example</data>

<graph id="regions">

<data key="namespace">acme:regions</data>

<data key="label">Regions</data>

<data key="description">The Regions Layer.</data>

<data key="preferred-layout">Circle Layout</data>

<data key="focus-strategy">ALL</data>

<node id="north">

<data key="label">North</data>

</node>

<node id="west">

<data key="label">West</data>

</node>

<node id="south">

<data key="label">South</data>

</node>

<node id="east">

<data key="label">East</data>

</node>

</graph>

<graph id="markets">

<data key="namespace">acme:markets</data>

<data key="description">The Markets Layer.</data>

<data key="label">Markets</data>


<data key="semantic-zoom-level">1</data>

<data key="focus-strategy">SPECIFIC</data>

<data key="focus-ids">north.2</data>

<node id="north.1">

<data key="label">North 1</data>

</node>

<node id="north.2">

<data key="label">North 2</data>

</node>

<node id="north.3">

<data key="label">North 3</data>

</node>

<node id="north.4">

<data key="label">North 4</data>

</node>

<node id="west.1">

<data key="label">West 1</data>

</node>

<node id="west.2">

<data key="label">West 2</data>

</node>

<node id="west.3">

<data key="label">West 3</data>

</node>

<node id="west.4">

<data key="label">West 4</data>

</node>

<node id="south.1">

<data key="label">South 1</data>

</node>

<node id="south.2">

<data key="label">South 2</data>

</node>

<node id="south.3">

<data key="label">South 3</data>

</node>

<node id="south.4">

<data key="label">South 4</data>

</node>

<node id="east.1">

<data key="label">East 1</data>

</node>

<node id="east.2">

<data key="label">East 2</data>

</node>

<node id="east.3">

<data key="label">East 3</data>

</node>

<node id="east.4">

<data key="label">East 4</data>

</node>

<!-- Edges in this layer -->

<edge id="north.1_north.2" source="north.1" target="north.2"/>

<edge id="north.2_north.3" source="north.2" target="north.3"/>

<edge id="north.3_north.4" source="north.3" target="north.4"/>

<edge id="east.1_east.2" source="east.1" target="east.2"/>

<edge id="east.2_east.3" source="east.2" target="east.3"/>

<edge id="east.3_east.4" source="east.3" target="east.4"/>

<edge id="south.1_south.2" source="south.1" target="south.2"/>

<edge id="south.2_south.3" source="south.2" target="south.3"/>

<edge id="south.3_south.4" source="south.3" target="south.4"/>

<edge id="west.1_west.2" source="west.1" target="west.2"/>

<edge id="west.2_west.3" source="west.2" target="west.3"/>

<edge id="west.3_west.4" source="west.3" target="west.4"/>

<!-- Edges to different layers -->

<edge id="north_north.1" source="north" target="north.1"/>

<edge id="north_north.2" source="north" target="north.2"/>

<edge id="north_north.3" source="north" target="north.3"/>

<edge id="north_north.4" source="north" target="north.4"/>

<edge id="south_south.1" source="south" target="south.1"/>

<edge id="south_south.2" source="south" target="south.2"/>

<edge id="south_south.3" source="south" target="south.3"/>

<edge id="south_south.4" source="south" target="south.4"/>

<edge id="east_east.1" source="east" target="east.1"/>

<edge id="east_east.2" source="east" target="east.2"/>

<edge id="east_east.3" source="east" target="east.3"/>

<edge id="east_east.4" source="east" target="east.4"/>

<edge id="west_west.1" source="west" target="west.1"/>

<edge id="west_west.2" source="west" target="west.2"/>

<edge id="west_west.3" source="west" target="west.3"/>

<edge id="west_west.4" source="west" target="west.4"/>

</graph>

</graphml>

3.2.8. Breadcrumbs

When multiple Layers are used, it is possible to navigate between them (using the navigate to option from the vertex's context menu).

To give the user some orientation, breadcrumbs can be enabled with the breadcrumb-strategy property.

The following strategies are supported:

- NONE: No breadcrumbs are shown.
- SHORTEST_PATH_TO_ROOT: Generates breadcrumbs from all visible vertices to the root layer (TopologyProvider). The algorithm assumes a hierarchical graph. Be aware that all vertices MUST share the same root layer, otherwise the algorithm to determine the path to root does not work.

The following figure visualizes a graphml defining multiple layers (see below for the graphml definition).

From the given example, the user can select the Breadcrumb Example Topology Provider from the menu.

The user can switch between Layer 1, Layer 2 and Layer 3.

In addition, for each vertex which has connections to another layer, the user can select the navigate to option from the context menu of that vertex to navigate to the corresponding layer.

The user can also search for all vertices and add them to focus.

The following behaviour is implemented:

- If a user navigates from one vertex to a vertex in another layer, the view switches to that layer and all vertices the source vertex points to are added to focus. The breadcrumb is <parent layer name> > <source vertex>. For example, if a user navigates from Layer1:A2 to Layer2:B1, the view switches to Layer 2 and adds B1 and B2 to focus. In addition, Layer 1 > A2 is shown as the breadcrumb.
- If a user directly switches to another layer, the default focus strategy is applied, which may result in multiple vertices with no unique parent. The calculated breadcrumb is <parent layer name> > Multiple <target layer name>. For example, if a user switches to Layer 3, all vertices of that layer are added to focus (focus-strategy=ALL). Because no unique path to root is found, the following breadcrumb is shown instead: Layer 1 > Multiple Layer 1 > Multiple Layer 2
- If a user adds a vertex to focus that is not in the currently selected layer, the view switches to that layer and only the "new" vertex is added to focus. The generated breadcrumb shows the path to root through all layers. For example, if the user adds C3 to focus while the current layer is Layer 1, the generated breadcrumb is: Layer 1 > A1 > B3.
- Only elements between layers are shown in the breadcrumb. Connections on the same layer are ignored. For example, if a user adds C5 to focus, the generated breadcrumb is: Layer 1 > A2 > B2
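The path-to-root rule can be sketched as a breadth-first search over the edges that cross layers. This is a conceptual illustration, not the OpenNMS algorithm: the class is hypothetical, the generated root vertex is modelled by starting the search from every vertex of the root layer, and labels are approximated by upper-casing the vertex ids. The vertices and edges are the Layer 1 and Layer 2 ones of this section's example graph.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Deque;
import java.util.HashMap;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.Objects;
import java.util.Set;

/** Illustrative sketch only; not the OpenNMS breadcrumb implementation. */
final class BreadcrumbSketch {

    private final Map<String, String> layerOf = new LinkedHashMap<>();
    private final Map<String, List<String>> edges = new HashMap<>();

    void addVertex(String id, String layer) {
        layerOf.put(id, layer);
    }

    void addEdge(String source, String target) {
        edges.computeIfAbsent(source, key -> new ArrayList<>()).add(target);
    }

    /** Returns the breadcrumb for the target vertex, or null if it cannot be reached from the root layer. */
    String breadcrumb(String rootLayer, String target) {
        Map<String, String> parent = new HashMap<>();
        Set<String> seen = new HashSet<>();
        Deque<String> queue = new ArrayDeque<>();
        for (Map.Entry<String, String> entry : layerOf.entrySet()) {
            if (entry.getValue().equals(rootLayer)) {
                seen.add(entry.getKey());
                queue.add(entry.getKey());
            }
        }
        while (!queue.isEmpty()) {
            String current = queue.poll();
            for (String next : edges.getOrDefault(current, Collections.emptyList())) {
                // Connections on the same layer are ignored.
                if (Objects.equals(layerOf.get(current), layerOf.get(next))) {
                    continue;
                }
                if (seen.add(next)) {
                    parent.put(next, current);
                    queue.add(next);
                }
            }
        }
        if (!seen.contains(target)) {
            return null;
        }
        Deque<String> path = new ArrayDeque<>();
        for (String vertex = parent.get(target); vertex != null; vertex = parent.get(vertex)) {
            path.addFirst(vertex.toUpperCase(Locale.ROOT));
        }
        StringBuilder breadcrumb = new StringBuilder(rootLayer);
        for (String vertex : path) {
            breadcrumb.append(" > ").append(vertex);
        }
        return breadcrumb.toString();
    }

    /** Builds the part of the example graph needed for the C3 and B1 breadcrumbs. */
    static BreadcrumbSketch example() {
        BreadcrumbSketch graph = new BreadcrumbSketch();
        for (String id : new String[] {"a1", "a2"}) graph.addVertex(id, "Layer 1");
        for (String id : new String[] {"b1", "b2", "b3", "b4"}) graph.addVertex(id, "Layer 2");
        for (String id : new String[] {"c1", "c2", "c3"}) graph.addVertex(id, "Layer 3");
        graph.addEdge("a1", "b3");
        graph.addEdge("a1", "b4");
        graph.addEdge("a2", "b1");
        graph.addEdge("a2", "b2");
        graph.addEdge("b1", "c2");
        graph.addEdge("b2", "c1");
        graph.addEdge("b3", "c3");
        return graph;
    }

    public static void main(String[] args) {
        System.out.println(example().breadcrumb("Layer 1", "c3"));
    }
}
```

For C3 the search finds a1, then b3, then c3, which yields the breadcrumb Layer 1 > A1 > B3 described in the third rule.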

The following graphml file defines the graph shown above. Be aware that the root vertex shown above is generated to help calculate the path to root. It must not be defined in the graphml document.

<?xml version="1.0" encoding="UTF-8"?>

<graphml xmlns="http://graphml.graphdrawing.org/xmlns"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://graphml.graphdrawing.org/xmlns

http://graphml.graphdrawing.org/xmlns/1.0/graphml.xsd">

<key id="breadcrumb-strategy" for="graphml" attr.name="breadcrumb-strategy" attr.type="string"></key>

<key id="label" for="all" attr.name="label" attr.type="string"></key>

<key id="description" for="graph" attr.name="description" attr.type="string"></key>

<key id="namespace" for="graph" attr.name="namespace" attr.type="string"></key>

<key id="focus-strategy" for="graph" attr.name="focus-strategy" attr.type="string"></key>

<key id="focus-ids" for="graph" attr.name="focus-ids" attr.type="string"></key>

<key id="preferred-layout" for="graph" attr.name="preferred-layout" attr.type="string"></key>

<key id="semantic-zoom-level" for="graph" attr.name="semantic-zoom-level" attr.type="int"/>

<data key="label">Breadcrumb Example</data>

<data key="breadcrumb-strategy">SHORTEST_PATH_TO_ROOT</data>

<graph id="L1">

<data key="label">Layer 1</data>

<data key="namespace">acme:layer1</data>

<data key="focus-strategy">ALL</data>

<data key="preferred-layout">Circle Layout</data>

<node id="a1">

<data key="label">A1</data>

</node>

<node id="a2">

<data key="label">A2</data>

</node>

<edge id="a1_b3" source="a1" target="b3"/>

<edge id="a1_b4" source="a1" target="b4"/>

<edge id="a2_b1" source="a2" target="b1"/>

<edge id="a2_b2" source="a2" target="b2"/>

</graph>

<graph id="L2">

<data key="label">Layer 2</data>

<data key="focus-strategy">ALL</data>

<data key="namespace">acme:layer2</data>

<data key="preferred-layout">Circle Layout</data>

<data key="semantic-zoom-level">0</data>

<node id="b1">

<data key="label">B1</data>

</node>

<node id="b2">

<data key="label">B2</data>

</node>

<node id="b3">

<data key="label">B3</data>

</node>

<node id="b4">

<data key="label">B4</data>

</node>

<edge id="b1_c2" source="b1" target="c2"/>

<edge id="b2_c1" source="b2" target="c1"/>

<edge id="b3_c3" source="b3" target="c3"/>

</graph>

<graph id="Layer 3">

<data key="label">Layer 3</data>

<data key="focus-strategy">ALL</data>

<data key="description">Layer 3</data>

<data key="namespace">acme:layer3</data>

<data key="preferred-layout">Grid Layout</data>

<data key="semantic-zoom-level">1</data>

<node id="c1">

<data key="label">C1</data>

</node>

<node id="c2">

<data key="label">C2</data>

</node>

<node id="c3">

<data key="label">C3</data>

</node>

<node id="c4">

<data key="label">C4</data>

</node>

<node id="c5">

<data key="label">C5</data>

</node>

<node id="c6">

<data key="label">C6</data>

</node>

<edge id="c1_c4" source="c1" target="c4"/>

<edge id="c1_c5" source="c1" target="c5"/>

<edge id="c4_c5" source="c4" target="c5"/>

</graph>

</graphml>

4. CORS Support

4.1. Why do I need CORS support?

By default, many browsers implement a same origin policy which prevents making requests to a resource, on an origin that’s different from the source origin.

For example, a request originating from a page served from http://www.opennms.org to a resource on http://www.adventuresinoss.com would be considered a cross origin request.

CORS (Cross Origin Resource Sharing) is a standard mechanism used to enable cross origin requests.
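As a rough illustration of what "same origin" means, the following Python sketch compares scheme, host and port of two URLs. It only mirrors the comparison a browser performs and is not part of OpenNMS; the helper names `origin` and `is_cross_origin` are hypothetical.

```python
from urllib.parse import urlsplit

# Default ports used when a URL does not name one explicitly.
DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url):
    """Return the (scheme, host, port) triple that identifies an origin."""
    parts = urlsplit(url)
    return (parts.scheme, parts.hostname,
            parts.port or DEFAULT_PORTS.get(parts.scheme))


def is_cross_origin(page_url, resource_url):
    """True if a request from page_url to resource_url crosses origins."""
    return origin(page_url) != origin(resource_url)
```

With the two hosts from the example above, `is_cross_origin("http://www.opennms.org/", "http://www.adventuresinoss.com/")` evaluates to `True`.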

For further details, see:

4.2. How can I enable CORS support?

CORS support for the REST interface (or any other part of the Web UI) can be enabled as follows:

-

Open '$OPENNMS_HOME/jetty-webapps/opennms/WEB-INF/web.xml' for editing.

-

Apply the CORS filter to the '/rest/' path by removing the comments around the <filter-mapping> definition. The result should look like:

<!-- Uncomment this to enable CORS support -->
<filter-mapping>
  <filter-name>CORS Filter</filter-name>
  <url-pattern>/rest/*</url-pattern>
</filter-mapping>

-

Restart OpenNMS Horizon

4.3. How can I configure CORS support?

CORS support is provided by the org.ebaysf.web.cors.CORSFilter servlet filter.

Parameters can be configured by modifying the filter definition in the 'web.xml' file referenced above.

By default, the allowed origins parameter is set to '*'.

The complete list of supported parameters is available from:

5. ReST API

A RESTful interface is a web service conforming to the REST architectural style as described in the book RESTful Web Services. This page describes the RESTful interface for OpenNMS Horizon.

5.1. ReST URL

The base URL for Rest Calls is: http://opennmsserver:8980/opennms/rest/

For instance, http://localhost:8980/opennms/rest/alarms/ will give you the current alarms in the system.

5.2. Authentication

Use HTTP Basic authentication to provide a valid username and password. By default you will not receive a challenge, so you must configure your ReST client library to send basic authentication proactively.
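Because no challenge is sent, the client has to attach the credentials to the first request. A minimal Python sketch using only the standard library is shown below; the function names are hypothetical, and `admin`/`admin` are simply the credentials used in the curl examples of this guide.

```python
import base64
import urllib.request


def basic_auth_header(username, password):
    """Return the value of a preemptive HTTP Basic Authorization header."""
    raw = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def authenticated_request(url, username, password):
    """Build a request that carries the credentials without waiting for a 401."""
    request = urllib.request.Request(url)
    request.add_header("Authorization", basic_auth_header(username, password))
    return request
```

The returned object can be passed to `urllib.request.urlopen` against a running server.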

5.3. Data format

Jersey allows ReST calls to be made using either XML or JSON.

By default a request to the API is returned in XML. XML is delivered without namespaces. Please note: If a namespace is added manually in order to use a XML tool to validate against the XSD (like xmllint) it won’t be preserved when OpenNMS updates that file. The same applies to comments.

To get JSON encoded responses one has to send the following header with the request: Accept: application/json.
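As a sketch of the header handling described above, a Python client might request JSON like this. The helper names `json_request` and `decode_body` are hypothetical, and no particular response structure is assumed.

```python
import json
import urllib.request


def json_request(url):
    """Build a GET request that asks the server for a JSON response."""
    return urllib.request.Request(url, headers={"Accept": "application/json"})


def decode_body(body):
    """Decode a JSON response body (bytes) into Python objects."""
    return json.loads(body.decode("utf-8"))
```

Without the `Accept` header the same request would be answered in XML.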

5.4. Standard Parameters

The following are standard params which are available on most resources (noted below)

| Parameter | Description |
|---|---|
| limit | integer, limiting the number of results. This is particularly handy on events and notifications, where an accidental call with no limit could result in many thousands of results being returned, killing either the client or the server. If set to 0, no limit is applied |
| offset | integer, being the numeric offset into the result set from which results should start being returned. E.g., if there are 100 result entries, offset is 15, and limit is 10, then entries 15-24 will be returned. Used for pagination |

Filtering: All properties of the entity being accessed can be specified as parameters in either the URL (for GET) or the form value (for PUT and POST). If so, the value will be used to add a filter to the result. By default, the operation is equality, unless the comparator parameter is set. The following comparators are available:

|

|

Checks for equality |

|

Checks for non-equality |

|

Case-insensitive wildcarding ( |

|

Case-sensitive wildcarding ( |

|

Greater than |

|

Less than |

|

Greater than or equal |

|

Less than or equal |

If the value null is passed for a given property, then the obvious operation will occur (comparator will be ignored for that property).

notnull is handled similarly.

-

Ordering: If the parameter orderBy is specified, results will be ordered by the named property. The default is ascending, unless the order parameter is set to desc (any other value will default to ascending).
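The standard parameters can be combined into a query string as in the following Python sketch. The function names `build_query_url` and `page_indices` are hypothetical, the base URL is the one given in section 5.1 for a local server, and the pagination helper only mirrors the arithmetic described for offset and limit.

```python
from urllib.parse import urlencode

BASE_URL = "http://localhost:8980/opennms/rest/"


def build_query_url(resource, limit=None, offset=None, order_by=None,
                    descending=False, comparator=None, **filters):
    """Assemble a ReST URL from the standard parameters and property filters."""
    params = {}
    if limit is not None:
        params["limit"] = limit
    if offset is not None:
        params["offset"] = offset
    if comparator is not None:
        params["comparator"] = comparator
    if order_by is not None:
        params["orderBy"] = order_by
        if descending:
            params["order"] = "desc"
    params.update(filters)  # every remaining keyword is a property filter
    query = urlencode(params)
    return f"{BASE_URL}{resource}?{query}" if query else f"{BASE_URL}{resource}"


def page_indices(offset, limit, total):
    """Indices of the entries returned for a page; limit=0 means no limit."""
    end = total if limit == 0 else min(offset + limit, total)
    return list(range(offset, end))
```

For 100 entries, `page_indices(15, 10, 100)` covers entries 15 through 24, matching the description of offset above.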

5.5. Standard filter examples

Take /events as an example.

| Resource | Description |

|---|---|

|

would return the first 10 events with the rtc subscribe UEI, (10 being the default limit for events) |

|

would return all the rtc subscribe events (potentially quite a few) |

|

would return the first 10 events with an id greater than 100 |

|

would return the first 10 events that have a non-null Ack time (i.e. those that have been acknowledged) |

|

would return the first 20 events that have a non-null Ack time and an id greater than 100. Note that the notnull value causes the comparator to be ignored for eventAckTime |

|

would return the first 20 events that were acknowledged after 28th July 2008 at 4:41am (+12:00) and that have an id greater than 100. Note that the same comparator applies to both property comparisons. Also note that you must URL encode the plus sign when using GET. |

|

would return the 10 latest events inserted (probably, unless you’ve been messing with the id’s) |

|

would return the first 10 events associated with some node in location 'MINION' |
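The remark about URL encoding the plus sign can be demonstrated with Python's standard library. The timestamp below is an assumed ISO-8601 rendering of the date mentioned in the table, used only to show the effect; the exact format expected by the server is not specified here.

```python
from urllib.parse import parse_qs, urlencode


def encode_filters(filters):
    """URL encode filter values so that '+' survives the round trip."""
    return urlencode(filters)


# Assumed timestamp format, for illustration only.
ACK_TIME = "2008-07-28T04:41:30.530+12:00"

encoded = encode_filters({"eventAckTime": ACK_TIME, "id": 100, "limit": 20})
decoded_ok = parse_qs(encoded)["eventAckTime"][0]
# An unencoded '+' is decoded as a space, which corrupts the timezone offset.
decoded_raw = parse_qs("eventAckTime=" + ACK_TIME)["eventAckTime"][0]
```

Here `decoded_ok` equals the original timestamp, while `decoded_raw` contains a space where the plus sign used to be.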

5.6. HTTP Return Codes

The following apply for OpenNMS Horizon 18 and newer.

-

DELETE requests are going to return a 202 (ACCEPTED) if they are performed asynchronously otherwise they return a 204 (NO_CONTENT) on success.

-

All the PUT requests are going to return a 204 (NO_CONTENT) on success.

-

All the POST requests that can either add or update an entity are going to return a 204 (NO_CONTENT) on success.

-

All the POST associated to resource addition are going to return a 201 (CREATED) on success.

-

All the POST requests where it is required to return an object will return a 200 (OK).

-

All requests except GET for the Requisitions end-point and the Foreign Sources Definitions end-point will return 202 (ACCEPTED). This is because all these requests are actually executed asynchronously and there is no way to know the status of the execution, or to wait until the processing is done.

-

If a resource is not modified during a PUT request, a 304 (NOT_MODIFIED) will be returned. A 204 (NO_CONTENT) will be returned only on a successful operation.

-

All GET requests are going to return 200 (OK) on success.

-

All GET requests are going to return 404 (NOT_FOUND) when a single resource doesn’t exist; but will return 400 (BAD_REQUEST), if an intermediate resource doesn’t exist. For example, if a specific IP doesn’t exist on a valid node, return 404. But, if the IP is valid and the node is not valid, because the node is an intermediate resource, a 400 will be returned.

-

If something not expected is received from the Service/DAO Layer when processing any HTTP request, like an exception, a 500 (INTERNAL_SERVER_ERROR) will be returned.

-

Any problem related with the incoming parameters, like validations, will generate a 400 (BAD_REQUEST).
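A client-side check of these rules could look like the following Python sketch. It only encodes the success codes listed above; the function name and its keyword flags are hypothetical and are not part of any OpenNMS client library.

```python
def expected_success_status(method, *, asynchronous=False,
                            creates=False, returns_object=False):
    """Return the HTTP status a successful request should produce.

    asynchronous   -- DELETE performed asynchronously, or any non-GET request
                      to the Requisitions / Foreign Sources end-points
    creates        -- POST that adds a new resource
    returns_object -- POST that is required to return an object
    """
    method = method.upper()
    if method == "GET":
        return 200
    if asynchronous:
        return 202
    if method in ("PUT", "DELETE"):
        return 204
    if method == "POST":
        if returns_object:
            return 200
        if creates:
            return 201
        return 204  # POST that can either add or update an entity
    raise ValueError(f"unsupported method: {method}")
```

A test suite can compare the status of each response against this function and treat 400, 404 and 500 as the error cases described in the list.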

5.7. Identifying Resources

Some endpoints deal in resources, which are identified by Resource IDs. Since every resource is ultimately parented under some node, identifying the node which contains a resource is the first step in constructing a resource ID. Two styles are available for identifying the node in a resource ID:

| Style | Description | Example |

|---|---|---|

|

Identifies a node by its database ID, which is always an integer |

|

|

Identifies a node by its foreign-source name and foreign-ID, joined by a single colon |

|

The node identifier is followed by a period, then a resource-type name and instance name. The instance name’s characteristics may vary from one resource-type to the next. A few examples:

| Value | Description |

|---|---|

|

Node-level (scalar) performance data for the node in question. This type is the only one where the instance identifier is empty. |

|

A layer-two interface as represented by a row in the SNMP |

|

The root filesystem of a node running the Net-SNMP management agent. |

Putting it all together, here are a few well-formed resource IDs:

-

node[1].nodeSnmp[] -

node[42].interfaceSnmp[eth0-04013f75f101] -

node[Servers:115da833-0957-4471-b496-a731928c27dd].dskIndex[_root_fs]
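The construction rule can be expressed as a small Python helper. This is a sketch based only on the format described above; the function names `node_criteria` and `resource_id` are hypothetical.

```python
def node_criteria(node_id=None, foreign_source=None, foreign_id=None):
    """Return the node part of a resource ID in one of the two styles."""
    if node_id is not None:
        return str(int(node_id))  # database ID, always an integer
    if foreign_source and foreign_id:
        return f"{foreign_source}:{foreign_id}"  # joined by a single colon
    raise ValueError("need either node_id or foreign_source and foreign_id")


def resource_id(node, resource_type, instance=""):
    """Join node identifier, resource-type name and instance name."""
    return f"node[{node}].{resource_type}[{instance}]"
```

For example, `resource_id(node_criteria(node_id=42), "interfaceSnmp", "eth0-04013f75f101")` yields the second resource ID from the list above.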

5.8. Expose ReST services via OSGi

In order to expose a ReST service via OSGi the following steps must be followed:

-

Define an interface, containing java jax-rs annotations

-

Define a class, implementing that interface

-

Create an OSGi bundle which exports a service with the interface from above

5.8.1. Define a ReST interface

First, create a public interface that contains JAX-RS annotations.

@Path("/datachoices") (1)
public interface DataChoiceRestService {

    @POST (2)
    void updateCollectUsageStatisticFlag(@Context HttpServletRequest request, @QueryParam("action") String action);

    @GET
    @Produces(value={MediaType.APPLICATION_JSON})
    UsageStatisticsReportDTO getUsageStatistics();
}

| 1 | Each ReST interface must have either a @Path or a @Provider annotation. Otherwise it is not considered a ReST service. |

| 2 | Use JAX-RS annotations, such as @POST, @GET, @PUT, @Path, etc. to define the ReST service. |

5.8.2. Implement a ReST interface

A class must implement the ReST interface.

| The class may or may not repeat the jax-rs annotations from the interface. This is purely for readability. Changing or adding different jax-rs annotations on the class, won’t have any effect. |

public class DataChoiceRestServiceImpl implements DataChoiceRestService {

    @Override
    public void updateCollectUsageStatisticFlag(HttpServletRequest request, String action) {
        // do something
    }

    @Override
    public UsageStatisticsReportDTO getUsageStatistics() {
        return null;
    }
}

5.8.3. Export the ReST service

Finally, the ReST service must be exported via the BundleContext. This can be achieved using either an Activator or the blueprint mechanism.

<blueprint xmlns="http://www.osgi.org/xmlns/blueprint/v1.0.0"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="
        http://www.osgi.org/xmlns/blueprint/v1.0.0
        http://www.osgi.org/xmlns/blueprint/v1.0.0/blueprint.xsd
    ">

    <bean id="dataChoiceRestService" class="org.opennms.features.datachoices.web.internal.DataChoiceRestServiceImpl" /> (1)

    <service interface="org.opennms.features.datachoices.web.DataChoiceRestService" ref="dataChoiceRestService" > (2)
        <service-properties>
            <entry key="application-path" value="/rest" /> (3)
        </service-properties>
    </service>

</blueprint>

| 1 | Create the ReST implementation class |

| 2 | Export the ReST service |

| 3 | Define where the ReST service will be exported to, e.g. /rest, /api/v2, but also completely different paths can be used. If not defined, /services is used. |

For a full working example refer to the datachoices feature.

5.9. Currently Implemented Interfaces

5.9.1. Acknowledgements

| The default offset is 0, the default limit is 10 results. To get all results, use limit=0 as a parameter on the URL (i.e., GET /acks?limit=0). |

GETs (Reading Data)

| Resource | Description |

|---|---|

|

Get a list of acknowledgements. |

|

Get the number of acknowledgements. (Returns plaintext, rather than XML or JSON.) |

|

Get the acknowledgement specified by the given ID. |

POSTs (Setting Data)

| Resource | Description |

|---|---|

|

Creates or modifies an acknowledgement for the given alarm ID or notification ID. To affect an alarm, set an |

Usage examples with curl

curl -u 'admin:admin' -X POST -d notifId=3 -d action=ack http://localhost:8980/opennms/rest/acks

curl -u 'admin:admin' -X POST -d alarmId=42 -d action=esc http://localhost:8980/opennms/rest/acks

5.9.2. Alarm Statistics

It is possible to get some basic statistics on alarms, including the number of acknowledged alarms, total alarms, and the newest and oldest of acknowledged and unacknowledged alarms.

GETs (Reading Data)

| Resource | Description |

|---|---|

|

Returns statistics related to alarms. Accepts the same Hibernate parameters that you can pass to the |

|

Returns the statistics related to alarms, one per severity. You can optionally pass a list of severities to the |

5.9.3. Alarms

| The default offset is 0, the default limit is 10 results. To get all results, use limit=0 as a parameter on the URL (i.e., GET /alarms?limit=0). |

GETs (Reading Data)

| Resource | Description |

|---|---|

|

Get a list of alarms. |

|

Get the number of alarms. (Returns plaintext, rather than XML or JSON.) |

|

Get the alarms specified by the given ID. |

Note that you can also query by severity, like so:

| Resource | Description |

|---|---|

|

Get the alarms with a severity greater than or equal to MINOR. |

PUTs (Modifying Data)

PUT requires form data using application/x-www-form-urlencoded as a Content-Type.

| Resource | Description |

|---|---|

|

Acknowledges (or unacknowledges) an alarm. |

|

Acknowledges (or unacknowledges) alarms matching the additional query parameters. eg, |

New in OpenNMS 1.11.0

In OpenNMS 1.11.0, some additional features are supported in the alarm ack API:

| Resource | Description |

|---|---|

|

Clears an alarm. |

|

Escalates an alarm. eg, NORMAL → MINOR, MAJOR → CRITICAL, etc. |

|

Clears alarms matching the additional query parameters. |

|

Escalates alarms matching the additional query parameters. |

Additionally, when acknowledging alarms (ack=true) you can now specify an ackUser parameter.

You will only be allowed to ack as a different user IF you are PUTting as an authenticated user who is in the admin role.
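The PUT requirements above (form-encoded body, `ack` and optional `ackUser` parameters) might be met by a Python client as sketched below. The resource path `alarms/{id}` and the choice to send the parameters in the request body are assumptions made for this illustration, and the function name is hypothetical.

```python
import urllib.request
from urllib.parse import urlencode


def ack_alarm_request(base_url, alarm_id, ack=True, ack_user=None):
    """Build a form-encoded PUT that (un)acknowledges a single alarm.

    Assumption: the alarm is addressed as <base_url>alarms/<id>.
    """
    form = {"ack": "true" if ack else "false"}
    if ack_user is not None:
        form["ackUser"] = ack_user  # only honoured for users in the admin role
    return urllib.request.Request(
        f"{base_url}alarms/{alarm_id}",
        data=urlencode(form).encode("ascii"),
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="PUT",
    )
```

The request object still needs an Authorization header before it is sent, since acknowledging on behalf of another user requires an authenticated admin.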

5.9.4. Events

GETs (Reading Data)

| Resource | Description |

|---|---|

|

Get a list of events. The default for offset is 0, and the default for limit is 10. To get all results, use limit=0 as a parameter on the URL (ie, |

|

Get the number of events. (Returns plaintext, rather than XML or JSON.) |

|

Get the event specified by the given ID. |

PUTs (Modifying Data)

PUT requires form data using application/x-www-form-urlencoded as a Content-Type.

| Resource | Description |

|---|---|

|

Acknowledges (or unacknowledges) an event. |

|

Acknowledges (or unacknowledges) the matching events. |

POSTs (Adding Data)

POST requires XML (application/xml) or JSON (application/json) as its Content-Type.

See ${OPENNMS_HOME}/share/xsds/event.xsd for the reference schema.

|

| Resource | Description |

|---|---|

|

Publish an event on the event bus. |

5.9.5. Categories

GETs (Reading Data)

| Resource | Description |

|---|---|

|

Get all configured categories. |

|

Get the category specified by the given name. |

|

Get the category specified by the given name for the given node (similar to |

|

Get the categories for a given node (similar to |

|

Get the categories for a given user group (similar to |

POSTs (Adding Data)

| Resource | Description |

|---|---|

|

Adds a new category. |

PUTs (Modifying Data)

| Resource | Description |

|---|---|

|

Update the specified category |

|

Modify the category with the given node ID and name (similar to |

|

Add the given category to the given user group (similar to |

DELETEs (Removing Data)

| Resource | Description |

|---|---|

|

Delete the specified category |

|

Remove the given category from the given node (similar to |

|

Remove the given category from the given user group (similar to |

5.9.6. Flow API

The Flow API can be used to retrieve summary statistics and time series data derived from persisted flows.

| Unless specified otherwise, all units of time are expressed in milliseconds. |

GETs (Reading Data)

| Resource | Description |

|---|---|

|

Retrieve the number of flows available |

|

Retrieve basic information for the exporter nodes that have flows available |

|

Retrieve detailed information about a specific exporter node |

|

Retrieve traffic summary statistics for the top N applications |

|

Retrieve time series metrics for the top N applications |

|

Retrieve traffic summary statistics for the top N conversations |

|

Retrieve time series metrics for the top N conversations |

All of the endpoints support the following query string parameters to help filter the results:

The given filters are combined using a logical AND.

There is no support for using OR logic, or combinations thereof.

| name | default | comment |
|---|---|---|
| start | -14400000 | Timestamp in milliseconds. If > 0, the timestamp is relative to the UNIX epoch (January 1st 1970 00:00:00 AM). If < 0, the timestamp is relative to the |
| end | 0 | Timestamp in milliseconds. If <= 0, the effective value will be the current timestamp. |
| ifIndex | (none) | Filter for flows that came in through the given SNMP interface. |
| exporterNode | (none) | Filter for flows that were exported by the given node. Supports either a node ID (integer), e.g. 1, or a foreign source and foreign ID lookup, e.g. FS:FID. |

The exporters endpoints do not support any parameters.

The applications endpoints also support:

| name | default | comment |

|---|---|---|

| N | 10 | Number of top entries (determined by total bytes transferred) to return |
| includeOther | false | When set to |

The applications and conversations endpoints also support:

| name | default | comment |

|---|---|---|

| N | 10 | Number of top entries (determined by total bytes transferred) to return |

The series endpoints also support:

| name | default | comment |

|---|---|---|

| step | 300000 | Requested time interval between rows. |
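How the defaults for start and end turn into an absolute time window can be sketched in Python. The sketch assumes that a negative start is counted back from the effective end timestamp; this matches the sample responses in this section, where end minus start equals 14400000 ms (four hours). The function name is hypothetical.

```python
import time


def resolve_time_window(start=-14400000, end=0, now_ms=None):
    """Return the effective (start, end) pair in epoch milliseconds.

    Assumption: a negative start is relative to the effective end.
    """
    if now_ms is None:
        now_ms = int(time.time() * 1000)
    effective_end = end if end > 0 else now_ms
    effective_start = start if start > 0 else effective_end + start
    return effective_start, effective_end
```

With the defaults and a current time of 1513802444417, the function returns the start and end values shown in the applications example below.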

Examples

curl -u admin:admin http://localhost:8980/opennms/rest/flows/count

915

curl -u admin:admin http://localhost:8980/opennms/rest/flows/applications

{

"start": 1513788044417,

"end": 1513802444417,

"headers": ["Application", "Bytes In", "Bytes Out"],

"rows": [

["https", 48789, 136626],

["http", 12430, 5265]

]

}

curl -u admin:admin http://localhost:8980/opennms/rest/flows/conversations

{

"start": 1513788228224,

"end": 1513802628224,

"headers": ["Location", "Protocol", "Source IP", "Source Port", "Dest. IP", "Dest. Port", "Bytes In", "Bytes Out"],

"rows": [

["Default", 17, "10.0.2.15", 33816, "172.217.0.66", 443, 12166, 117297],

["Default", 17, "10.0.2.15", 32966, "172.217.0.70", 443, 5042, 107542],

["Default", 17, "10.0.2.15", 54087, "172.217.0.67", 443, 55393, 5781],

["Default", 17, "10.0.2.15", 58046, "172.217.0.70", 443, 4284, 46986],

["Default", 6, "10.0.2.15", 39300, "69.172.216.58", 80, 969, 48178],

["Default", 17, "10.0.2.15", 48691, "64.233.176.154", 443, 8187, 39847],

["Default", 17, "10.0.2.15", 39933, "172.217.0.65", 443, 1158, 33913],

["Default", 17, "10.0.2.15", 60751, "216.58.218.4", 443, 5504, 24957],

["Default", 17, "10.0.2.15", 51972, "172.217.0.65", 443, 2666, 22556],

["Default", 6, "10.0.2.15", 46644, "31.13.65.7", 443, 459, 16952]

]

}

curl -u admin:admin "http://localhost:8980/opennms/rest/flows/applications/series?N=3&includeOther=true&step=3600000"

{

"start": 1516292071742,

"end": 1516306471742,

"columns": [

{

"label": "domain",

"ingress": true

},

{

"label": "https",

"ingress": true

},

{

"label": "http",

"ingress": true

},

{

"label": "Other",

"ingress": true

}

],

"timestamps": [

1516291200000,

1516294800000,

1516298400000

],

"values": [

[9725, 12962, 9725],

[70665, 125044, 70585],

[10937,13141,10929],

[1976,2508,2615]

]

}

curl -u admin:admin "http://localhost:8980/opennms/rest/flows/conversations/series?N=3&step=3600000"

{

"start": 1516292150407,

"end": 1516306550407,

"columns": [

{

"label": "10.0.2.15:55056 <-> 152.19.134.142:443",

"ingress": false

},

{

"label": "10.0.2.15:55056 <-> 152.19.134.142:443",

"ingress": true

},

{

"label": "10.0.2.15:55058 <-> 152.19.134.142:443",

"ingress": false

},

{

"label": "10.0.2.15:55058 <-> 152.19.134.142:443",

"ingress": true

},

{

"label": "10.0.2.2:61470 <-> 10.0.2.15:8980",

"ingress": false

},

{

"label": "10.0.2.2:61470 <-> 10.0.2.15:8980",

"ingress": true

}

],

"timestamps": [

1516294800000,

1516298400000

],

"values": [

[17116,"NaN"],

[1426,"NaN"],

[20395,"NaN"],

[1455,"NaN"],

["NaN",5917],

["NaN",2739]

]

}

5.9.7. Flow Classification API

The Flow Classification API can be used to update, create or delete flow classification rules.

| If not otherwise specified, the Content-Type of the response is application/json. |

GETs (Reading Data)

| Resource | Description |

|---|---|

|

Retrieve a list of all enabled rules.

The request is limited to |

|

Retrieve the rule identified by |

|

Retrieve all existing groups.

The request is limited to |

|

Retrieve the group identified by |

|

Retrieve all supported tcp protocols. |

The /classifications endpoint supports the following url parameters:

The given filters are combined using a logical AND.

There is no support for using OR logic, or combinations thereof.

| name | default | comment |
|---|---|---|
| groupFilter | (none) | The group to filter the rules by. Should be the |
| query | (none) | |

Examples

curl -X GET -u admin:admin http://localhost:8980/opennms/rest/classifications

[

{

"group": {

"description": "Classification rules defined by the user",

"enabled": true,

"id": 2,

"name": "user-defined",

"priority": 10,

"readOnly": false,

"ruleCount": 1

},

"id": 1,

"ipAddress": null,

"name": "http",

"port": "80",

"position": 0,

"protocols": [

"TCP"

]

}

]

curl -X GET -u admin:admin http://localhost:8980/opennms/rest/classifications/groups

[

{

"description": "Classification rules defined by OpenNMS",

"enabled": false,

"id": 1,

"name": "pre-defined",

"priority": 0,

"readOnly": true,

"ruleCount": 6248

},

{

"description": "Classification rules defined by the user",

"enabled": true,

"id": 2,

"name": "user-defined",

"priority": 10,

"readOnly": false,

"ruleCount": 1

}

]

curl -X GET -u admin:admin http://localhost:8980/opennms/rest/classifications/1

{

"group": {

"description": "Classification rules defined by the user",

"enabled": true,

"id": 2,

"name": "user-defined",

"priority": 10,

"readOnly": false,

"ruleCount": 1

},

"id": 1,

"ipAddress": null,

"name": "http",

"port": "80",

"position": 0,

"protocols": [

"TCP"

]

}

curl -X GET -H "Accept: application/json" -u admin:admin http://localhost:8980/opennms/rest/classifications/groups/1

{

"description": "Classification rules defined by OpenNMS",

"enabled": false,

"id": 1,

"name": "pre-defined",

"priority": 0,

"readOnly": true,

"ruleCount": 6248

}

curl -X GET -H "Accept: text/comma-separated-values" -u admin:admin http://localhost:8980/opennms/rest/classifications/groups/2

name;ipAddress;port;protocol

http;;80;TCP

POSTs (Creating Data)

| Resource | Description |

|---|---|

|

Post a new rule or import rules from CSV. If multiple rules are imported (to user-defined group) from a CSV file all existing rules are deleted. |

|

Classify the given request based on all enabled rules. |

Examples

curl -X POST -H "Content-Type: application/json" -u admin:admin -d '{"name": "http", "port":"80,8080", "protocols":["tcp", "udp"]}' http://localhost:8980/opennms/rest/classifications

HTTP/1.1 201 Created

Date: Thu, 08 Feb 2018 14:44:27 GMT

Location: http://localhost:8980/opennms/rest/classifications/6616

curl -X POST -H "Content-Type: application/json" -u admin:admin -d '{"protocol": "tcp", "ipAddress": "192.168.0.1", "port" :"80"}' http://localhost:8980/opennms/rest/classifications/classify

{

"classification":"http"

}

curl -X POST -H "Content-Type: application/json" -u admin:admin -d '{"protocol": "tcp", "ipAddress": "192.168.0.1", "port" :"8980"}' http://localhost:8980/opennms/rest/classifications/classify

HTTP/1.1 204 No Content

curl -X POST -H "Content-Type: text/comma-separated-values" -u admin:admin -d $'name;ipAddress;port;protocol\nOpenNMS;;8980;tcp,udp\n' http://localhost:8980/opennms/rest/classifications\?hasHeader\=true

HTTP/1.1 204 No Content

curl -X POST -H "Content-Type: text/comma-separated-values" -u admin:admin -d $'OpenNMS;;INCORRECT;tcp,udp\nhttp;;80,8080;ULF' http://localhost:8980/opennms/rest/classifications\?hasHeader\=false

{

"errors": {

"1": {

"context": "port",

"key": "rule.port.definition.invalid",

"message": "Please provide a valid port definition. Allowed values are numbers between 0 and 65536. A range can be provided, e.g. \"4000-5000\", multiple values are allowed, e.g. \"80,8080\""

},

"2": {

"context": "protocol",

"key": "rule.protocol.doesnotexist",

"message": "The defined protocol 'ULF' does not exist"

}

},

"success": false

}

PUTs (Updating Data)

| Resource | Description |

|---|---|

|

Update a rule identified by |

|

Retrieve the rule identified by |

|

Update a group. At the moment, only the enabled property can be changed |

DELETEs (Deleting Data)

| Resource | Description |

|---|---|

|

Deletes all rules of a given group. |

|

Delete the given group and all the rules it contains. |
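The port definition format named in the validation message of the CSV import example (single ports, ranges such as "4000-5000", and comma-separated lists such as "80,8080") can be checked on the client before a rule is posted. The following Python sketch is not the server-side validator; the function name is hypothetical, and the upper bound of 65535 is an assumption, since the message wording leaves the exact limit open.

```python
MAX_PORT = 65535  # assumption: highest valid port number


def parse_port_definition(definition):
    """Parse a port definition into a list of inclusive (low, high) ranges.

    Raises ValueError if any part is not a number or a valid range.
    """
    ranges = []
    for part in definition.split(","):
        low, sep, high = part.strip().partition("-")
        if not low.isdigit() or (sep and not high.isdigit()):
            raise ValueError(f"invalid port definition: {part!r}")
        first = int(low)
        last = int(high) if sep else first
        if first > last or last > MAX_PORT:
            raise ValueError(f"port out of range: {part!r}")
        ranges.append((first, last))
    return ranges
```

The value `INCORRECT` from the failing CSV row is rejected by this check, while `80,8080` and `4000-5000` are accepted.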

5.9.8. Foreign Sources

ReSTful service to the OpenNMS Horizon Provisioning Foreign Source definitions. Foreign source definitions are used to control the scanning (service detection) of services for SLA monitoring as well as the data collection settings for physical interfaces (resources).

This API supports CRUD operations for managing the Provisioner’s foreign source definitions. Foreign source definitions are POSTed and will be deployed when the corresponding requisition gets imported/synchronized by Provisiond.

If a request says that it gets the "active" foreign source, that means it returns the pending foreign source (being edited for deployment) if there is one, otherwise it returns the deployed foreign source.

GETs (Reading Data)

| Resource | Description |

|---|---|

|

Get all active foreign sources. |

|

Get the active default foreign source. |

|

Get the list of all deployed (active) foreign sources. |

|

Get the number of deployed foreign sources. (Returns plaintext, rather than XML or JSON.) |

|

Get the active foreign source named {name}. |

|

Get the configured detectors for the foreign source named {name}. |

|

Get the specified detector for the foreign source named {name}. |

|

Get the configured policies for the foreign source named {name}. |

|

Get the specified policy for the foreign source named {name}. |

POSTs (Adding Data)

POST requires XML using application/xml as its Content-Type.

| Resource | Description |

|---|---|

|

Add a foreign source. |

|

Add a detector to the named foreign source. |

|

Add a policy to the named foreign source. |

PUTs (Modifying Data)

PUT requires form data using application/x-www-form-urlencoded as a Content-Type.

| Resource | Description |

|---|---|

|

Modify a foreign source with the given name. |

DELETEs (Removing Data)

| Resource | Description |

|---|---|

|

Delete the named foreign source. |

|

Delete the specified detector from the named foreign source. |

|

Delete the specified policy from the named foreign source. |

5.9.9. Groups

Like users, groups have a simplified interface as well.

GETs (Reading Data)

| Resource | Description |

|---|---|

|

Get a list of groups. |

|

Get a specific group, given a group name. |

|

Get the users for a group, given a group name. (new in OpenNMS 14) |

|

Get the categories associated with a group, given a group name. (new in OpenNMS 14) |

POSTs (Adding Data)

| Resource | Description |

|---|---|

|

Add a new group. |

PUTs (Modifying Data)

| Resource | Description |

|---|---|

|

Update the metadata of a group (eg, change the |

|

Add a user to the group, given a group name and username. (new in OpenNMS 14) |

|

Associate a category with the group, given a group name and category name. (new in OpenNMS 14) |

DELETEs (Removing Data)

| Resource | Description |

|---|---|

|

Delete a group. |

|

Remove a user from the group. (new in OpenNMS 14) |

|

Disassociate a category from a group, given a group name and category name. (new in OpenNMS 14) |

5.9.10. Heatmap

GETs (Reading Data)

| Resource | Description |

|---|---|

|

Sizes and color codes based on outages for nodes grouped by Surveillance Categories |

|

Sizes and color codes based on outages for nodes grouped by Foreign Source |

|

Sizes and color codes based on outages for nodes grouped by monitored services |

|

Sizes and color codes based on outages for nodes associated with a specific Surveillance Category |

|

Sizes and color codes based on outages for nodes associated with a specific Foreign Source |

|

Sizes and color codes based on outages for nodes providing a specific monitored service |

| Resource | Description |

|---|---|

|

Sizes and color codes based on alarms for nodes grouped by Surveillance Categories |

|

Sizes and color codes based on alarms for nodes grouped by Foreign Source |

|

Sizes and color codes based on alarms for nodes grouped by monitored services |

|

Sizes and color codes based on alarms for nodes associated with a specific Surveillance Category |

|

Sizes and color codes based on alarms for nodes associated with a specific Foreign Source |

|

Sizes and color codes based on alarms for nodes providing a specific monitored service |

5.9.11. Monitored Services

Obtain or modify the status of a set of monitored services based on given search criteria, which can refer to nodes, IP interfaces, categories, or the monitored services themselves.

Examples:

-

/ifservices?node.label=onms-prd-01

-

/ifservices?ipInterface.ipAddress=192.168.32.140

-

/ifservices?category.name=Production

-

/ifservices?status=A

GETs (Reading Data)

| Resource | Description |

|---|---|

|

Get all configured monitored services for the given search criteria. |

Example:

Get the forced unmanaged services for the nodes that belong to the requisition named Servers:

curl -u admin:admin "http://localhost:8980/opennms/rest/ifservices?status=F&node.foreignSource=Servers"

PUTs (Modifying Data)

| Resource | Description |

|---|---|

|

Update all configured monitored services for the given search criteria. |

Example:

Mark the ICMP and HTTP services to be forced unmanaged for the nodes that belong to the category Production:

curl -u admin:admin -X PUT -d "status=F&services=ICMP,HTTP" "http://localhost:8980/opennms/rest/ifservices?category.name=Production"

5.9.12. KSC Reports

GETs (Reading Data)

| Resource | Description |

|---|---|

|

Get a list of all KSC reports, including ID and label. |

|

Get a specific KSC report, by ID. |

|

Get a count of all KSC reports. |

PUTs (Modifying Data)

| Resource | Description |

|---|---|

|

Modify a report with the given ID. |

POSTs (Creating Data)

Documentation incomplete; see issue NMS-7162.

DELETEs (Removing Data)

Documentation incomplete; see issue NMS-7162.

5.9.13. Maps

The SVG maps use ReST to populate their data. This is the interface for doing that.

GETs (Reading Data)

| Resource | Description |

|---|---|

|

Get the list of maps. |

|

Get the map with the given ID. |

|

Get the elements (nodes, links, etc.) for the map with the given ID. |

POSTs (Adding Data)

| Resource | Description |

|---|---|

|

Add a map. |

PUTs (Modifying Data)

| Resource | Description |

|---|---|

|

Update the properties of the map with the given ID. |

DELETEs (Removing Data)

| Resource | Description |

|---|---|

|

Delete the map with the given ID. |

5.9.14. Measurements API

The Measurements API can be used to retrieve collected values stored in RRD (or JRB) files and in Newts.

| Unless specified otherwise, all units of time are expressed in milliseconds. |

GETs (Reading Data)

| Resource | Description |

|---|---|

|

Retrieve the measurements for a single attribute |

The following table shows all supported query string parameters and their default values.

| name | default | comment |
|---|---|---|
| start | -14400000 | Timestamp in milliseconds. If > 0, the timestamp is relative to the UNIX epoch (January 1st 1970 00:00:00 AM). If < 0, the timestamp is relative to the |
| end | 0 | Timestamp in milliseconds. If <= 0, the effective value will be the current timestamp. |
| step | 300000 | Requested time interval between rows. Actual step may differ. |
| maxrows | 0 | When using the measurements to render a graph, this should be set to the graph’s pixel width. |
| aggregation | AVERAGE | Consolidation function used. Can typically be |
| fallback-attribute | | Secondary attribute that will be queried in the case the primary attribute does not exist. |
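As an illustration of how these parameters end up in a request, the following Python sketch assembles a GET URL for a single attribute. The path layout (`measurements/<resource ID>/<attribute>`) and the base URL are inferred from the curl usage example in this section; the helper name `measurements_url` is hypothetical.

```python
from urllib.parse import quote, urlencode

# Assumption: base URL as used by the curl examples in this section.
BASE_URL = "http://127.0.0.1:8980/opennms/rest"


def measurements_url(resource_id, attribute, params=None, base_url=BASE_URL):
    """Build a GET URL for a single attribute (hypothetical helper)."""
    # Percent-encode the brackets in resource IDs such as node[1].nodeSnmp[].
    path = f"{quote(resource_id, safe='')}/{quote(attribute, safe='')}"
    url = f"{base_url}/measurements/{path}"
    # Parameters that are left out fall back to the defaults in the table.
    return f"{url}?{urlencode(params)}" if params else url


print(measurements_url(
    "node[1].nodeSnmp[]",
    "CpuRawUser",
    {"start": -7200000, "maxrows": 30, "aggregation": "AVERAGE"},
))
```

Passing the parameters as a dictionary also allows names that contain a hyphen, such as `fallback-attribute`.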

Step sizes

The behavior of the step parameter depends on the time series strategy in use.

When using persistence strategies based on RRD, the available step sizes are limited to those defined by the RRA when the file was created. The effective step size used will be one that covers the requested period, and is closest to the requested step size. For maximum accuracy, use a step size of 1.

When using Newts, the step size can be set arbitrarily since the aggregation is performed at the time of request.

To help prevent large requests, the step size is limited to a minimum of 5 minutes, the default collection rate.

This minimum can be lowered by setting the org.opennms.newts.query.minimum_step system property.
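The two behaviors can be pictured with a small Python sketch. It is not the OpenNMS implementation: the function names are hypothetical, the lists of available step sizes are invented input, and the RRD case only models the "closest to the requested step size" rule for archives that are already assumed to cover the requested period.

```python
# Assumption: 5 minutes in milliseconds, the minimum named in the text.
DEFAULT_MINIMUM_STEP_MS = 5 * 60 * 1000


def newts_effective_step(requested_ms, minimum_ms=DEFAULT_MINIMUM_STEP_MS):
    """Raise a requested step to the configured minimum (Newts case)."""
    return max(requested_ms, minimum_ms)


def rrd_effective_step(requested_ms, available_ms):
    """Pick the available step closest to the request (RRD case).

    available_ms is assumed to list only the steps of archives that
    cover the requested period.
    """
    return min(available_ms, key=lambda step: abs(step - requested_ms))


print(newts_effective_step(10000))                     # 300000
print(newts_effective_step(10000, minimum_ms=60000))   # 60000
print(rrd_effective_step(1, [300000, 3600000]))        # 300000
print(rrd_effective_step(3000000, [300000, 3600000]))  # 3600000
```

Requesting a step of 1 in the RRD case always selects the finest available step, which matches the advice above for maximum accuracy.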

Usage examples with curl

curl -u admin:admin "http://127.0.0.1:8980/opennms/rest/measurements/node%5B1%5D.nodeSnmp%5B%5D/CpuRawUser?start=-7200000&maxrows=30&aggregation=AVERAGE"

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>

<query-response end="1425588138256" start="1425580938256" step="300000">

<columns>

<values>159.5957271523179</values>

<values>158.08531037527592</values>

<values>158.45835584842285</values>

...

</columns>

<labels>CpuRawUser</labels>

<timestamps>1425581100000</timestamps>

<timestamps>1425581400000</timestamps>

<timestamps>1425581700000</timestamps>

...

</query-response>

POSTs (Reading Data)

| Resource | Description |

|---|---|

|

Retrieve the measurements for one or more attributes, possibly spanning multiple resources, with support for JEXL expressions. |

Here we use a POST instead of a GET to retrieve the measurements, which allows us to perform complex queries which are difficult to express in a query string. These requests cannot be used to update or create new metrics.

An example of the POST body is available below.
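A request body for this endpoint can also be assembled programmatically. The Python sketch below uses only the field names and values that appear in the example body in this section; the helper names (`source`, `expression`, `build_query`) are hypothetical, and the `transient` flag is written as a string because that is how the example body spells it.

```python
import json


def _flag(value):
    """Render a boolean the way the example body does ("true"/"false")."""
    return "true" if value else "false"


def source(resource_id, attribute, aggregation="AVERAGE", transient=False):
    """One entry of the "source" list (hypothetical helper)."""
    return {
        "aggregation": aggregation,
        "attribute": attribute,
        "label": attribute,
        "resourceId": resource_id,
        "transient": _flag(transient),
    }


def expression(label, value, transient=False):
    """One entry of the "expression" list (hypothetical helper)."""
    return {"label": label, "value": value, "transient": _flag(transient)}


def build_query(start, end, step, maxrows, sources, expressions=()):
    """Assemble the complete request body as a plain dictionary."""
    return {
        "start": start,
        "end": end,
        "step": step,
        "maxrows": maxrows,
        "source": list(sources),
        "expression": list(expressions),
    }


resource = "nodeSource[Servers:1424038123222].interfaceSnmp[eth0-04013f75f101]"
body = build_query(
    1425563626316, 1425585226316, 10000, 1600,
    [source(resource, "ifHCInOctets"),
     source(resource, "ifHCOutOctets", transient=True)],
    [expression("ifHCOutOctetsNeg", "-1.0 * ifHCOutOctets")],
)
report_json = json.dumps(body, indent=2)
print(report_json)
```

The printed text can be saved to a file such as report.json and sent with `curl -d @report.json`.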

Usage examples with curl

curl -X POST -H "Accept: application/json" -H "Content-Type: application/json" -u admin:admin -d @report.json http://127.0.0.1:8980/opennms/rest/measurements

{

"start": 1425563626316,

"end": 1425585226316,

"step": 10000,

"maxrows": 1600,

"source": [

{

"aggregation": "AVERAGE",

"attribute": "ifHCInOctets",

"label": "ifHCInOctets",

"resourceId": "nodeSource[Servers:1424038123222].interfaceSnmp[eth0-04013f75f101]",

"transient": "false"

},

{

"aggregation": "AVERAGE",

"attribute": "ifHCOutOctets",

"label": "ifHCOutOctets",

"resourceId": "nodeSource[Servers:1424038123222].interfaceSnmp[eth0-04013f75f101]",

"transient": "true"

}

],

"expression": [

{

"label": "ifHCOutOctetsNeg",

"value": "-1.0 * ifHCOutOctets",

"transient": "false"

}

]

}

{

"step": 300000,

"start": 1425563626316,

"end": 1425585226316,

"timestamps": [

1425563700000,

1425564000000,

1425564300000,

...

],

"labels": [

"ifHCInOctets",

"ifHCOutOctetsNeg"

],

"columns": [

{

"values": [

139.94817275747508,

199.0062569213732,

162.6264894795127,

...

]

},

{

"values": [

-151.66179401993355,

-214.7415503875969,

-184.9012624584718,

...

]

}

]

}

More Advanced Expressions

The JEXL 2.1.x library is used to parse the expression string, which also allows Java objects and predefined functions to be included in the expression.

JEXL uses a context which is pre-populated by OpenNMS with the results of the query. Several constants and arrays are also predefined as references in the context by OpenNMS.

| Constant or prefix | Description |
|---|---|
| __inf | Double.POSITIVE_INFINITY |
| __neg_inf | Double.NEGATIVE_INFINITY |
| NaN | Double.NaN |
| __E | java.lang.Math.E |
| __PI | java.lang.Math.PI |
| __diff_time | Time span between start and end of samples |
| __i | Index into the samples array which the present calculation is referencing |
| __AttributeName (where AttributeName is the searched for attribute) | This returns the complete double[] array of samples for AttributeName |
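To make the roles of the current value, `__i`, and the `__AttributeName` array more concrete, here is a rough Python analogy of evaluating an expression once per sample. It is an illustration only, not JEXL and not the OpenNMS code: `build_context` and `evaluate` are hypothetical names, the sample values are made up, and `__diff_time` is left out.

```python
import math


def build_context(columns, i):
    """Return the names visible to an expression while sample i is evaluated."""
    context = {
        "__inf": math.inf,
        "__neg_inf": -math.inf,
        "NaN": math.nan,
        "__E": math.e,
        "__PI": math.pi,
        "__i": i,
    }
    for name, values in columns.items():
        context[name] = values[i]      # value of the current sample
        context["__" + name] = values  # complete array of samples
    return context


def evaluate(columns, expression):
    """Apply expression (a Python callable here) to every sample index."""
    length = len(next(iter(columns.values())))
    return [expression(build_context(columns, i)) for i in range(length)]


columns = {"ifHCOutOctets": [151.5, 214.75, 184.5]}

# Counterpart of the expression "-1.0 * ifHCOutOctets".
negated = evaluate(columns, lambda c: -1.0 * c["ifHCOutOctets"])

# Difference to the previous sample, using __i and the full array.
delta = evaluate(
    columns,
    lambda c: c["ifHCOutOctets"] - c["__ifHCOutOctets"][c["__i"] - 1]
    if c["__i"] > 0 else c["NaN"],
)

print(negated)  # [-151.5, -214.75, -184.5]
print(delta)    # [nan, 63.25, -30.25]
```

The second expression shows why access to the complete array is useful: it lets a calculation look at neighbouring samples rather than only the current one.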

OpenNMS predefines a number of functions for use in expressions, which are referenced as namespace:function. All of these functions return a Java double value.

Predefined functions

| Function | Description | Example |

|---|---|---|

jexl:evaluate("_formula"): |