1. Data Choices

The Data Choices module collects and publishes anonymous usage statistics to https://stats.opennms.org.

When a user with the Admin role logs into the system for the first time, they will be prompted as to whether or not they want to opt-in to publish these statistics.

Statistics will only be published once an Administrator has opted-in.

Usage statistics can later be disabled by accessing the 'Data Choices' link in the 'Admin' menu.

When enabled, the following anonymous statistics will be collected and publish on system startup and every 24 hours after:

-

System ID (a randomly generated UUID)

-

OpenNMS Horizon Release

-

OpenNMS Horizon Version

-

OS Architecture

-

OS Name

-

OS Version

-

Number of Alarms in the

alarmstable -

Number of Events in the

eventstable -

Number of IP Interfaces in the

ipinterfacetable -

Number of Nodes in the

nodetable -

Number of Nodes, grouped by System OID

-

2. User Management

Users are entities with login accounts in the OpenNMS Horizon system. Ideally each user corresponds to a person. An OpenNMS Horizon User represents an actor which may be granted permissions in the system by associating Security Roles. OpenNMS Horizon stores by default User information and credentials in a local embedded file based storage. Credentials and user details, e.g. contact information, descriptions or Security Roles can be managed through the Admin Section in the Web User Interface.

Beside local Users, external LDAP service and SSO can be configured, but are not scope in this section. The following paragraphs describe how to manage the embedded User and Security Roles in OpenNMS Horizon.

2.1. Users

Managing Users is done through the Web User Interface and requires to login as a User with administrative permissions.

By default the admin user is used to initially create and modify Users.

The User, Password and other detail descriptions are persisted in users.xml file.

It is not required to restart OpenNMS Horizon when User attributes are changed.

In case administrative tasks should be delegated to an User the Security Role named ROLE_ADMIN can be assigned.

| Don’t delete the admin and rtc user. The RTC user is used for the communication of the Real-Time Console on the start page to calculate the node and service availability. |

| Change the default admin password to a secure password. |

-

Login as a User with administrative permissions

-

Choose Configure OpenNMS from the user specific main navigation which is named as your login user name

-

Choose Configure Users, Groups and On-Call roles and select Configure Users

-

Click the Modify icon next to an existing User and select Reset Password

-

Set a new Password, Confirm Password and click OK

-

Click Finish to persist and apply the changes

-

Login with user name and old password

-

Choose Change Password from the user specific main navigation which is named as your login user name

-

Select Change Password

-

Identify yourself with the old password and set the new password and confirm

-

Click Submit

-

Logout and login with your new password

-

Login as a User with administrative permissions

-

Choose Configure OpenNMS from the user specific main navigation which is named as your login user name

-

Choose Configure Users, Groups and On-Call roles and select Configure Users

-

Use Add new user and type in a login name as User ID and a Password with confirmation or click Modify next to an existing User

-

Optional: Fill in detailed User Information to provide more context information around the new user in the system

-

Optional: Assign Security Roles to give or remove permissions in the system

-

Optional: Provide Notification Information which are used in Notification targets to send messages to the User

-

Optional: Set a schedule when a User should receive Notifications

-

Click Finish to persist and apply the changes

| By default a new User has the Security Role similar to ROLE_USER assigned. Acknowledgment and working with Alarms and Notifications is possible. The Configure OpenNMS administration menu is not available. |

-

Login as a User with administrative permissions

-

Choose Configure OpenNMS from the user specific main navigation which is named as your login user name

-

Choose Configure Users, Groups and On-Call roles and select Configure Users

-

Use the trash bin icon next to the User to delete

-

Confirm delete request with OK

2.2. Security Roles

A Security Roles is a set of permissions and can be assigned to an User. They regulate access to the Web User Interface and the ReST API to exchange monitoring and inventory information. In case of a distributed installation, the Minion or Remote Poller instances interact with OpenNMS Horizon and require specific permissions which are defined in the Security Roles ROLE_MINION and ROLE_REMOTING. The following Security Roles are available:

| Security Role Name | Description |

|---|---|

anyone |

In case the |

ROLE_ANONYMOUS |

Allows HTTP OPTIONS request to show allowed HTTP methods on a ReST resources and the login and logout page of the Web User Interface. |

ROLE_ADMIN |

Permissions to create, read, update and delete in the Web User Interface and the ReST API. |

ROLE_ASSET_EDITOR |

Permissions to just update the asset records from nodes. |

ROLE_DASHBOARD |

Allow users to just have access to the Dashboard. |

ROLE_DELEGATE |

Allows actions (such as acknowledging an alarm) to be performed on behalf of another user. |

ROLE_JMX |

Allows retrieving JMX metrics but does not allow executing MBeans of the OpenNMS Horizon JVM, even if they just return simple values. |

ROLE_MINION |

Minimal amount of permissions required for a Minion to operate. |

ROLE_MOBILE |

Allow user to use OpenNMS COMPASS mobile application to acknowledge Alarms and Notifications via the ReST API. |

ROLE_PROVISION |

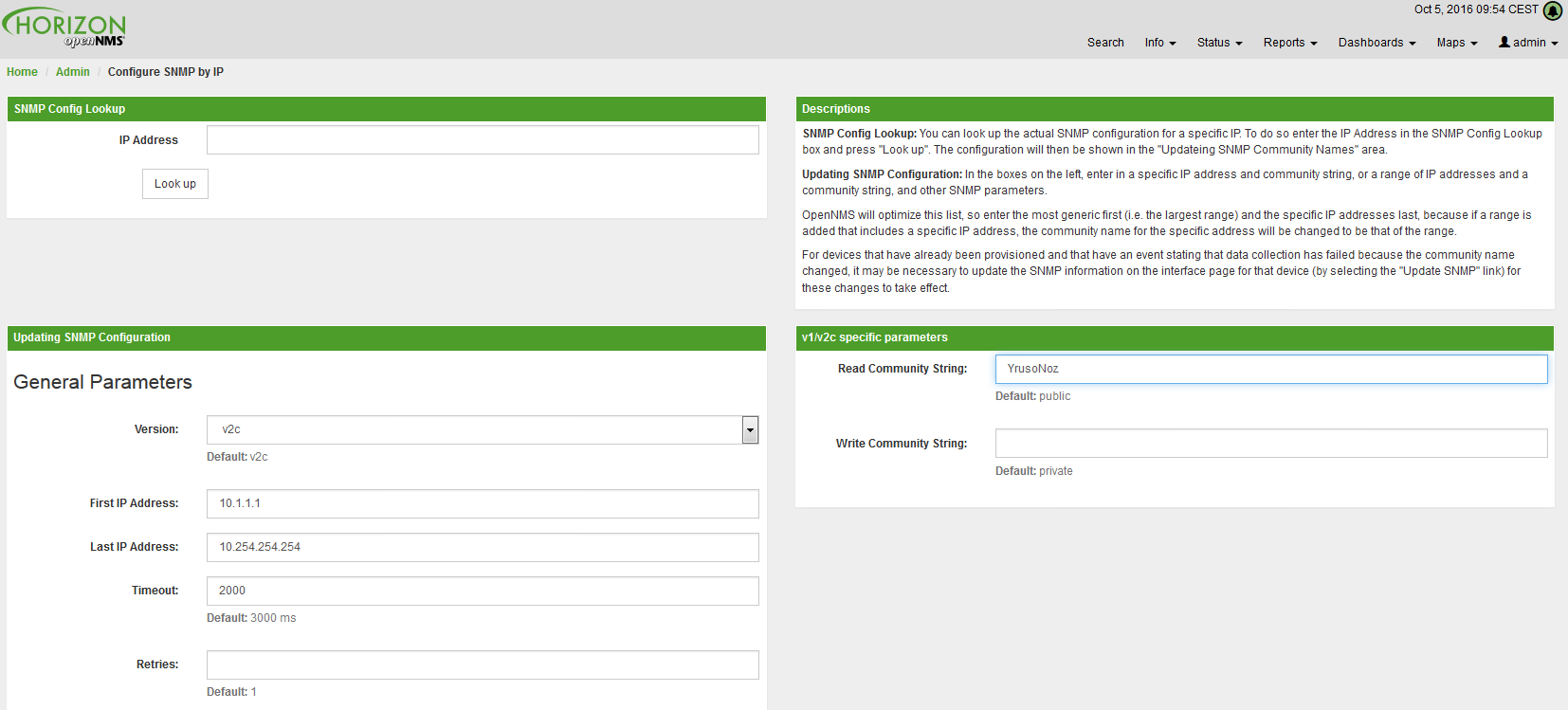

Allow user to use the Provisioning System and configure SNMP in OpenNMS Horizon to access management information from devices. |

ROLE_READONLY |

Limited to just read information in the Web User Interface and are no possibility to change Alarm states or Notifications. |

ROLE_REMOTING |

Permissions to allow access from a Remote Poller instance to exchange monitoring information. |

ROLE_REST |

Allow users interact with the whole ReST API of OpenNMS Horizon |

ROLE_RTC |

Exchange information with the OpenNMS Horizon Real-Time Console for availability calculations. |

ROLE_USER |

Default permissions of a new created user to interact with the Web User Interface which allow to escalate and acknowledge Alarms and Notifications. |

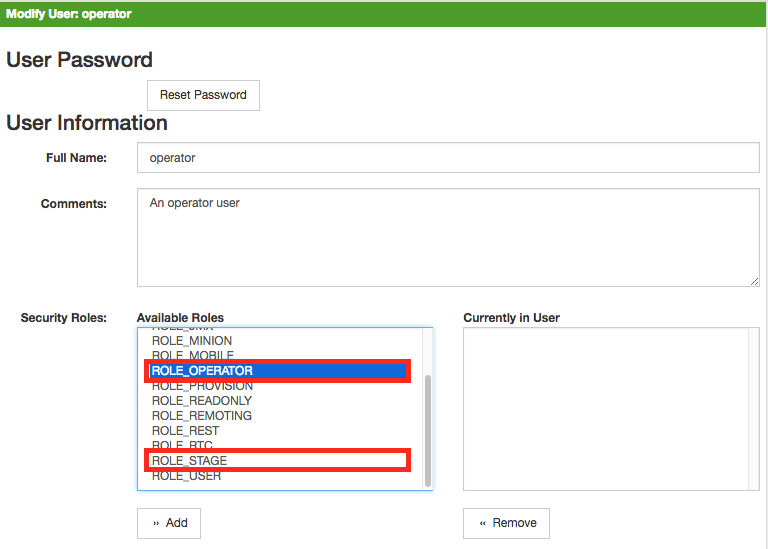

-

Login as a User with administrative permissions

-

Choose Configure OpenNMS from the user specific main navigation which is named as your login user name

-

Choose Configure Users, Groups and On-Call roles and select Configure Users

-

Modify an existing User by clicking the modify icon next to the User

-

Select the Role from Available Roles in the Security Roles section

-

Use Add and Remove to assign or remove the Security Role from the User

-

Click Finish to persist and apply the Changes

-

Logout and Login to apply the new Security Role settings

-

Create a file called

$OPENNMS_HOME/etc/security-roles.properties. -

Add a property called

roles, and for its value, a comma separated list of the custom roles, for example:

roles=operator,stage-

After following the procedure to associate the security roles with users, the new custom roles will be available as shown on the following image:

:imagesdir: ../../images

:imagesdir: ../../images

2.3. Web UI Pre-Authentication

It is possible to configure OpenNMS Horizon to run behind a proxy that provides authentication, and then pass the pre-authenticated user to the OpenNMS Horizon webapp using a header.

The pre-authentication configuration is defined in $OPENNMS_HOME/jetty-webapps/opennms/WEB-INF/spring-security.d/header-preauth.xml. This file is automatically included in the Spring Security context, but is not enabled by default.

| DO NOT configure OpenNMS Horizon in this manner unless you are certain the web UI is only accessible to the proxy and not to end-users. Otherwise, malicious attackers can craft queries that include the pre-authentication header and get full control of the web UI and ReST APIs. |

2.3.1. Enabling Pre-Authentication

Edit the header-preauth.xml file, and set the enabled property:

<beans:property name="enabled" value="true" />2.3.2. Configuring Pre-Authentication

There are a number of other properties that can be set to change the behavior of the pre-authentication plugin.

| Property | Description | Default |

|---|---|---|

|

Whether the pre-authentication plugin is active. |

|

|

If true, disallow login if the header is not set or the user does not exist. If false, fall through to other mechanisms (basic auth, form login, etc.) |

|

|

The HTTP header that will specify the user to authenticate as. |

|

|

A comma-separated list of additional credentials (roles) the user should have. |

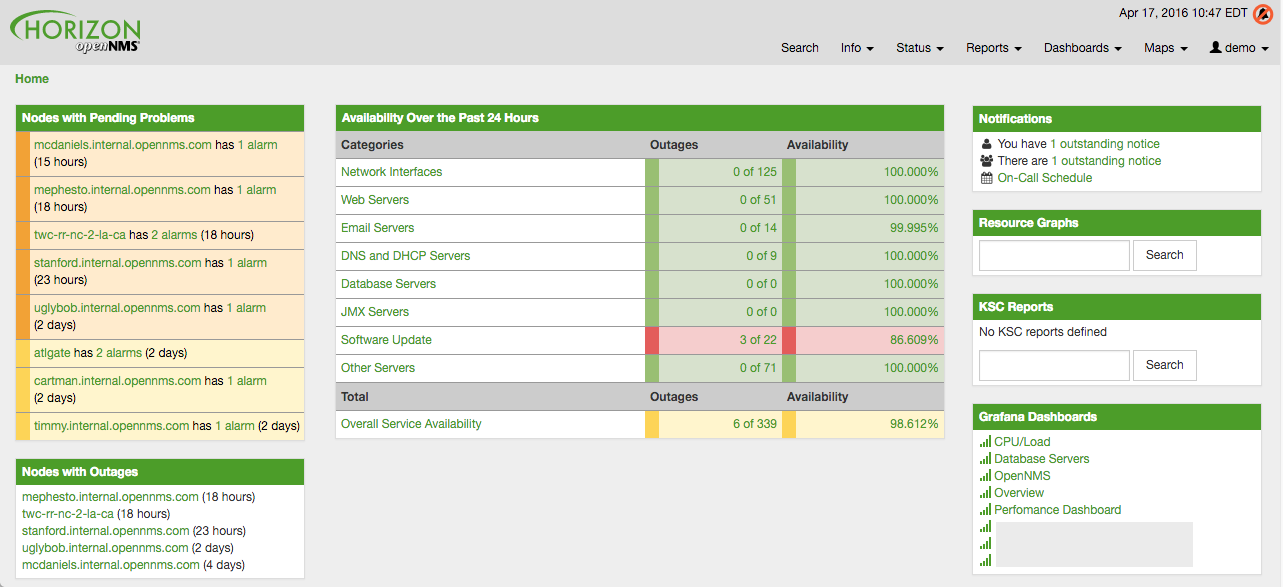

3. Administrative Webinterface

3.1. Grafana Dashboard Box

Grafana provides an API key which gives access for 3rd party application like OpenNMS Horizon. The Grafana Dashboard Box on the start page shows dashboards related to OpenNMS Horizon. To filter relevant dashboards, you can use a tag for dashboards and make them accessible. If no tag is provided all dashboards from Grafana will be shown.

The feature is by default deactivated and is configured through opennms.properties. Please note that this feature

works with the Grafana API v2.5.0.

| Name | Type | Description | Default |

|---|---|---|---|

|

Boolean |

This setting controls whether a grafana box showing the

available dashboards is placed on the landing page. The two

valid options for this are |

|

|

String |

If the box is enabled you also need to specify hostname of the Grafana server |

|

|

Integer |

The port of the Grafana server ReST API |

|

|

String |

The API key is needed for the ReST calls to work |

|

|

String |

When a tag is specified only dashboards with this given tag will be displayed. When no tag is given all dashboards will be displayed |

|

|

String |

The protocol for the ReST call can also be specified |

|

|

Integer |

Timeout in milliseconds for getting information from the Grafana server |

|

|

Integer |

Socket timeout |

|

|

Integer |

Maximum number of entries to be displayed (0 for unlimited) |

|

If you have Grafana behind a proxy it is important the org.opennms.grafanaBox.hostname is reachable.

This host name is used to generate links to the Grafana dashboards.

|

The process to generate an Grafana API Key can be found in the HTTP API documentation.

Copy the API Key to opennms.properties as org.opennms.grafanaBox.apiKey.

3.2. Operator Board

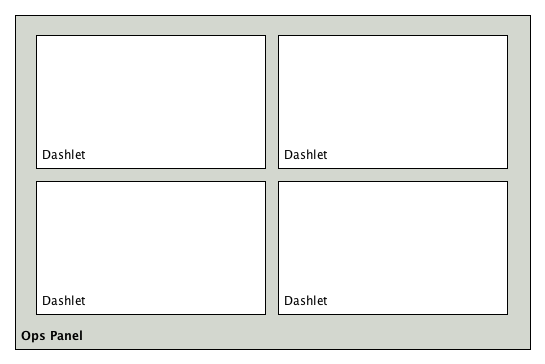

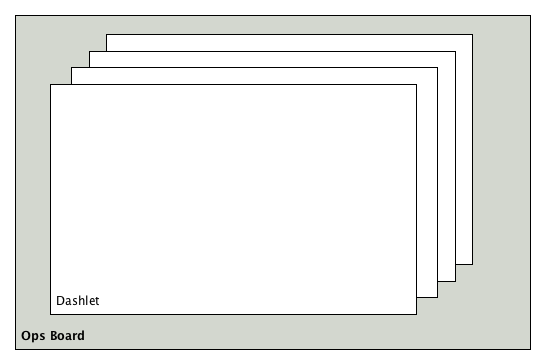

In a network operation center (NOC) the Ops Board can be used to visualize monitoring information. The monitoring information for various use-cases are arranged in configurable Dashlets. To address different user groups it is possible to create multiple Ops Boards.

There are two visualisation components to display Dashlets:

-

Ops Panel: Shows multiple Dashlets on one screen, e.g. on a NOC operators workstation

-

Ops Board: Shows one Dashlet at a time in rotation, e.g. for a screen wall in a NOC

3.2.1. Configuration

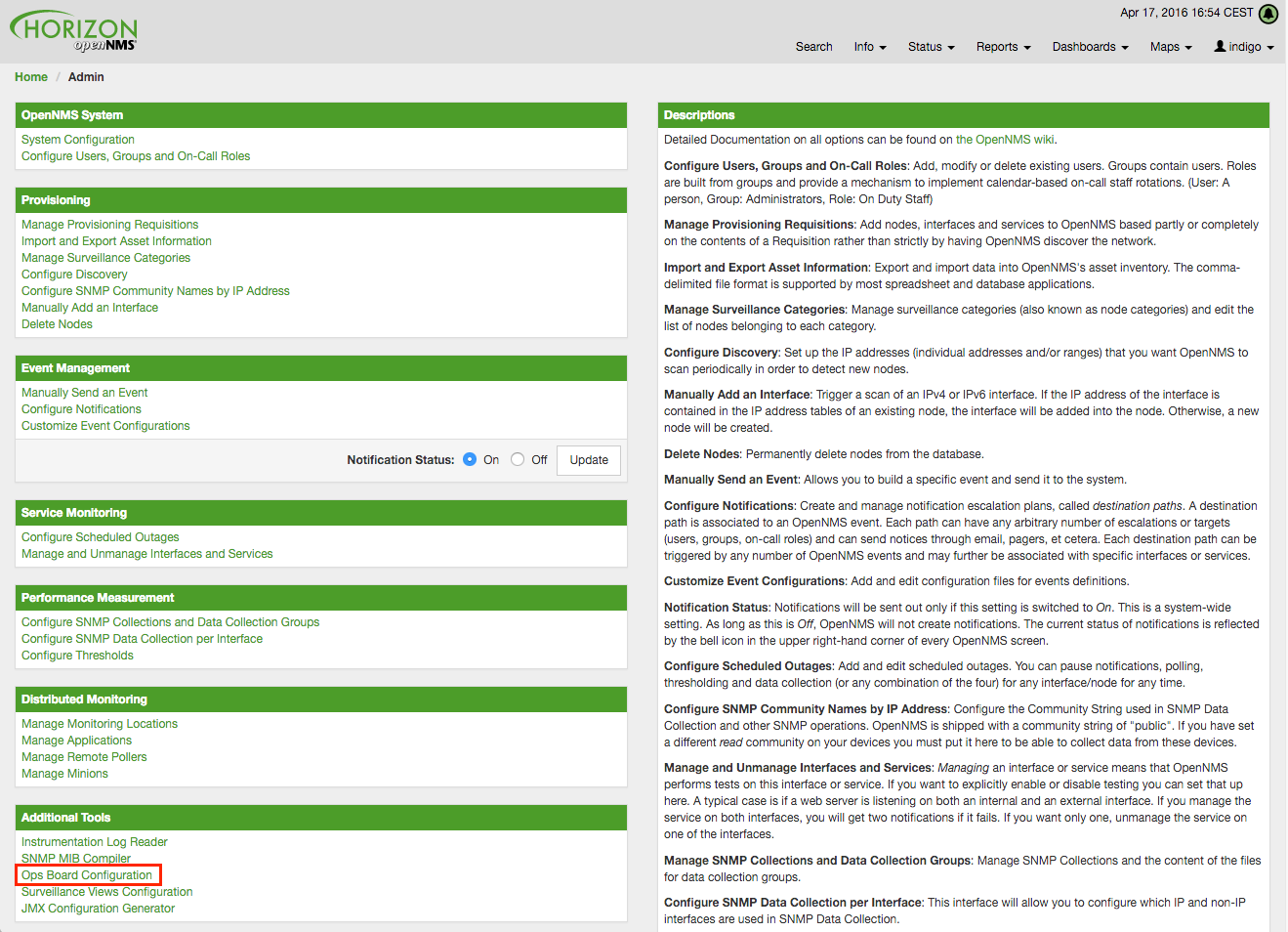

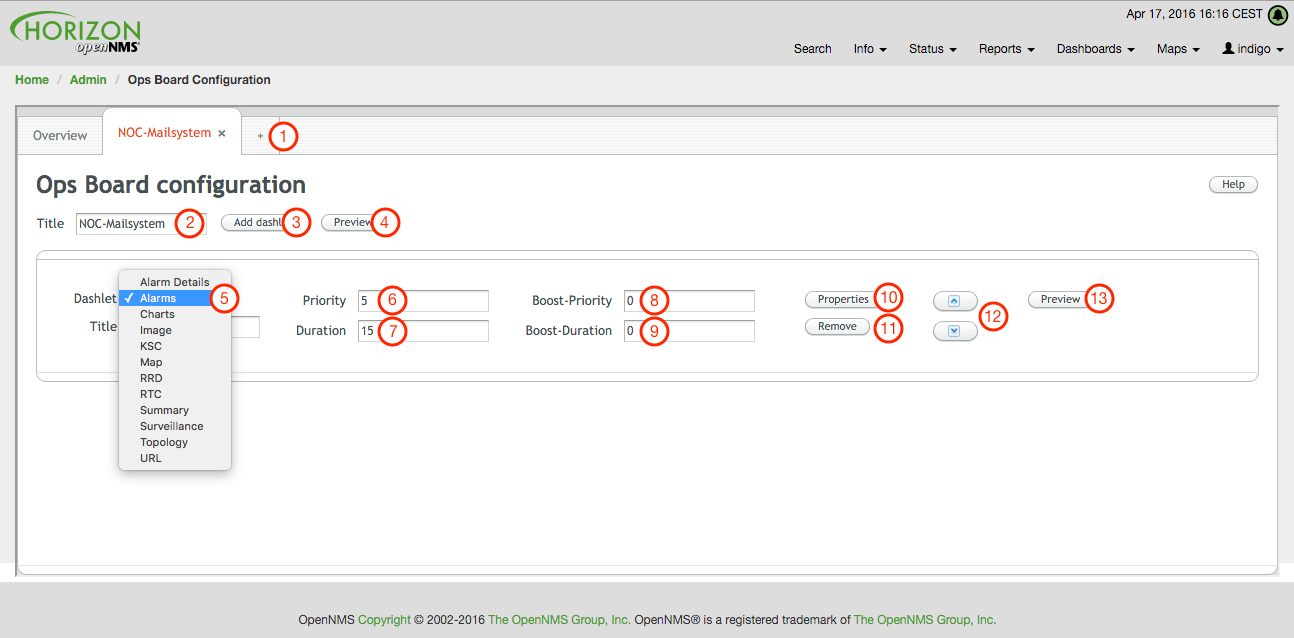

To create and configure Ops Boards administration permissions are required. The configuration section is in admin area of OpenNMS Horizon and named Ops Board Config Web Ui.

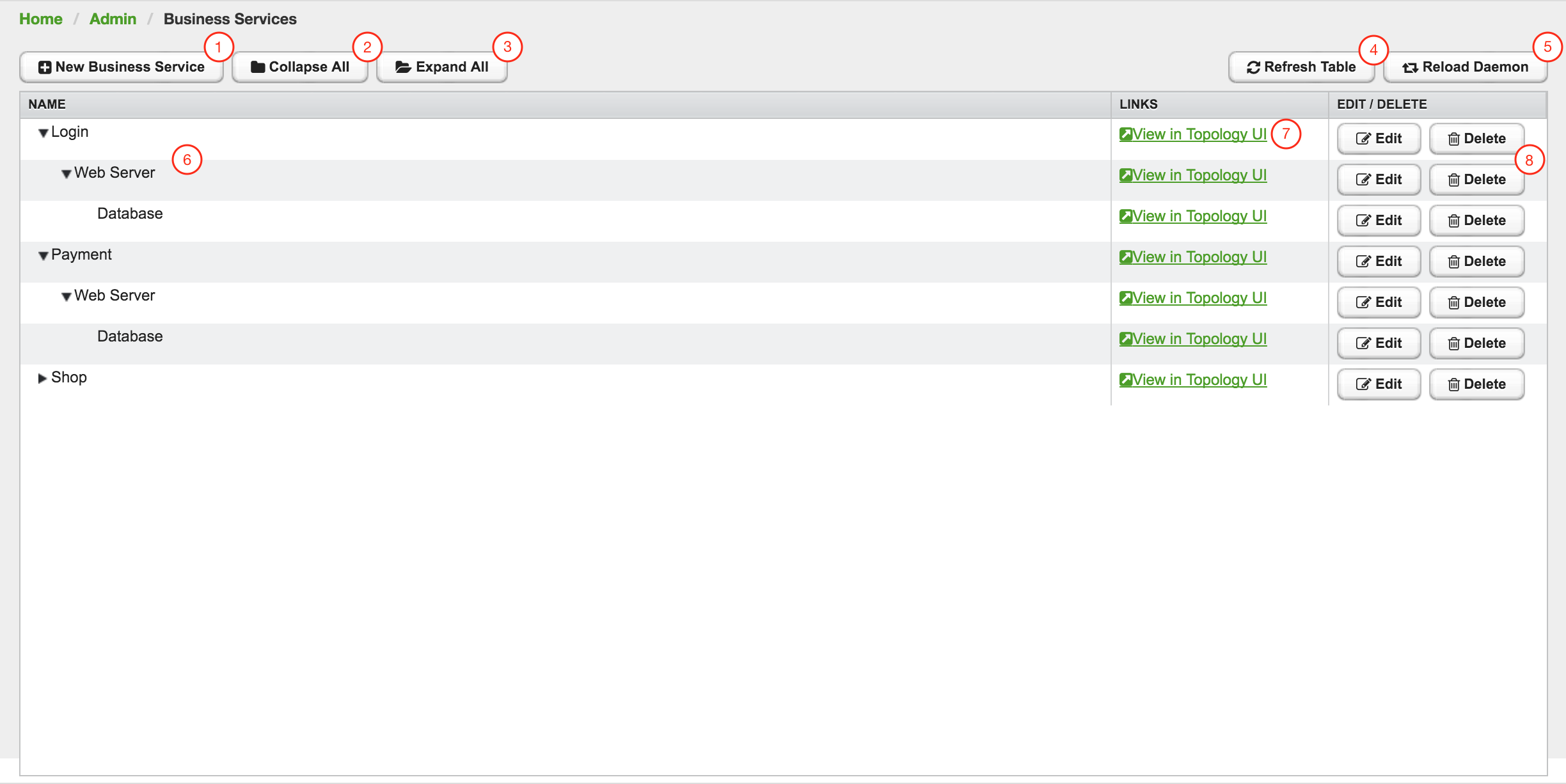

Create or modify Ops Boards is described in the following screenshot.

-

Create a new Ops Board to organize and arrange different Dashlets

-

The name to identify the Ops Board

-

Add a Dashlet to show OpenNMS Horizon monitoring information

-

Show a preview of the whole Ops Board

-

List of available Dashlets

-

Priority for this Dashlet in Ops Board rotation, lower priority means it will be displayed more often

-

Duration in seconds for this Dashlet in the Ops Board rotation

-

Change Priority if the Dashlet is in alert state, this is optional and maybe not available in all Dashlets

-

Change Duration if the Dashlet is in alert state, it is optional and maybe not available in all Dashlets

-

Configuration properties for this Dashlet

-

Remove this Dashlet from the Ops Board

-

Order Dashlets for the rotation on the Ops Board and the tile view in the Ops Panel

-

Show a preview for the whole Ops Board

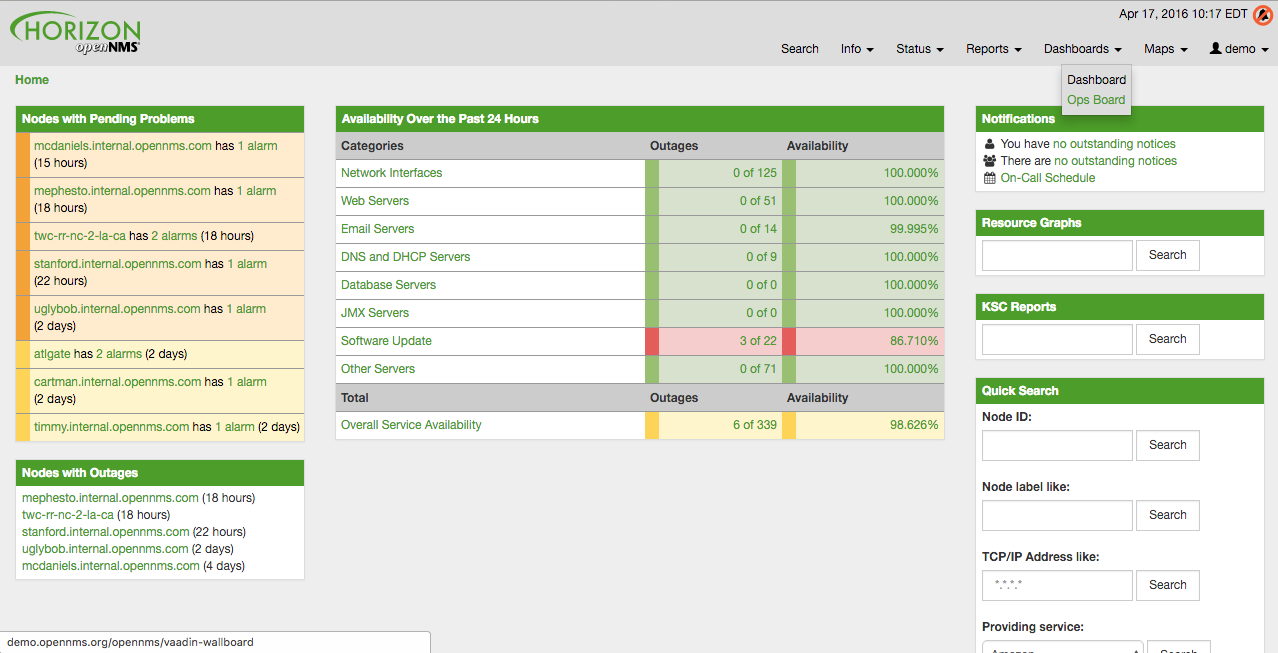

The configured Ops Board can be used by navigating in the main menu to Dashboard → Ops Board.

3.2.2. Dashlets

Visualization of information is implemented in Dashlets. The different Dashlets are described in this section with all available configuration parameter.

To allow filter information the Dashlet can be configured with a generic Criteria Builder.

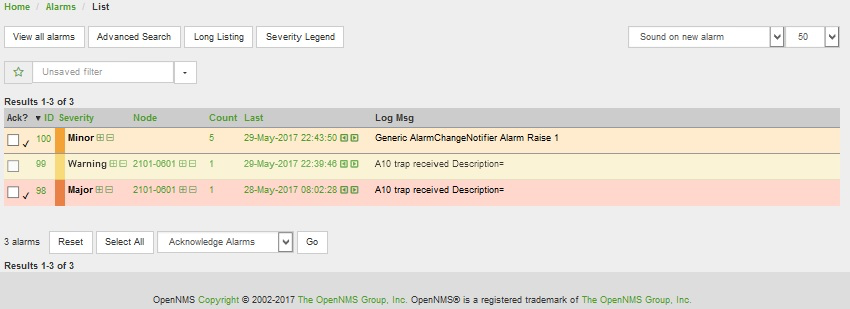

Alarm Details

This Alarm-Details Dashlet shows a table with alarms and some detailed information.

| Field | Description |

|---|---|

Alarm ID |

OpenNMS Horizon ID for the alarm |

Severity |

Alarm severity (Cleared, Indeterminate, Normal, Warning, Minor, Major, Critical) |

Node label |

Node label of the node where the alarm occurred |

Alarm count |

Alarm count based on reduction key for deduplication |

Last Event Time |

Last time the alarm occurred |

Log Message |

Reason and detailed log message of the alarm |

The Alarm Details Dashlet can be configured with the following parameters.

Boost support |

|

Configuration |

Alarms

This Alarms Dashlet shows a table with a short alarm description.

| Field | Description |

|---|---|

Time |

Absolute time since the alarm appeared |

Node label |

Node label of the node where the alarm occurred |

UEI |

OpenNMS Horizon Unique Event Identifier for this alarm |

The Alarms Dashlet can be configured with the following parameters.

Boost support |

|

Configuration |

Charts

This Dashlet displays an existing Chart.

Boost support |

false |

|

Name of the existing chart to display |

|

Rescale the image to fill display width |

|

Rescale the image to fill display height |

Grafana

This Dashlet shows a Grafana Dashboard for a given time range.

The Grafana Dashboard Box configuration defined in the opennms.properties file is used to access the Grafana instance.

Boost support |

false |

|

Title of the Grafana dashboard to be displayed |

|

URI to the Grafana Dashboard to be displayed |

|

Start of time range |

|

End of time range |

Image

This Dashlet displays an image by a given URL.

Boost support |

false |

|

URL with the location of the image to show in this Dashlet |

|

Rescale the image to fill display width |

|

Rescale the image to fill display height |

KSC

This Dashlet shows an existing KSC report. The view is exact the same as the KSC report is build regarding order, columns and time spans.

Boost support |

false |

|

Name of the KSC report to show in this Dashlet |

Map

This Dashlet displays the geographical map.

Boost support |

false |

|

Predefined search for a subset of nodes shown in the geographical map in this Dashlet |

RRD

This Dashlet shows one or multiple RRD graphs. It is possible to arrange and order the RRD graphs in multiple columns and rows. All RRD graphs are normalized with a given width and height.

Boost support |

false |

|

Number of columns within the Dashlet |

|

Number of rows with the Dashlet |

|

Import RRD graphs from an existing KSC report and re-arrange them. |

|

Generic width for all RRD graphs in this Dashlet |

|

Generic height for all RRD graphs in this Dashlet |

|

Number of the given |

|

Minute, Hour, Day, Week, Month and Year for all RRD graphs |

RTC

This Dashlet shows the configured SLA categories from the OpenNMS Horizon start page.

Boost support |

false |

|

- |

Summary

This Dashlet shows a trend of incoming alarms in given time frame.

Boost support |

|

|

Time slot in seconds to evaluate the trend for alarms by severity and UEI. |

Surveillance

This Dashlet shows a given Surveillance View.

Boost support |

false |

|

Name of the configured Surveillance View |

Topology

This Dashlet shows a Topology Map. The Topology Map can be configured with the following parameter.

Boost support |

false |

|

Which node(s) is in focus for the topology |

|

Which topology should be displayed, e.g. Linkd, VMware |

|

Set the zoom level for the topology |

URL

This Dashlet shows the content of a web page or other web application, e.g. other monitoring systems by a given URL.

Boost support |

false |

|

Optional password if a basic authentication is required |

|

URL to the web application or web page |

|

Optional username if a basic authentication is required |

3.2.3. Boosting Dashlet

The behavior to boost a Dashlet describes the behavior of a Dashlet showing critical monitoring information. It can raise the priority in the Ops Board rotation to indicate a problem. This behavior can be configured with the configuration parameter Boost Priority and Boost Duration. These to configuration parameter effect the behavior on the Ops Board in rotation.

-

Boost Priority: Absolute priority of the Dashlet with critical monitoring information.

-

Boost Duration: Absolute duration in seconds of the Dashlet with critical monitoring information.

3.2.4. Criteria Builder

The Criteria Builder is a generic component to filter information of a Dashlet. Some Dashlets use this component to filter the shown information on a Dashlet for certain use case. It is possible to combine multiple Criteria to display just a subset of information in a given Dashlet.

| Restriction | Property | Value 1 | Value 2 | Description |

|---|---|---|---|---|

|

- |

- |

- |

ascending order |

|

- |

- |

- |

descending order |

|

database attribute |

String |

String |

Subset of data between value 1 and value 2 |

|

database attribute |

String |

- |

Select all data which contains a given text string in a given database attribute |

|

database attribute |

- |

- |

Select a single instance |

|

database attribute |

String |

- |

Select data where attribute equals ( |

|

database attribute |

String |

- |

Select data where attribute is greater equals than ( |

|

database attribute |

String |

- |

Select data where attribute is greater than ( |

|

database attribute |

String |

- |

unknown |

|

database attribute |

String |

- |

unknown |

|

database attribute |

String |

- |

Select data where attribute matches an given IPLIKE expression |

|

database attribute |

- |

- |

Select data where attribute is null |

|

database attribute |

- |

- |

Select data where attribute is not null |

|

database attribute |

- |

- |

Select data where attribute is not null |

|

database attribute |

String |

- |

Select data where attribute is less equals than ( |

|

database attribute |

String |

- |

Select data where attribute is less than ( |

|

database attribute |

String |

- |

Select data where attribute is less equals than ( |

|

database attribute |

String |

- |

Select data where attribute is like a given text value similar to SQL |

|

- |

Integer |

- |

Limit the result set by a given number |

|

database attribute |

String |

- |

Select data where attribute is not equals ( |

|

database attribute |

String |

- |

unknown difference between |

|

database attribute |

- |

- |

Order the result set by a given attribute |

3.3. JMX Configuration Generator

OpenNMS Horizon implements the JMX protocol to collect long term performance data for Java applications. There are a huge variety of metrics available and administrators have to select which information should be collected. The JMX Configuration Generator Tools is build to help generating valid complex JMX data collection configuration and RRD graph definitions for OpenNMS Horizon.

This tool is available as CLI and a web based version.

3.3.1. Web based utility

Complex JMX data collection configurations can be generated from a web based tool. It collects all available MBean Attributes or Composite Data Attributes from a JMX enabled Java application.

The workflow of the tool is:

-

Connect with JMX or JMXMP against a MBean Server provided of a Java application

-

Retrieve all MBean and Composite Data from the application

-

Select specific MBeans and Composite Data objects which should be collected by OpenNMS Horizon

-

Generate JMX Collectd configuration file and RRD graph definitions for OpenNMS Horizon as downloadable archive

The following connection settings are supported:

-

Ability to connect to MBean Server with RMI based JMX

-

Authentication credentials for JMX connection

-

Optional: JMXMP connection

The web based configuration tool can be used in the OpenNMS Horizon Web Application in administration section Admin → JMX Configuration Generator.

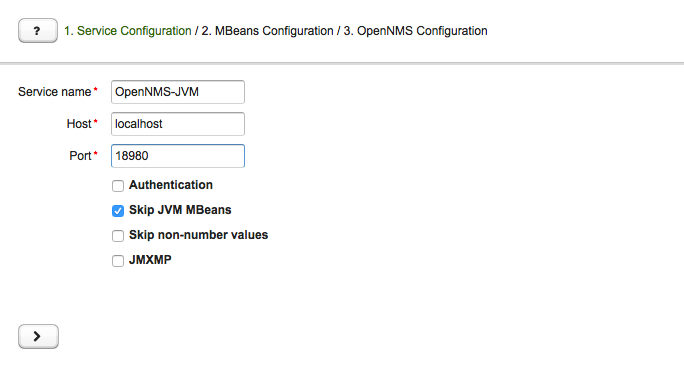

Configure JMX Connection

At the beginning the connection to an MBean Server of a Java application has to be configured.

-

Service name: The name of the service to bind the JMX data collection for Collectd

-

Host: IP address or FQDN connecting to the MBean Server to load MBeans and Composite Data into the generation tool

-

Port: Port to connect to the MBean Server

-

Authentication: Enable / Disable authentication for JMX connection with username and password

-

Skip non-number values: Skip attributes with non-number values

-

JMXMP: Enable / Disable JMX Messaging Protocol instead of using JMX over RMI

By clicking the arrow ( > ) the MBeans and Composite Data will be retrieved with the given connection settings. The data is loaded into the MBeans Configuration screen which allows to select metrics for the data collection configuration.

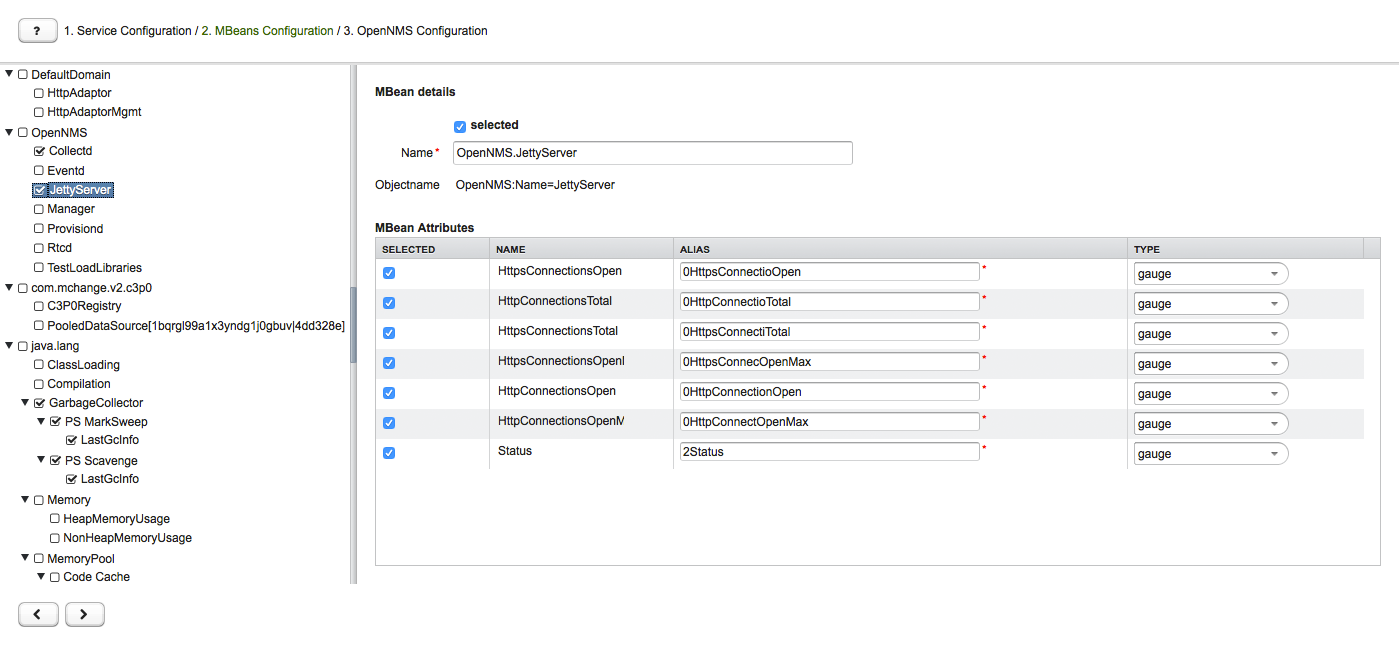

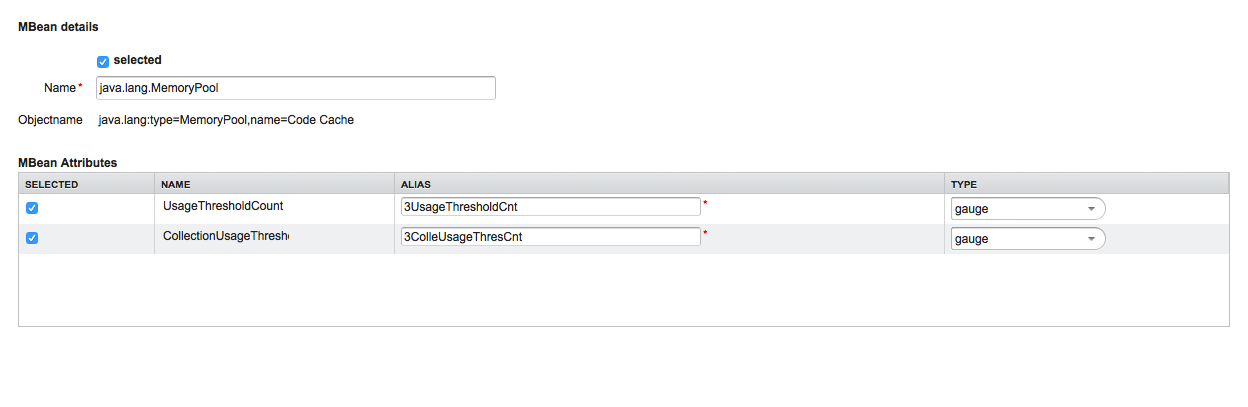

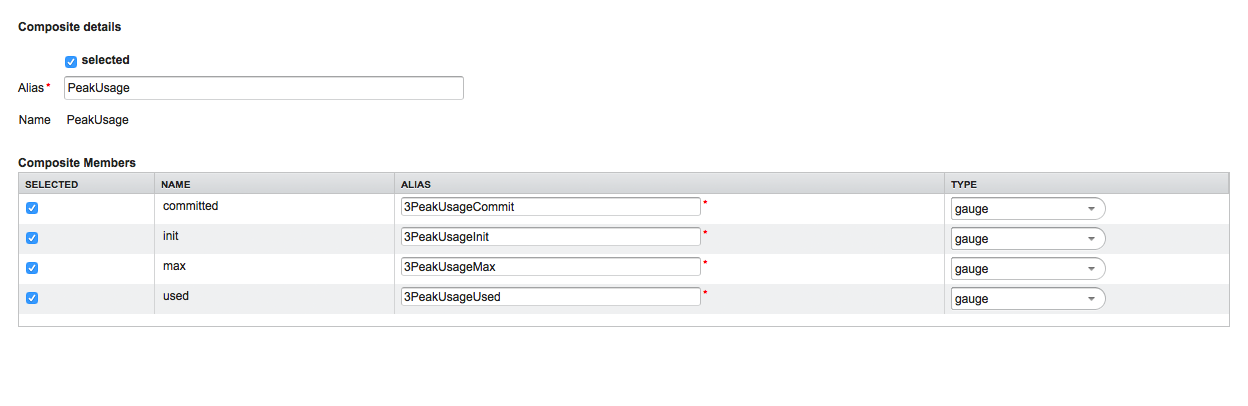

Select MBeans and Composite

The MBeans Configuration section is used to assign the MBean and Composite Data attributes to RRD domain specific data types and data source names.

The left sidebar shows the tree with the JMX Domain, MBeans and Composite Data hierarchy retrieved from the MBean Server. To select or deselect all attributes use Mouse right click → select/deselect.

The right panel shows the MBean Attributes with the RRD specific mapping and allows to select or deselect specific MBean Attriubtes or Composite Data Attributes for the data collection configuration.

-

MBean Name or Composite Alias: Identifies the MBean or the Composite Data object

-

Selected: Enable/Disable the MBean attribute or Composite Member to be included in the data collection configuration

-

Name: Name of the MBean attribute or Composite Member

-

Alias: the data source name for persisting measurements in RRD or JRobin file

-

Type: Gauge or Counter data type for persisting measurements in RRD or JRobin file

The MBean Name, Composite Alias and Name are validated against special characters. For the Alias inputs are validated to be not longer then 19 characters and have to be unique in the data collection configuration.

Download and include configuration

The last step is generating the following configuration files for OpenNMS Horizon:

-

collectd-configuration.xml: Generated sample configuration assigned to a service with a matching data collection group

-

jmx-datacollection-config.xml: Generated JMX data collection configuration with the selected MBeans and Composite Data

-

snmp-graph.properties: Generated default RRD graph definition files for all selected metrics

The content of the configuration files can be copy & pasted or can be downloaded as ZIP archive.

| If the content of the configuration file exceeds 2,500 lines, the files can only be downloaded as ZIP archive. |

3.3.2. CLI based utility

The command line (CLI) based tool is not installed by default. It is available as Debian and RPM package in the official repositories.

Installation

yum install opennms-jmx-config-generatorapt-get install opennms-jmx-config-generatorIt is required to have the Java 8 Development Kit with Apache Maven installed.

The mvn binary has to be in the path environment.

After cloning the repository you have to enter the source folder and compile an executable JAR.

cd opennms/features/jmx-config-generator

mvn packageInside the newly created target folder a file named jmxconfiggenerator-<VERSION>-onejar.jar is present.

This file can be invoked by:

java -jar target/jmxconfiggenerator-21.0.5-onejar.jarUsage

After installing the the JMX Config Generator the tool’s wrapper script is located in the ${OPENNMS_HOME}/bin directory.

$ cd /path/to/opennms/bin

$ ./jmx-config-generator| When invoked without parameters the usage and help information is printed. |

The JMX Config Generator uses sub-commands for the different configuration generation tasks. Each of these sub-commands provide different options and parameters. The command line tool accepts the following sub-commands.

| Sub-command | Description |

|---|---|

|

Queries a MBean Server for certain MBeans and attributes. |

|

Generates a valid |

|

Generates a RRD graph definition file with matching graph definitions for a given |

The following global options are available in each of the sub-commands of the tool:

| Option/Argument | Description | Default |

|---|---|---|

|

Show help and usage information. |

false |

|

Enables verbose mode for debugging purposes. |

false |

Sub-command: query

This sub-command is used to query a MBean Server for it’s available MBean objects.

The following example queries the server myserver with the credentials myusername/mypassword on port 7199 for MBean objects in the java.lang domain.

./jmx-config-generator query --host myserver --username myusername --password mypassword --port 7199 "java.lang:*"

java.lang:type=ClassLoading

description: Information on the management interface of the MBean

class name: sun.management.ClassLoadingImpl

attributes: (5/5)

TotalLoadedClassCount

id: java.lang:type=ClassLoading:TotalLoadedClassCount

description: TotalLoadedClassCount

type: long

isReadable: true

isWritable: false

isIs: false

LoadedClassCount

id: java.lang:type=ClassLoading:LoadedClassCount

description: LoadedClassCount

type: int

isReadable: true

isWritable: false

isIs: false

<output omitted>The following command line options are available for the query sub-command.

| Option/Argument | Description | Default |

|---|---|---|

|

A filter criteria to query the MBean Server for.

The format is |

- |

|

Hostname or IP address of the remote JMX host. |

- |

|

Only show the ids of the attributes. |

false |

|

Set |

- |

|

Include attribute values. |

false |

|

Use JMXMP and not JMX over RMI. |

false |

|

Password for JMX authentication. |

- |

|

Port of JMX service. |

- |

|

Only lists the available domains. |

true |

|

Includes MBeans, even if they do not have attributes.

Either due to the |

false |

|

Custom connection URL |

- |

|

Username for JMX authentication. |

- |

|

Show help and usage information. |

false |

|

Enables verbose mode for debugging purposes. |

false |

Sub-command: generate-conf

This sub-command can be used to generate a valid jmx-datacollection-config.xml for a given set of MBean objects queried from a MBean Server.

The following example generate a configuration file myconfig.xml for MBean objects in the java.lang domain of the server myserver on port 7199 with the credentials myusername/mypassword.

You have to define either an URL or a hostname and port to connect to a JMX server.

jmx-config-generator generate-conf --host myserver --username myusername --password mypassword --port 7199 "java.lang:*" --output myconfig.xml

Dictionary entries loaded: '18'The following options are available for the generate-conf sub-command.

| Option/Argument | Description | Default |

|---|---|---|

|

A list of attribute Ids to be included for the generation of the configuration file. |

- |

|

Path to a dictionary file for replacing attribute names and part of MBean attributes. The file should have for each line a replacement, e.g. Auxillary:Auxil. |

- |

|

Hostname or IP address of JMX host. |

- |

|

Use JMXMP and not JMX over RMI. |

false |

|

Output filename to write generated |

- |

|

Password for JMX authentication. |

- |

|

Port of JMX service |

- |

|

Prints the used dictionary to STDOUT.

May be used with |

false |

|

The Service Name used as JMX data collection name. |

anyservice |

|

Skip default JavaVM Beans. |

false |

|

Skip attributes with non-number values |

false |

|

Custom connection URL |

- |

|

Username for JMX authentication |

- |

|

Show help and usage information. |

false |

|

Enables verbose mode for debugging purposes. |

false |

The option --skipDefaultVM offers the ability to ignore the MBeans provided as standard by the JVM and just create configurations for the MBeans provided by the Java Application itself.

This is particularly useful if an optimized configuration for the JVM already exists.

If the --skipDefaultVM option is not set the generated configuration will include the MBeans of the JVM and the MBeans of the Java Application.

|

Check the file and see if there are alias names with more than 19 characters.

This errors are marked with NAME_CRASH_AS_19_CHAR_VALUE

|

Sub-command: generate-graph

This sub-command generates a RRD graph definition file for a given configuration file.

The following example generates a graph definition file mygraph.properties using the configuration in file myconfig.xml.

./jmx-config-generator generate-graph --input myconfig.xml --output mygraph.properties

reports=java.lang.ClassLoading.MBeanReport, \

java.lang.ClassLoading.0TotalLoadeClassCnt.AttributeReport, \

java.lang.ClassLoading.0LoadedClassCnt.AttributeReport, \

java.lang.ClassLoading.0UnloadedClassCnt.AttributeReport, \

java.lang.Compilation.MBeanReport, \

<output omitted>The following options are available for this sub-command.

| Option/Argument | Description | Default |

|---|---|---|

|

Configuration file to use as input to generate the graph properties file |

- |

|

Output filename for the generated graph properties file. |

- |

|

Prints the default template. |

false |

|

Template file using Apache Velocity template engine to be used to generate the graph properties. |

- |

|

Show help and usage information. |

false |

|

Enables verbose mode for debugging purposes. |

false |

Graph Templates

The JMX Config Generator uses a template file to generate the graphs.

It is possible to use a user-defined template.

The option --template followed by a file lets the JMX Config Generator use the external template file as base for the graph generation.

The following example illustrates how a custom template mytemplate.vm is used to generate the graph definition file mygraph.properties using the configuration in file myconfig.xml.

./jmx-config-generator generate-graph --input myconfig.xml --output mygraph.properties --template mytemplate.vmThe template file has to be an Apache Velocity template. The following sample represents the template that is used by default:

reports=#foreach( $report in $reportsList )

${report.id}#if( $foreach.hasNext ), \

#end

#end

#foreach( $report in $reportsBody )

#[[###########################################]]#

#[[##]]# $report.id

#[[###########################################]]#

report.${report.id}.name=${report.name}

report.${report.id}.columns=${report.graphResources}

report.${report.id}.type=interfaceSnmp

report.${report.id}.command=--title="${report.title}" \

--vertical-label="${report.verticalLabel}" \

#foreach($graph in $report.graphs )

DEF:${graph.id}={rrd${foreach.count}}:${graph.resourceName}:AVERAGE \

AREA:${graph.id}#${graph.coloreB} \

LINE2:${graph.id}#${graph.coloreA}:"${graph.description}" \

GPRINT:${graph.id}:AVERAGE:" Avg \\: %8.2lf %s" \

GPRINT:${graph.id}:MIN:" Min \\: %8.2lf %s" \

GPRINT:${graph.id}:MAX:" Max \\: %8.2lf %s\\n" \

#end

#endThe JMX Config Generator generates different types of graphs from the jmx-datacollection-config.xml.

The different types are listed below:

| Type | Description |

|---|---|

AttributeReport |

For each attribute of any MBean a graph will be generated. Composite attributes will be ignored. |

MbeanReport |

For each MBean a combined graph with all attributes of the MBeans is generated. Composite attributes will be ignored. |

CompositeReport |

For each composite attribute of every MBean a graph is generated. |

CompositeAttributeReport |

For each composite member of every MBean a combined graph with all composite attributes is generated. |

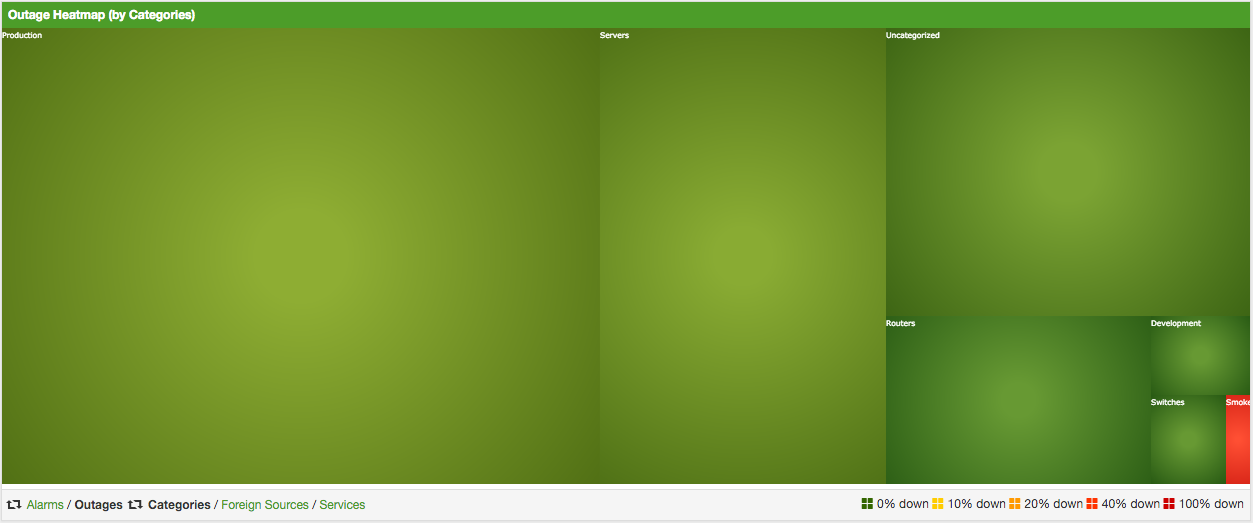

3.4. Heatmap

The Heatmap can be either be used to display unacknowledged alarms or to display ongoing outages of nodes. Each of this visualizations can be applied on categories, foreign sources or services of nodes. The sizing of an entity is calculated by counting the services inside the entity. Thus, a node with fewer services will appear in a smaller box than a node with more services.

The feature is by default deactivated and is configured through opennms.properties.

| Name | Type | Description | Default |

|---|---|---|---|

|

String |

There exist two options for using the heatmap: |

|

|

String |

This option defines which Heatmap is displayed by default.

Valid options are |

|

|

String |

The following option is used to filter for categories to be

displayed in the Heatmap. This option uses the Java regular

expression syntax. The default is |

|

|

String |

The following option is used to filter for foreign sources

to be displayed in the Heatmap. This option uses the Java

regular expression syntax. The default is |

|

|

String |

The following option is used to filter for services to be

displayed in the Heatmap. This option uses the Java regular

expression syntax. The default is |

|

|

Boolean |

This option configures whether only unacknowledged alarms will be taken into account when generating the alarm-based version of the Heatmap. |

|

|

String |

You can also place the Heatmap on the landing page by

setting this option to |

|

You can use negative lookahead expressions for excluding categories you wish not to be displayed in the heatmap,

e.g. by using an expression like ^(?!XY).* you can filter out entities with names starting with XY.

|

3.5. Trend

The Trend feature allows to display small inline charts of database-based statistics.

These chart are accessible in the Status menu of the OpenNMS' web application.

Furthermore it is also possible to configure these charts to be displayed on the OpenNMS' landing page.

To achieve this alter the org.opennms.web.console.centerUrl property to also include the entry /trend/trend-box.htm.

These charts can be configured and defined in the trend-configuration.xml file in your OpenNMS' etc directory.

The following sample defines a Trend chart for displaying nodes with ongoing outages.

<trend-definition name="nodes">

<title>Nodes</title> (1)

<subtitle>w/ Outages</subtitle> (2)

<visible>true</visible> (3)

<icon>glyphicon-fire</icon> (4)

<trend-attributes> (5)

<trend-attribute key="sparkWidth" value="100%"/>

<trend-attribute key="sparkHeight" value="35"/>

<trend-attribute key="sparkChartRangeMin" value="0"/>

<trend-attribute key="sparkLineColor" value="white"/>

<trend-attribute key="sparkLineWidth" value="1.5"/>

<trend-attribute key="sparkFillColor" value="#88BB55"/>

<trend-attribute key="sparkSpotColor" value="white"/>

<trend-attribute key="sparkMinSpotColor" value="white"/>

<trend-attribute key="sparkMaxSpotColor" value="white"/>

<trend-attribute key="sparkSpotRadius" value="3"/>

<trend-attribute key="sparkHighlightSpotColor" value="white"/>

<trend-attribute key="sparkHighlightLineColor" value="white"/>

</trend-attributes>

<descriptionLink>outage/list.htm?outtype=current</descriptionLink> (6)

<description>${intValue[23]} NODES WITH OUTAGE(S)</description> (7)

<query> (8)

<![CDATA[

select (

select

count(distinct nodeid)

from

outages o, events e

where

e.eventid = o.svclosteventid

and iflostservice < E

and (ifregainedservice is null

or ifregainedservice > E)

) from (

select

now() - interval '1 hour' * (O + 1) AS S,

now() - interval '1 hour' * O as E

from

generate_series(0, 23) as O

) I order by S;

]]>

</query>

</trend-definition>| 1 | title of the Trend chart, see below for supported variable substitutions |

| 2 | subtitle of the Trend chart, see below for supported variable substitutions |

| 3 | defines whether the chart is visible by default |

| 4 | icon for the chart, see Glyphicons for viable options |

| 5 | options for inline chart, see jQuery Sparklines for viable options |

| 6 | the description link |

| 7 | the description text, see below for supported variable substitutions |

| 8 | the SQL statement for querying the chart’s values |

| Don’t forget to limit the SQL query’s return values! |

It is possible to use values or aggregated values in the title, subtitle and description fields. The following table describes the available variable substitutions.

| Name | Type | Description |

|---|---|---|

|

Integer |

integer maximum value |

|

Double |

maximum value |

|

Integer |

integer minimum value |

|

Double |

minimum value |

|

Integer |

integer average value |

|

Double |

average value |

|

Integer |

integer sum of values |

|

Double |

sum of value |

|

Integer |

array of integer result values for the given SQL query |

|

Double |

array of result values for the given SQL query |

|

Integer |

array of integer value changes for the given SQL query |

|

Double |

array of value changes for the given SQL query |

|

Integer |

last integer value |

|

Double |

last value |

|

Integer |

last integer value change |

|

Double |

last value change |

You can also display a single graph in your JSP files by including the file /trend/single-trend-box.jsp and specifying the name parameter.

<jsp:include page="/trend/single-trend-box.jsp" flush="false">

<jsp:param name="name" value="example"/>

</jsp:include>4. Service Assurance

In OpenNMS Horizon the daemon to measures service availability and latency is done by Pollerd. To run these tests Service Monitors are scheduled and run in parallel in a Thread Pool. The behavior of Pollerd uses the following files for configuration and logging. Functionalities and general concepts are described in the User Documentation of OpenNMS. This section describes how to configure Pollerd for service assurance with all available Service Monitors coming with OpenNMS.

4.1. Pollerd Configuration

| File | Description |

|---|---|

|

Configuration file for monitors and global daemon configuration |

|

Log file for all monitors and the global Pollerd |

|

RRD graph definitions for service response time measurements |

|

Event definitions for Pollerd, i.e. nodeLostService, interfaceDown or nodeDown |

To change the behavior for service monitoring, the poller-configuration.xml can be modified.

The configuration file is structured in the following parts:

-

Global daemon config: Define the size of the used Thread Pool to run Service Monitors in parallel. Define and configure the Critical Service for Node Event Correlation.

-

Polling packages: Package to allow grouping of configuration parameters for Service Monitors.

-

Downtime Model: Configure the behavior of Pollerd to run tests in case of an Outage is detected.

-

Monitor service association: Based on the name of the service, the implementation for application or network management protocols are assigned.

<poller-configuration threads="30" (1)

pathOutageEnabled="false" (2)

serviceUnresponsiveEnabled="false"> (3)| 1 | Size of the Thread Pool to run Service Monitors in parallel |

| 2 | Enable or Disable Path Outage functionality based on a Critical Node in a network path |

| 3 | In case of unresponsive service services a serviceUnresponsive event is generated and not an outage. It prevents to apply the Downtime Model to retest the service after 30 seconds and prevents false alarms. |

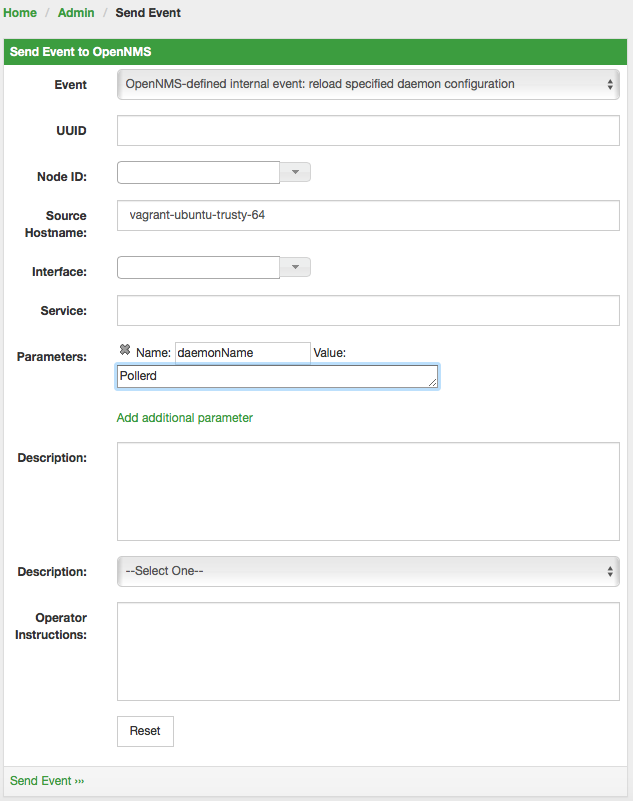

Configuration changes are applied by restarting OpenNMS and Pollerd. It is also possible to send an Event to Pollerd reloading the configuration. An Event can be sent on the CLI or the Web User Interface.

cd $OPENNMS_HOME/bin

./send-event.pl uei.opennms.org/internal/reloadDaemonConfig --parm 'daemonName Pollerd'

If you define new services in poller-configuration.xml a service restart of OpenNMS is necessary.

|

4.2. Critical Service

The Critical Service is used to correlate outages from Services to a nodeDown or interfaceDown event.

It is a global configuration of Pollerd defined in poller-configuration.xml.

The OpenNMS default configuration enables this behavior.

<poller-configuration threads="30"

pathOutageEnabled="false"

serviceUnresponsiveEnabled="false">

<node-outage status="on" (1)

pollAllIfNoCriticalServiceDefined="true"> (2)

<critical-service name="ICMP" /> (3)

</node-outage>| 1 | Enable Node Outage correlation based on a Critical Service |

| 2 | Optional: In case of nodes without a Critical Service this option controls the behavior.

If set to true then all services will be polled.

If set to false then the first service in the package that exists on the node will be polled until service is restored, and then polling will resume for all services. |

| 3 | Define Critical Service for Node Outage correlation |

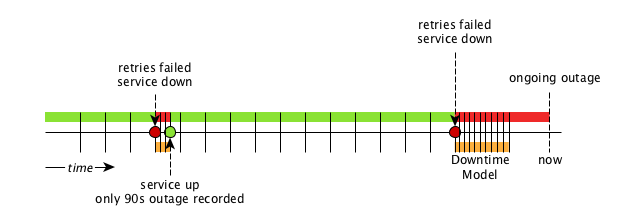

4.3. Downtime Model

By default the monitoring interval for a service is 5 minutes. To detect also short services outages, caused for example by automatic network rerouting, the downtime model can be used. On a detected service outage, the interval is reduced to 30 seconds for 5 minutes. If the service comes back within 5 minutes, a shorter outage is documented and the impact on service availability can be less than 5 minutes. This behavior is called Downtime Model and is configurable.

In figure Outages and Downtime Model there are two outages. The first outage shows a short outage which was detected as up after 90 seconds. The second outage is not resolved now and the monitor has not detected an available service and was not available in the first 5 minutes (10 times 30 second polling). The scheduler changed the polling interval back to 5 minutes.

<downtime interval="30000" begin="0" end="300000" />(1)

<downtime interval="300000" begin="300000" end="43200000" />(2)

<downtime interval="600000" begin="43200000" end="432000000" />(3)

<downtime begin="432000000" delete="true" />(4)| 1 | from 0 seconds after an outage is detected until 5 minutes the polling interval will be set to 30 seconds |

| 2 | after 5 minutes of an ongoing outage until 12 hours the polling interval will be set to 5 minutes |

| 3 | after 12 hours of an ongoing outage until 5 days the polling interval will be set to 10 minutes |

| 4 | after 5 days of an ongoing outage the service will be deleted from the monitoring system |

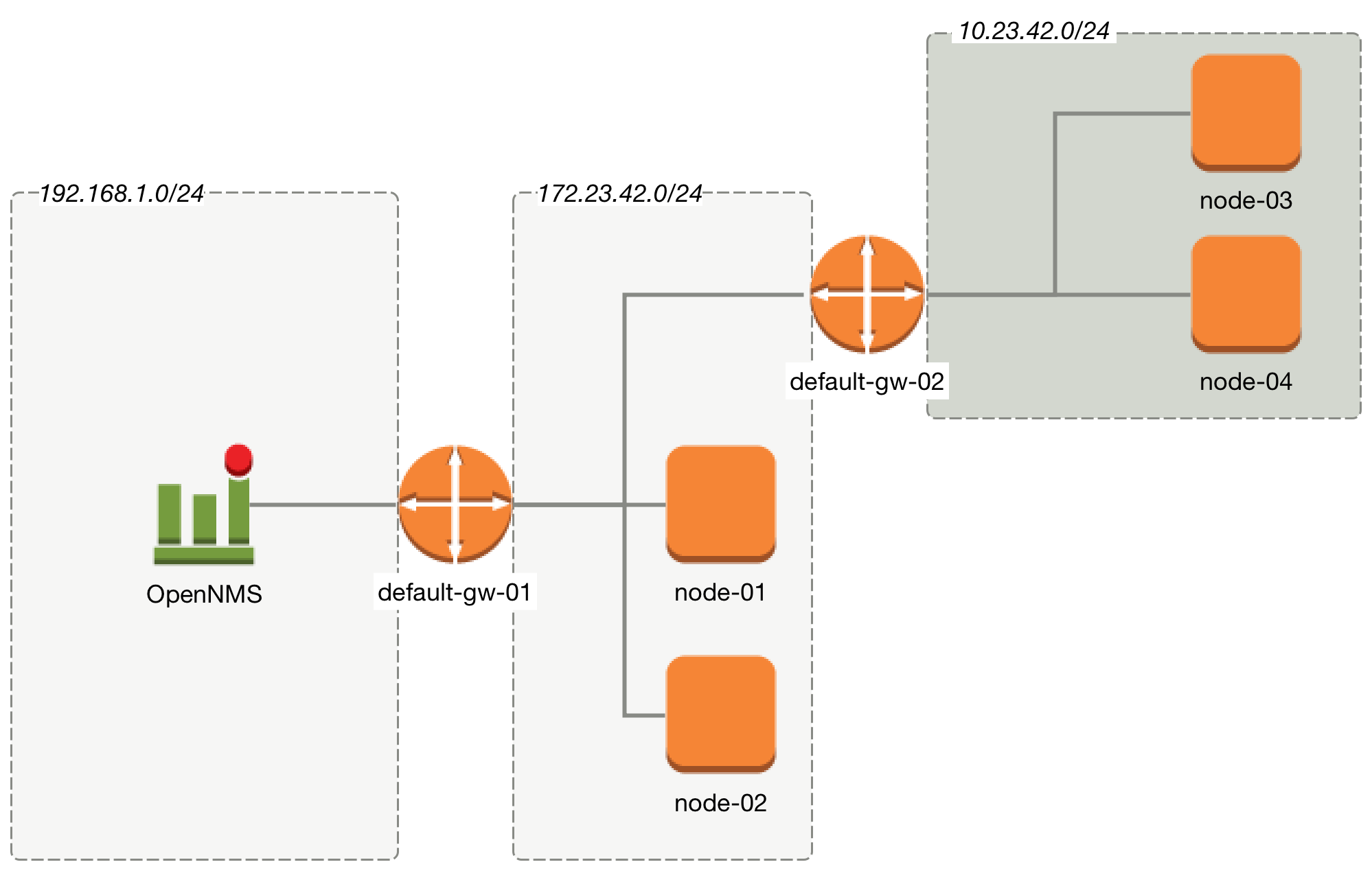

4.4. Path Outages

To reduce the amount of alarms and notifications a Path Outage can be configured. This functionality is used to suppress Notifications based on the node depending on each other in the network path. The dependency is modeled in the Node Provisioning in Path Outage.

By default the Path Outage feature is disabled and has to be enabled in the pollerd-configuration.xml.

|

It requires the following information:

-

Parent Foreign Source: The Foreign Source where the parent node is defined.

-

Parent Foreign ID: The Foreign ID of the parent Node where this node depends on.

-

The IP Interface selected as Primary is used as Critical IP

Additionally it is possible to define generic rules for Path Outages. For example there is a whole IP Subnet behind a Router and this Router is the Critical Path to this IP Subnet.

The configuration can be made in Admin → Configure Notifications → Configure Path Outages. It requires to specify a Critical IP of the Router and allows to specify the IP Subnet by defining a Rule/Filter. They are specified in Rules/Filters in the OpenNMS Wiki. In this case, the Router with all Nodes on the IP Subnet are down, but only one Notification is sent. All other Node Down notifications are suppressed matching the Rule/Filter defined in the Path Outage.

To configure a Path Outage based on the example in figure Topology for Path Outage, the configuration has to be defined as the following.

This example expects all Nodes are defined in the same Foreign Source named Network-ACME and the Foreign ID is the same as the Node Label.

|

| Parent Foreign Source | Parent Foreign ID | Provisioned Node |

|---|---|---|

not defined |

not defined |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| The IP Interface which is set to Primary is selected as the Critical IP. In this example it is important the IP interface on default-gw-01 in the network 192.168.1.0/24 is set as Primary interface. The IP interface in the network 172.23.42.0/24 on default-gw-02 is set as Primary interface. |

4.5. Poller Packages

To define more complex monitoring configuration it is possible to group Service configurations into Polling Packages. They allow to assign to Nodes different Service Configurations. To assign a Polling Package to nodes the Rules/Filters syntax can be used. Each Polling Package can have its own Downtime Model configuration.

Multiple packages can be configured, and an interface can exist in more than one package. This gives great flexibility to how the service levels will be determined for a given device.

<package name="example1">(1)

<filter>IPADDR != '0.0.0.0'</filter>(2)

<include-range begin="1.1.1.1" end="254.254.254.254" />(3)

<include-range begin="::1" end="ffff:ffff:ffff:ffff:ffff:ffff:ffff:ffff" />(3)| 1 | Unique name of the polling package. |

| 2 | Filter can be based on IP address, categories or asset attributes of Nodes based on Rules/Filters. The filter is evaluated first and is required. This package is used for all IP Interfaces which don’t have 0.0.0.0 as an assigned IP address and is required. |

| 3 | Allow to specify if the configuration of Services is applied on a range of IP Interfaces (IPv4 or IPv6). |

Instead of the include-range it is possible to add one or more specific IP-Interfaces with:

<specific>192.168.1.59</specific>It is also possible to exclude IP Interfaces with:

<exclude-range begin="192.168.0.100" end="192.168.0.104"/>4.5.1. Response Time Configuration

The definition of Polling Packages allows to configure similar services with different polling intervals. All the response time measurements are persisted in RRD Files and require a definition. Each Polling Package contains a RRD definition

<package name="example1">

<filter>IPADDR != '0.0.0.0'</filter>

<include-range begin="1.1.1.1" end="254.254.254.254" />

<include-range begin="::1" end="ffff:ffff:ffff:ffff:ffff:ffff:ffff:ffff" />

<rrd step="300">(1)

<rra>RRA:AVERAGE:0.5:1:2016</rra>(2)

<rra>RRA:AVERAGE:0.5:12:1488</rra>(3)

<rra>RRA:AVERAGE:0.5:288:366</rra>(4)

<rra>RRA:MAX:0.5:288:366</rra>(5)

<rra>RRA:MIN:0.5:288:366</rra>(6)

</rrd>| 1 | Polling interval for all services in this Polling Package is reflected in the step of size 300 seconds. All services in this package have to polled in 5 min interval, otherwise response time measurements are not correct persisted. |

| 2 | 1 step size is persisted 2016 times: 1 * 5 min * 2016 = 7 d, 5 min accuracy for 7 d. |

| 3 | 12 steps average persisted 1488 times: 12 * 5 min * 1488 = 62 d, aggregated to 60 min for 62 d. |

| 4 | 288 steps average persisted 366 times: 288 * 5 min * 366 = 366 d, aggregated to 24 h for 366 d. |

| 5 | 288 steps maximum from 24 h persisted for 366 d. |

| 6 | 288 steps minimum from 24 h persisted for 366 d. |

| The RRD configuration and the service polling interval has to be aligned. In other cases the persisted response time data is not correct displayed in the response time graph. |

| If the polling interval is changed afterwards, existing RRD files needs to be recreated with the new definitions. |

4.5.2. Overlapping Services

With the possibility of specifying multiple Polling Packages it is possible to use the same Service like ICMP multiple times.

The order how Polling Packages in the poller-configuration.xml are defined is important when IP Interfaces match multiple Polling Packages with the same Service configuration.

The following example shows which configuration is applied for a specific service:

<package name="less-specific">

<filter>IPADDR != '0.0.0.0'</filter>

<include-range begin="1.1.1.1" end="254.254.254.254" />

<include-range begin="::1" end="ffff:ffff:ffff:ffff:ffff:ffff:ffff:ffff" />

<rrd step="300">(1)

<rra>RRA:AVERAGE:0.5:1:2016</rra>

<rra>RRA:AVERAGE:0.5:12:1488</rra>

<rra>RRA:AVERAGE:0.5:288:366</rra>

<rra>RRA:MAX:0.5:288:366</rra>

<rra>RRA:MIN:0.5:288:366</rra>

</rrd>

<service name="ICMP" interval="300000" user-defined="false" status="on">(2)

<parameter key="retry" value="5" />(3)

<parameter key="timeout" value="10000" />(4)

<parameter key="rrd-repository" value="/var/lib/opennms/rrd/response" />

<parameter key="rrd-base-name" value="icmp" />

<parameter key="ds-name" value="icmp" />

</service>

<downtime interval="30000" begin="0" end="300000" />

<downtime interval="300000" begin="300000" end="43200000" />

<downtime interval="600000" begin="43200000" end="432000000" />

</package>

<package name="more-specific">

<filter>IPADDR != '0.0.0.0'</filter>

<include-range begin="192.168.1.1" end="192.168.1.254" />

<include-range begin="2600::1" end="2600:::ffff" />

<rrd step="30">(1)

<rra>RRA:AVERAGE:0.5:1:20160</rra>

<rra>RRA:AVERAGE:0.5:12:14880</rra>

<rra>RRA:AVERAGE:0.5:288:3660</rra>

<rra>RRA:MAX:0.5:288:3660</rra>

<rra>RRA:MIN:0.5:288:3660</rra>

</rrd>

<service name="ICMP" interval="30000" user-defined="false" status="on">(2)

<parameter key="retry" value="2" />(3)

<parameter key="timeout" value="3000" />(4)

<parameter key="rrd-repository" value="/var/lib/opennms/rrd/response" />

<parameter key="rrd-base-name" value="icmp" />

<parameter key="ds-name" value="icmp" />

</service>

<downtime interval="10000" begin="0" end="300000" />

<downtime interval="300000" begin="300000" end="43200000" />

<downtime interval="600000" begin="43200000" end="432000000" />

</package>| 1 | Polling interval in the packages are 300 seconds and 30 seconds |

| 2 | Different polling interval for the service ICMP |

| 3 | Different retry settings for the service ICMP |

| 4 | Different timeout settings for the service ICMP |

The last Polling Package on the service will be applied. This can be used to define a less specific catch all filter for a default configuration. A more specific Polling Package can be used to overwrite the default setting. In the example above all IP Interfaces in 192.168.1/24 or 2600:/64 will be monitored with ICMP with different polling, retry and timeout settings.

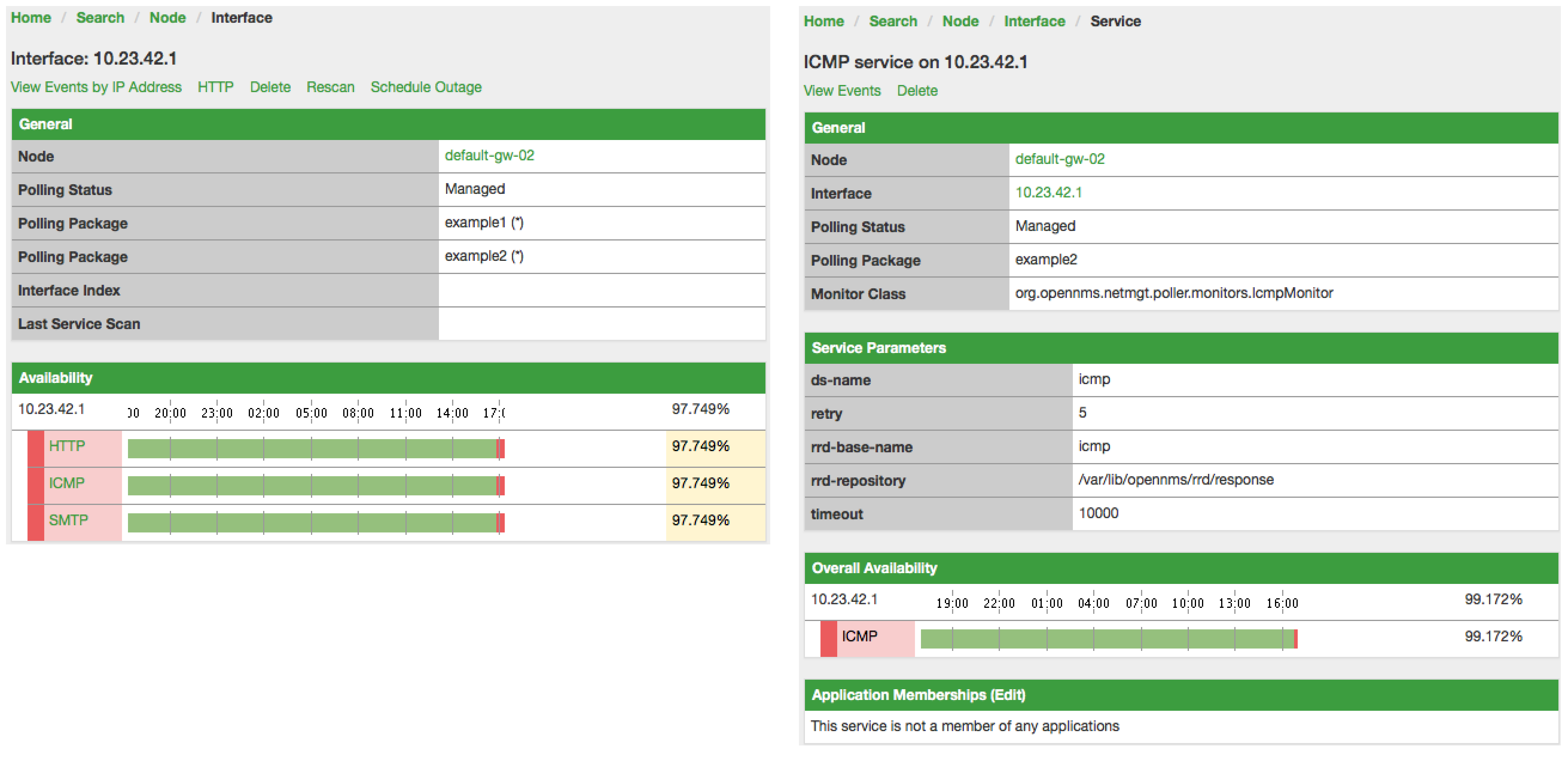

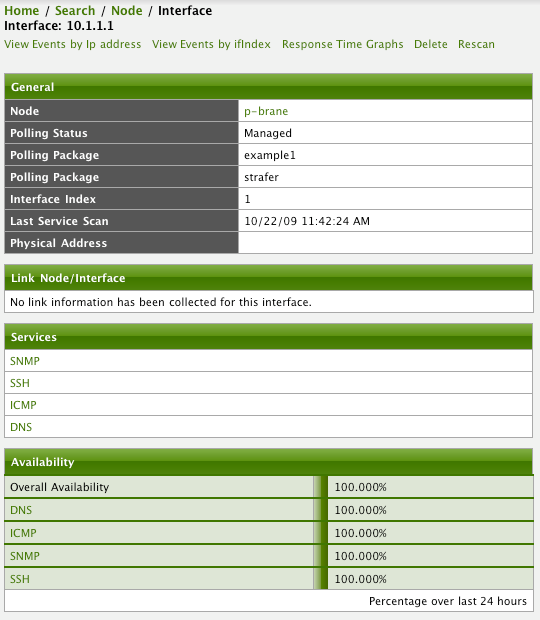

Which Polling Packages are applied to the IP Interface and Service can be found in the Web User Interface. The IP Interface and Service page show which Polling Package and Service configuration is applied for this specific service.

4.5.3. Test Services on manually

For troubleshooting it is possible to run a test via the Karaf Shell:

ssh -p 8101 admin@localhostOnce in the shell, you can print show the commands help as follows:

opennms> poller:test --help

DESCRIPTION

poller:test

Execute a poller test from the command line using current settings from poller-configuration.xml

SYNTAX

poller:test [options]

OPTIONS

-s, --service

Service name

-p, --param

Service parameter ~ key=value

-i, --ipaddress

IP Address to test

-P, --package

Poller Package

-c, --class

Monitor Class

--help

Display this help messageThe following example runs the ICMP monitor on a specific IP Interface.

opennms> poller:test -i 10.23.42.1 -s ICMP -P example1The output is verbose which allows debugging of Monitor configurations. Important output lines are shown as the following:

Checking service ICMP on IP 10.23.42.1 (1)

Package: example1 (2)

Monitor: org.opennms.netmgt.poller.monitors.IcmpMonitor (3)

Parameter ds-name : icmp (4)

Parameter rrd-base-name : icmp (4)

Parameter rrd-repository : /var/lib/opennms/rrd/response (4)

Parameter retry : 2 (5)

Parameter timeout : 3000 (5)

Available ? true (status Up[1])| 1 | Service and IP Interface to run the test |

| 2 | Applied Service configuration from Polling Package for this test |

| 3 | Service Monitor used for this test |

| 4 | RRD configuration for response time measurement |

| 5 | Retry and timeout settings for this test |

4.6. Service monitors

To support several specific applications and management agents, Pollerd executes Service Monitors. This section describes all available built-in Service Monitors which are available and can be configured to allow complex monitoring. For information how these can be extended, see Development Guide of the OpenNMS documentation.

4.6.1. Common Configuration Parameters

Application or Device specific Monitors are based on a generic API which provide common configuration parameters. These minimal configuration parameters are available in all Monitors and describe the behavior for timeouts, retries, etc.

| Parameter | Description | Required | Default value |

|---|---|---|---|

|

Number of attempts to test a Service to be up or down. |

optional |

|

|

Timeout for the isReachable method, in milliseconds. |

optional |

|

|

Invert the up/down behavior of the monitor |

optional |

|

In case the Monitor is using the SNMP Protocol the default configuration for timeout and retry are used from the SNMP Configuration (snmp-config.xml).

|

Minion Configuration Parameters

When nodes are configured with a non-default location, the associated Service Monitors are executed on a Minion configured with that same location. If there are many Minions at a given location, the Service Monitor may be executed on any of the Minions that are currently available. Users can choose to execute a Service Monitor on a specific Minion, by specifying the System ID of the Minion. This mechanism is used for monitoring the Minions individually.

The following parameters can be used to override this behavior and control where the Service Monitors are executed.

| Parameter | Description | Required | Default value |

|---|---|---|---|

|

Specify the location at which the Service Monitor should be executed. |

optional |

(The location of the associated node) |

|

Specify the System ID on which the Service Monitor should be executed |

optional |

(None) |

|

Use the foreign id of the associated node as the System ID |

optional |

|

| When specifying a System ID the location should also be set to the corresponding location for that system. |

4.6.2. AvailabilityMonitor

This monitor tests reachability of a node by using the isReachable method of the InetAddress java class. The service is considered available if isReachable returns true. See Oracle’s documentation for more details.

| This monitor is deprecated in favour of the IcmpMonitor monitor. You should only use this monitor on remote pollers running on unusual configurations (See below for more details). |

Monitor facts

Class Name |

|

Remote Enabled |

true |

Configuration and Usage

This monitor implements the Common Configuration Parameters.

Examples

<service name="AVAIL" interval="300000" user-defined="false" status="on">

<parameter key="retry" value="2"/>

<parameter key="timeout" value="5000"/>

</service>

<monitor service="AVAIL" class-name="org.opennms.netmgt.poller.monitors.AvailabilityMonitor"/>IcmpMonitor vs AvailabilityMonitor

This monitor has been developped in a time when the IcmpMonitor monitor wasn’t remote enabled, to circumvent this limitation. Now, with the JNA ICMP implementation, the IcmpMonitor monitor is remote enabled under most configurations and this monitor shouldn’t be needed -unless you’re running your remote poller on such an unusual configuration (See also issue NMS-6735 for more information)-.

4.6.3. BgpSessionMonitor

This monitor checks if a BGP-Session to a peering partner (peer-ip) is functional. To monitor the BGP-Session the RFC1269 SNMP MIB is used and test the status of the session using the following OIDs is used:

BGP_PEER_STATE_OID = .1.3.6.1.2.1.15.3.1.2.<peer-ip> BGP_PEER_ADMIN_STATE_OID = .1.3.6.1.2.1.15.3.1.3.<peer-ip> BGP_PEER_REMOTEAS_OID = .1.3.6.1.2.1.15.3.1.9.<peer-ip> BGP_PEER_LAST_ERROR_OID = .1.3.6.1.2.1.15.3.1.14.<peer-ip> BGP_PEER_FSM_EST_TIME_OID = .1.3.6.1.2.1.15.3.1.16.<peer-ip>

The <peer-ip> is the far end IP address of the BGP session end point.

A SNMP get request for BGP_PEER_STATE_OID returns a result between 1 to 6.

The servicestates for OpenNMS Horizon are mapped as follows:

| Result | State description | Monitor state in OpenNMS Horizon |

|---|---|---|

|

Idle |

DOWN |

|

Connect |

DOWN |

|

Active |

DOWN |

|

OpenSent |

DOWN |

|

OpenConfirm |

DOWN |

|

Established |

UP |

Monitor facts

Class Name |

|

Remote Enabled |

false |

To define the mapping I used the description from RFC1771 BGP Finite State Machine.

Configuration and Usage

| Parameter | Description | Required | Default value |

|---|---|---|---|

|

IP address of the far end BGP peer session |

required |

|

This monitor implements the Common Configuration Parameters.

Examples

To monitor the session state Established it is necessary to add a service to your poller configuration in '$OPENNMS_HOME/etc/poller-configuration.xml', for example:

<!-- Example configuration poller-configuration.xml -->

<service name="BGP-Peer-99.99.99.99-AS65423" interval="300000"

user-defined="false" status="on">

<parameter key="retry" value="2" />

<parameter key="timeout" value="3000" />

<parameter key="port" value="161" />

<parameter key="bgpPeerIp" value="99.99.99.99" />

</service>

<monitor service="BGP-Peer-99.99.99.99-AS65423" class-name="org.opennms.netmgt.poller.monitors.BgpSessionMonitor" />Error code mapping

The BGP_PEER_LAST_ERROR_OID gives an error in HEX-code. To make it human readable a codemapping table is implemented:

| Error code | Error Message |

|---|---|

|

Message Header Error |

|

Message Header Error - Connection Not Synchronized |

|

Message Header Error - Bad Message Length |

|

Message Header Error - Bad Message Type |

|

OPEN Message Error |

|

OPEN Message Error - Unsupported Version Number |

|

OPEN Message Error - Bad Peer AS |

|

OPEN Message Error - Bad BGP Identifier |

|

OPEN Message Error - Unsupported Optional Parameter |

|

OPEN Message Error (deprecated) |

|

OPEN Message Error - Unacceptable Hold Time |

|

UPDATE Message Error |

|

UPDATE Message Error - Malformed Attribute List |

|

UPDATE Message Error - Unrecognized Well-known Attribute |

|

UPDATE Message Error - Missing Well-known Attribute |

|

UPDATE Message Error - Attribute Flags Error |

|

UPDATE Message Error - Attribute Length Error |

|

UPDATE Message Error - Invalid ORIGIN Attribute |

|

UPDATE Message Error (deprecated) |

|

UPDATE Message Error - Invalid NEXT_HOP Attribute |

|

UPDATE Message Error - Optional Attribute Error |

|

UPDATE Message Error - Invalid Network Field |

|

UPDATE Message Error - Malformed AS_PATH |

|

Hold Timer Expired |

|

Finite State Machine Error |

|

Cease |

|

Cease - Maximum Number of Prefixes Reached |

|

Cease - Administrative Shutdown |

|

Cease - Peer De-configured |

|

Cease - Administrative Reset |

|

Cease - Connection Rejected |

|

Cease - Other Configuration Change |

|

Cease - Connection Collision Resolution |

|

Cease - Out of Resources |

Instead of HEX-Code the error message will be displayed in the service down logmessage. To give some additional informations the logmessage contains also

BGP-Peer Adminstate BGP-Peer Remote AS BGP-Peer established time in seconds

Debugging

If you have problems to detect or monitor the BGP Session you can use the following command to figure out where the problem come from.

snmpwalk -v 2c -c <myCommunity> <myRouter2Monitor> .1.3.6.1.2.1.15.3.1.2.99.99.99.99Replace 99.99.99.99 with your BGP-Peer IP.

The result should be an Integer between 1 and 6.

4.6.4. BSFMonitor

This monitor runs a Bean Scripting Framework BSF compatible script to determine the status of a service. Users can write scripts to perform highly custom service checks. This monitor is not optimised for scale. It’s intended for a small number of custom checks or prototyping of monitors.

BSFMonitor vs SystemExecuteMonitor

The BSFMonitor avoids the overhead of fork(2) that is used by the SystemExecuteMonitor. BSFMonitor also grants access to a selection of OpenNMS Horizon internal methods and classes that can be used in the script.

Monitor facts

Class Name |

|

Remote Enabled |

false |

Configuration and Usage

| Parameter | Description | Required | Default value |

|---|---|---|---|

|

Path to the script file. |

required |

|

|

The BSF Engine to run the script in different languages like |

required |

|

|

one of |

optional |

|

|

The BSF language class, like |

optional |

file-name extension is interpreted by default |

|

comma-separated list |

optional |

|

This monitor implements the Common Configuration Parameters.

| Variable | Type | Description |

|---|---|---|

|

Map<String, Object> |

The map contains all various parameters passed to the monitor

from the service definition it the |

|

String |

The IP address that is currently being polled. |

|

int |

The Node ID of the node the |

|

String |

The Node Label of the node the |

|

String |

The name of the service that is being polled. |

|

BSFMonitor |

The instance of the BSFMonitor object calling the script. Useful for logging via its log(String sev, String fmt, Object... args) method. |

|

HashMap<String, String> |

The script is expected to put its results into this object.

The status indication should be set into the entry with key |

|

LinkedHashMap<String, Number> |

The script is expected to put one or more response times into this object. |

Additionally every parameter added to the service definition in poller-configuration.xml is available as a String object in the script.

The key attribute of the parameter represents the name of the String object and the value attribute represents the value of the String object.

| Please keep in mind, that these parameters are also accessible via the map bean. |

| Avoid non-character names for parameters to avoid problems in the script languages. |

Response Codes

The script has to provide a status code that represents the status of the associated service. The following status codes are defined:

| Code | Description |

|---|---|

OK |

Service is available |

UNK |

Service status unknown |

UNR |

Service is unresponsive |

NOK |

Service is unavailable |

Response time tracking

By default the BSFMonitor tracks the whole time the script file consumes as the response time. If the response time should be persisted the response time add the following parameters:

poller-configuration.xml<!-- where in the filesystem response times are stored -->

<parameter key="rrd-repository" value="/opt/opennms/share/rrd/response" />

<!-- name of the rrd file -->

<parameter key="rrd-base-name" value="minimalbshbase" />

<!-- name of the data source in the rrd file -->

<!-- by default "response-time" is used as ds-name -->

<parameter key="ds-name" value="myResponseTime" />It is also possible to return one or many response times directly from the script.

To add custom response times or override the default one, add entries to the times object.

The entries are keyed with a String that names the datasource and have as values a number that represents the response time.

To override the default response time datasource add an entry into times named response-time.

Timeout and Retry

The BSFMonitor does not perform any timeout or retry processing on its own. If retry and or timeout behaviour is required, it has to be implemented in the script itself.

Requirements for the script (run-types)

Depending on the run-type the script has to provide its results in different ways.

For minimal scripts with very simple logic run-type eval is the simple option.

Scripts running in eval mode have to return a String matching one of the status codes.

If your script is more than a one-liner, run-type exec is essentially required.

Scripts running in exec mode need not return anything, but they have to add a status entry with a status code to the results object.

Additionally, the results object can also carry a "reason":"message" entry that is used in non OK states.

Commonly used language settings

The BSF supports many languages, the following table provides the required setup for commonly used languages.

| Language | lang-class | bsf-engine | required library |

|---|---|---|---|

beanshell |

|

supported by default |

|

groovy |

|

groovy-all-[version].jar |

|

jython |

|

jython-[version].jar |

Example Bean Shell

poller-configuration.xml<service name="MinimalBeanShell" interval="300000" user-defined="true" status="on">

<parameter key="file-name" value="/tmp/MinimalBeanShell.bsh"/>

<parameter key="bsf-engine" value="bsh.util.BeanShellBSFEngine"/>

</service>

<monitor service="MinimalBeanShell" class-name="org.opennms.netmgt.poller.monitors.BSFMonitor" />MinimalBeanShell.bsh script filebsf_monitor.log("ERROR", "Starting MinimalBeanShell.bsf", null);

File testFile = new File("/tmp/TestFile");

if (testFile.exists()) {

return "OK";

} else {

results.put("reason", "file does not exist");

return "NOK";

}Example Groovy

To use the Groovy language an additional library is required.

Copy a compatible groovy-all.jar into to opennms/lib folder and restart OpenNMS Horizon.

That makes Groovy available for the BSFMonitor.

poller-configuration.xml with default run-type set to eval<service name="MinimalGroovy" interval="300000" user-defined="true" status="on">

<parameter key="file-name" value="/tmp/MinimalGroovy.groovy"/>

<parameter key="bsf-engine" value="org.codehaus.groovy.bsf.GroovyEngine"/>

</service>

<monitor service="MinimalGroovy" class-name="org.opennms.netmgt.poller.monitors.BSFMonitor" />MinimalGroovy.groovy script file for run-type evalbsf_monitor.log("ERROR", "Starting MinimalGroovy.groovy", null);

File testFile = new File("/tmp/TestFile");

if (testFile.exists()) {

return "OK";

} else {

results.put("reason", "file does not exist");

return "NOK";

}poller-configuration.xml with run-type set to exec<service name="MinimalGroovy" interval="300000" user-defined="true" status="on">

<parameter key="file-name" value="/tmp/MinimalGroovy.groovy"/>

<parameter key="bsf-engine" value="org.codehaus.groovy.bsf.GroovyEngine"/>

<parameter key="run-type" value="exec"/>

</service>

<monitor service="MinimalGroovy" class-name="org.opennms.netmgt.poller.monitors.BSFMonitor" />MinimalGroovy.groovy script file for run-type set to execbsf_monitor.log("ERROR", "Starting MinimalGroovy", null);

def testFile = new File("/tmp/TestFile");

if (testFile.exists()) {

results.put("status", "OK")

} else {

results.put("reason", "file does not exist");

results.put("status", "NOK");

}Example Jython

To use the Jython (Java implementation of Python) language an additional library is required.

Copy a compatible jython-x.y.z.jar into the opennms/lib folder and restart OpenNMS Horizon.

That makes Jython available for the BSFMonitor.

poller-configuration.xml with run-type exec<service name="MinimalJython" interval="300000" user-defined="true" status="on">

<parameter key="file-name" value="/tmp/MinimalJython.py"/>

<parameter key="bsf-engine" value="org.apache.bsf.engines.jython.JythonEngine"/>

<parameter key="run-type" value="exec"/>

</service>

<monitor service="MinimalJython" class-name="org.opennms.netmgt.poller.monitors.BSFMonitor" />MinimalJython.py script file for run-type set to execfrom java.io import File

bsf_monitor.log("ERROR", "Starting MinimalJython.py", None);

if (File("/tmp/TestFile").exists()):

results.put("status", "OK")

else:

results.put("reason", "file does not exist")

results.put("status", "NOK")

We have to use run-type exec here because Jython chokes on the import keyword in eval mode.

|

| As profit that this is really Python, notice the substitution of Python’s None value for Java’s null in the log call. |

Advanced examples

The following example references all beans that are exposed to the script, including a custom parameter.

poller-configuration.xml<service name="MinimalGroovy" interval="30000" user-defined="true" status="on">

<parameter key="file-name" value="/tmp/MinimalGroovy.groovy"/>

<parameter key="bsf-engine" value="org.codehaus.groovy.bsf.GroovyEngine"/>

<!-- custom parameters (passed to the script) -->

<parameter key="myParameter" value="Hello Groovy" />

<!-- optional for response time tracking -->

<parameter key="rrd-repository" value="/opt/opennms/share/rrd/response" />

<parameter key="rrd-base-name" value="minimalgroovybase" />

<parameter key="ds-name" value="minimalgroovyds" />

</service>

<monitor service="MinimalGroovy" class-name="org.opennms.netmgt.poller.monitors.BSFMonitor" />bsf_monitor.log("ERROR", "Starting MinimalGroovy", null);

//list of all available objects from the BSFMonitor

Map<String, Object> map = map;

bsf_monitor.log("ERROR", "---- map ----", null);

bsf_monitor.log("ERROR", map.toString(), null);

String ip_addr = ip_addr;

bsf_monitor.log("ERROR", "---- ip_addr ----", null);

bsf_monitor.log("ERROR", ip_addr, null);

int node_id = node_id;

bsf_monitor.log("ERROR", "---- node_id ----", null);

bsf_monitor.log("ERROR", node_id.toString(), null);

String node_label = node_label;

bsf_monitor.log("ERROR", "---- node_label ----", null);

bsf_monitor.log("ERROR", node_label, null);

String svc_name = svc_name;

bsf_monitor.log("ERROR", "---- svc_name ----", null);

bsf_monitor.log("ERROR", svc_name, null);

org.opennms.netmgt.poller.monitors.BSFMonitor bsf_monitor = bsf_monitor;

bsf_monitor.log("ERROR", "---- bsf_monitor ----", null);

bsf_monitor.log("ERROR", bsf_monitor.toString(), null);

HashMap<String, String> results = results;

bsf_monitor.log("ERROR", "---- results ----", null);

bsf_monitor.log("ERROR", results.toString(), null);

LinkedHashMap<String, Number> times = times;

bsf_monitor.log("ERROR", "---- times ----", null);

bsf_monitor.log("ERROR", times.toString(), null);

// reading a parameter from the service definition

String myParameter = myParameter;

bsf_monitor.log("ERROR", "---- myParameter ----", null);

bsf_monitor.log("ERROR", myParameter, null);

// minimal example

def testFile = new File("/tmp/TestFile");

if (testFile.exists()) {

bsf_monitor.log("ERROR", "Done MinimalGroovy ---- OK ----", null);

return "OK";

} else {

results.put("reason", "file does not exist");

bsf_monitor.log("ERROR", "Done MinimalGroovy ---- NOK ----", null);

return "NOK";

}4.6.5. CiscoIpSlaMonitor

This monitor can be used to monitor IP SLA configurations on your Cisco devices. This monitor supports the following SNMP OIDS from CISCO-RTT-MON-MIB:

RTT_ADMIN_TAG_OID = .1.3.6.1.4.1.9.9.42.1.2.1.1.3 RTT_OPER_STATE_OID = .1.3.6.1.4.1.9.9.42.1.2.9.1.10 RTT_LATEST_OPERSENSE_OID = .1.3.6.1.4.1.9.9.42.1.2.10.1.2 RTT_ADMIN_THRESH_OID = .1.3.6.1.4.1.9.9.42.1.2.1.1.5 RTT_ADMIN_TYPE_OID = .1.3.6.1.4.1.9.9.42.1.2.1.1.4 RTT_LATEST_OID = .1.3.6.1.4.1.9.9.42.1.2.10.1.1