1. Service Assurance

1.1. Service monitors

1.1.1. AvailabilityMonitor

This monitor tests the reachability of a node by using the isReachable method of the Java class InetAddress. The service is considered available if isReachable returns true. See Oracle’s documentation for more details.

| This monitor is deprecated in favour of the IcmpMonitor. You should only use it on remote pollers running on unusual configurations (see below for more details). |

Monitor facts

| Class Name | org.opennms.netmgt.poller.monitors.AvailabilityMonitor |
| Remote Enabled | true |

Configuration and Usage

| Parameter | Description | Required | Default value |
|---|---|---|---|
| retry | Number of attempts to have the isReachable method return true. | optional | |
| timeout | Timeout for the isReachable method, in milliseconds. | optional | |

Examples

<service name="AVAIL" interval="300000" user-defined="false" status="on">

<parameter key="retry" value="2"/>

<parameter key="timeout" value="5000"/>

</service>

<monitor service="AVAIL" class-name="org.opennms.netmgt.poller.monitors.AvailabilityMonitor"/>

IcmpMonitor vs AvailabilityMonitor

This monitor was developed at a time when the IcmpMonitor wasn’t remote enabled, to circumvent that limitation. Now, with the JNA ICMP implementation, the IcmpMonitor is remote enabled under most configurations and this monitor shouldn’t be needed, unless you’re running your remote poller on an unusual configuration (see also issue NMS-6735 for more information).

1.1.2. BgpSessionMonitor

This monitor checks whether a BGP session to a peering partner (peer-ip) is functional. It uses the RFC1269 SNMP MIB and tests the status of the session with the following OIDs:

BGP_PEER_STATE_OID = .1.3.6.1.2.1.15.3.1.2.<peer-ip>
BGP_PEER_ADMIN_STATE_OID = .1.3.6.1.2.1.15.3.1.3.<peer-ip>
BGP_PEER_REMOTEAS_OID = .1.3.6.1.2.1.15.3.1.9.<peer-ip>
BGP_PEER_LAST_ERROR_OID = .1.3.6.1.2.1.15.3.1.14.<peer-ip>
BGP_PEER_FSM_EST_TIME_OID = .1.3.6.1.2.1.15.3.1.16.<peer-ip>

The <peer-ip> is the far end IP address of the BGP session end point.
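As a minimal sketch of how the instance OIDs are formed, the following Python snippet appends the peer address to each column OID listed above. The function name `bgp_peer_oids` is hypothetical and only illustrates the naming scheme; it is not part of OpenNMS.

```python
import ipaddress

# Column OIDs as listed above; the peer IP is appended as the instance.
BGP_PEER_COLUMNS = {
    "BGP_PEER_STATE_OID": ".1.3.6.1.2.1.15.3.1.2",
    "BGP_PEER_ADMIN_STATE_OID": ".1.3.6.1.2.1.15.3.1.3",
    "BGP_PEER_REMOTEAS_OID": ".1.3.6.1.2.1.15.3.1.9",
    "BGP_PEER_LAST_ERROR_OID": ".1.3.6.1.2.1.15.3.1.14",
    "BGP_PEER_FSM_EST_TIME_OID": ".1.3.6.1.2.1.15.3.1.16",
}


def bgp_peer_oids(peer_ip):
    """Return the full OIDs for one BGP peer (hypothetical helper)."""
    # Reject anything that is not a dotted-quad IPv4 address.
    ipaddress.IPv4Address(peer_ip)
    return {name: base + "." + peer_ip for name, base in BGP_PEER_COLUMNS.items()}


if __name__ == "__main__":
    for name, oid in bgp_peer_oids("99.99.99.99").items():
        print(name, "=", oid)
```

The OID built for BGP_PEER_STATE_OID is the one you would pass to snmpget or snmpwalk when checking a peer by hand.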

An SNMP get request for BGP_PEER_STATE_OID returns a result between 1 and 6.

The service states for OpenNMS are mapped as follows:

| Result | State description | Monitor state in OpenNMS |
|---|---|---|
| 1 | Idle | DOWN |
| 2 | Connect | DOWN |
| 3 | Active | DOWN |
| 4 | OpenSent | DOWN |
| 5 | OpenConfirm | DOWN |
| 6 | Established | UP |
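The mapping can be sketched in a few lines of Python. The numeric values follow the bgpPeerState enumeration of the BGP4 MIB (idle(1) through established(6)); the function name `monitor_state` is hypothetical and this is an illustration of the rule, not the monitor's source code.

```python
# bgpPeerState values as defined in the BGP4 MIB.
BGP_PEER_STATES = {
    1: "Idle",
    2: "Connect",
    3: "Active",
    4: "OpenSent",
    5: "OpenConfirm",
    6: "Established",
}


def monitor_state(result):
    """Map a BGP_PEER_STATE_OID result to UP or DOWN (hypothetical helper)."""
    if result not in BGP_PEER_STATES:
        raise ValueError("unexpected bgpPeerState value: %r" % (result,))
    # Only an established session counts as a working service.
    return "UP" if BGP_PEER_STATES[result] == "Established" else "DOWN"
```

Only result 6 yields UP; every other valid state is reported as DOWN.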

Monitor facts

| Class Name | org.opennms.netmgt.poller.monitors.BgpSessionMonitor |
| Remote Enabled | false |

The state mapping follows the description of the BGP Finite State Machine in RFC1771.

Configuration and Usage

| Parameter | Description | Required | Default value |
|---|---|---|---|
| bgpPeerIp | IP address of the far end BGP peer session | required | |
| retry | Number of attempts to get the BGP peer state with SNMP | required | |
| timeout | Time to wait for the SNMP agent’s response before trying the next attempt. | required | |

Examples

To monitor the session state Established it is necessary to add a service to your poller configuration in '$OPENNMS_HOME/etc/poller-configuration.xml', for example:

<!-- Example configuration poller-configuration.xml -->

<service name="BGP-Peer-99.99.99.99-AS65423" interval="300000"

user-defined="false" status="on">

<parameter key="retry" value="2" />

<parameter key="timeout" value="3000" />

<parameter key="port" value="161" />

<parameter key="bgpPeerIp" value="99.99.99.99" />

</service>

<monitor service="BGP-Peer-99.99.99.99-AS65423" class-name="org.opennms.netmgt.poller.monitors.BgpSessionMonitor" />

Error code mapping

The BGP_PEER_LAST_ERROR_OID returns the error as a hex code. To make it human-readable, the monitor implements a code mapping table:

| Error code | Error Message |
|---|---|
| | Message Header Error |
| | Message Header Error - Connection Not Synchronized |
| | Message Header Error - Bad Message Length |
| | Message Header Error - Bad Message Type |
| | OPEN Message Error |
| | OPEN Message Error - Unsupported Version Number |
| | OPEN Message Error - Bad Peer AS |
| | OPEN Message Error - Bad BGP Identifier |
| | OPEN Message Error - Unsupported Optional Parameter |
| | OPEN Message Error (deprecated) |
| | OPEN Message Error - Unacceptable Hold Time |
| | UPDATE Message Error |
| | UPDATE Message Error - Malformed Attribute List |
| | UPDATE Message Error - Unrecognized Well-known Attribute |
| | UPDATE Message Error - Missing Well-known Attribute |
| | UPDATE Message Error - Attribute Flags Error |
| | UPDATE Message Error - Attribute Length Error |
| | UPDATE Message Error - Invalid ORIGIN Attribute |
| | UPDATE Message Error (deprecated) |
| | UPDATE Message Error - Invalid NEXT_HOP Attribute |
| | UPDATE Message Error - Optional Attribute Error |
| | UPDATE Message Error - Invalid Network Field |
| | UPDATE Message Error - Malformed AS_PATH |
| | Hold Timer Expired |
| | Finite State Machine Error |
| | Cease |
| | Cease - Maximum Number of Prefixes Reached |
| | Cease - Administrative Shutdown |
| | Cease - Peer De-configured |
| | Cease - Administrative Reset |
| | Cease - Connection Rejected |
| | Cease - Other Configuration Change |
| | Cease - Connection Collision Resolution |
| | Cease - Out of Resources |

The service-down log message shows the error message instead of the hex code. For additional information, the log message also contains:

- BGP peer admin state
- BGP peer remote AS
- BGP peer established time in seconds
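To illustrate how a hex error value can be read, here is a small Python sketch. It assumes the value is two bytes, the NOTIFICATION error code followed by the error subcode, as described for BGP in RFC 4271; the helper names are hypothetical and this is not the lookup table used by the monitor.

```python
# Top-level NOTIFICATION error codes from RFC 4271.
ERROR_CODES = {
    1: "Message Header Error",
    2: "OPEN Message Error",
    3: "UPDATE Message Error",
    4: "Hold Timer Expired",
    5: "Finite State Machine Error",
    6: "Cease",
}


def split_last_error(hex_value):
    """Split a two-byte hex string such as '06 02' into (code, subcode)."""
    digits = hex_value.replace(" ", "").replace(":", "")
    raw = bytes.fromhex(digits)
    if len(raw) != 2:
        raise ValueError("expected exactly two bytes, got %d" % len(raw))
    return raw[0], raw[1]


def describe_last_error(hex_value):
    """Return a readable summary of the error value (assumed format)."""
    code, subcode = split_last_error(hex_value)
    name = ERROR_CODES.get(code, "Unknown error")
    return "%s (code %d, subcode %d)" % (name, code, subcode)
```

For example, `describe_last_error("04 00")` names the Hold Timer Expired error with subcode 0.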

Debugging

If you have problems detecting or monitoring the BGP session, you can use the following command to figure out where the problem comes from.

snmpwalk -v 2c -c <myCommunity> <myRouter2Monitor> .1.3.6.1.2.1.15.3.1.2.99.99.99.99

Replace 99.99.99.99 with your BGP peer IP.

The result should be an integer between 1 and 6.

1.1.3. BSFMonitor

This monitor runs a Bean Scripting Framework (BSF) compatible script to determine the status of a service. Users can write scripts to perform highly customized service checks. This monitor is not optimised for scale. It’s intended for a small number of custom checks or for prototyping monitors.

BSFMonitor vs SystemExecuteMonitor

The BSFMonitor avoids the overhead of fork(2) that is used by the SystemExecuteMonitor. BSFMonitor also grants access to a selection of OpenNMS internal methods and classes that can be used in the script.

Monitor facts

| Class Name | org.opennms.netmgt.poller.monitors.BSFMonitor |
| Remote Enabled | false |

Configuration and Usage

| Parameter | Description | Required | Default value |
|---|---|---|---|
| file-name | Path to the script file. | required | |
| bsf-engine | The BSF Engine to run the script in different languages like | required | |
| run-type | one of | optional | |
| lang-class | The BSF language class, like | optional | file-name extension is interpreted by default |
| | comma-separated list | optional | |

| Variable | Type | Description |
|---|---|---|
| map | Map<String, Object> | The map contains all parameters passed to the monitor from the service definition in the 'poller-configuration.xml' file. |
| ip_addr | String | The IP address that is currently being polled. |
| node_id | int | The Node ID of the node the |
| node_label | String | The Node Label of the node the |
| svc_name | String | The name of the service that is being polled. |
| bsf_monitor | BSFMonitor | The instance of the BSFMonitor object calling the script. Useful for logging via its log(String sev, String fmt, Object... args) method. |
| results | HashMap<String, String> | The script is expected to put its results into this object. The status indication should be set into the entry with key status. |
| times | LinkedHashMap<String, Number> | The script is expected to put one or more response times into this object. |

Additionally every parameter added to the service definition in 'poller-configuration.xml' is available as a String object in the script. The key attribute of the parameter represents the name of the String object and the value attribute represents the value of the String object.

| Please keep in mind that these parameters are also accessible via the map bean. |

| Avoid non-character names for parameters to avoid problems in the script languages. |

Response Codes

The script has to provide a status code that represents the status of the associated service. The following status codes are defined:

| Code | Description |
|---|---|
| OK | Service is available |
| | Service status unknown |
| | Service is unresponsive |
| NOK | Service is unavailable |

Response time tracking

By default the BSFMonitor tracks the whole time the script file consumes as the response time. If the response time should be persisted, add the following parameters:

<!-- where in the filesystem response times are stored -->

<parameter key="rrd-repository" value="/opt/opennms/share/rrd/response" />

<!-- name of the rrd file -->

<parameter key="rrd-base-name" value="minimalbshbase" />

<!-- name of the data source in the rrd file -->

<!-- by default "response-time" is used as ds-name -->

<parameter key="ds-name" value="myResponseTime" />

It is also possible to return one or many response times directly from the script.

To add custom response times or override the default one, add entries to the times object.

The entries are keyed with a String that names the datasource and have as values a number that represents the response time.

To override the default response time datasource add an entry into times named response-time.
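The override rule can be pictured with a short Python sketch. It is an illustration only, under the assumption that the default datasource is named response-time; the function `effective_times` is hypothetical and does not reflect the internals of the BSFMonitor.

```python
from collections import OrderedDict


def effective_times(elapsed_ms, script_times):
    """Combine the measured run time with entries the script put into times."""
    merged = OrderedDict()
    # The whole script run time is tracked as the default response time.
    merged["response-time"] = elapsed_ms
    # Script entries add datasources; a "response-time" key replaces the default.
    merged.update(script_times)
    return merged


if __name__ == "__main__":
    print(effective_times(120, {"dns-lookup": 15}))
    print(effective_times(120, {"response-time": 42}))
```

In the first call both datasources are kept; in the second the script-provided value of 42 replaces the measured 120.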

Timeout and Retry

The BSFMonitor does not perform any timeout or retry processing on its own. If retry and/or timeout behaviour is required, it has to be implemented in the script itself.

Requirements for the script (run-types)

Depending on the run-type the script has to provide its results in different ways.

For minimal scripts with very simple logic run-type eval is the simple option.

Scripts running in eval mode have to return a String matching one of the status codes.

If your script is more than a one-liner, run-type exec is essentially required.

Scripts running in exec mode need not return anything, but they have to add a status entry with a status code to the results object.

Additionally, the results object can also carry a "reason":"message" entry that is used in non OK states.
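The difference between the two run-types can be summarised in a small Python sketch. The function `script_status` is hypothetical and only restates the rules above: eval takes the status from the script's return value, exec takes it from the status entry of the results object.

```python
def script_status(run_type, return_value, results):
    """Return (status, reason) the way the two run-types are described above."""
    if run_type == "eval":
        # eval: the script returns a String matching one of the status codes.
        status = return_value
    elif run_type == "exec":
        # exec: the return value is ignored, the status comes from results.
        status = results.get("status")
    else:
        raise ValueError("unknown run-type: %r" % (run_type,))
    if status is None:
        raise ValueError("script did not provide a status code")
    # The optional reason entry is used in non-OK states.
    return str(status), results.get("reason")
```

A script in exec mode that forgets to set the status entry ends up in the error branch, which is why the entry is mandatory in that mode.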

Commonly used language settings

The BSF supports many languages. The following table provides the required setup for commonly used languages.

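| Language | lang-class | bsf-engine | required library |
|---|---|---|---|
| beanshell | | bsh.util.BeanShellBSFEngine | supported by default |
| groovy | | org.codehaus.groovy.bsf.GroovyEngine | groovy-all-[version].jar |
| jython | | org.apache.bsf.engines.jython.JythonEngine | jython-[version].jar |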

Example Bean Shell

<service name="MinimalBeanShell" interval="300000" user-defined="true" status="on">

<parameter key="file-name" value="/tmp/MinimalBeanShell.bsh"/>

<parameter key="bsf-engine" value="bsh.util.BeanShellBSFEngine"/>

</service>

<monitor service="MinimalBeanShell" class-name="org.opennms.netmgt.poller.monitors.BSFMonitor" />

bsf_monitor.log("ERROR", "Starting MinimalBeanShell.bsf", null);

File testFile = new File("/tmp/TestFile");

if (testFile.exists()) {

return "OK";

} else {

results.put("reason", "file does not exist");

return "NOK";

}

Example Groovy

To use the Groovy language an additional library is required. Copy a compatible groovy-all.jar into the 'opennms/lib' folder and restart OpenNMS. That makes Groovy available for the BSFMonitor.

run-type set to eval

<service name="MinimalGroovy" interval="300000" user-defined="true" status="on">

<parameter key="file-name" value="/tmp/MinimalGroovy.groovy"/>

<parameter key="bsf-engine" value="org.codehaus.groovy.bsf.GroovyEngine"/>

</service>

<monitor service="MinimalGroovy" class-name="org.opennms.netmgt.poller.monitors.BSFMonitor" />

run-type eval

bsf_monitor.log("ERROR", "Starting MinimalGroovy.groovy", null);

File testFile = new File("/tmp/TestFile");

if (testFile.exists()) {

return "OK";

} else {

results.put("reason", "file does not exist");

return "NOK";

}

run-type set to exec

<service name="MinimalGroovy" interval="300000" user-defined="true" status="on">

<parameter key="file-name" value="/tmp/MinimalGroovy.groovy"/>

<parameter key="bsf-engine" value="org.codehaus.groovy.bsf.GroovyEngine"/>

<parameter key="run-type" value="exec"/>

</service>

<monitor service="MinimalGroovy" class-name="org.opennms.netmgt.poller.monitors.BSFMonitor" />

run-type set to exec

bsf_monitor.log("ERROR", "Starting MinimalGroovy", null);

def testFile = new File("/tmp/TestFile");

if (testFile.exists()) {

results.put("status", "OK")

} else {

results.put("reason", "file does not exist");

results.put("status", "NOK");

}

Example Jython

To use the Jython (Java implementation of Python) language an additional library is required.

Copy a compatible jython-x.y.z.jar into the 'opennms/lib' folder and restart OpenNMS.

That makes Jython available for the BSFMonitor.

run-type exec

<service name="MinimalJython" interval="300000" user-defined="true" status="on">

<parameter key="file-name" value="/tmp/MinimalJython.py"/>

<parameter key="bsf-engine" value="org.apache.bsf.engines.jython.JythonEngine"/>

<parameter key="run-type" value="exec"/>

</service>

<monitor service="MinimalJython" class-name="org.opennms.netmgt.poller.monitors.BSFMonitor" />

run-type set to exec

from java.io import File

bsf_monitor.log("ERROR", "Starting MinimalJython.py", None)

if File("/tmp/TestFile").exists():

    results.put("status", "OK")

else:

    results.put("reason", "file does not exist")

    results.put("status", "NOK")

| We have to use run-type exec here because Jython chokes on the import keyword in eval mode. |

| As proof that this is really Python, notice the substitution of Python’s None value for Java’s null in the log call. |

Advanced examples

The following example references all beans that are exposed to the script, including a custom parameter.

<service name="MinimalGroovy" interval="30000" user-defined="true" status="on">

<parameter key="file-name" value="/tmp/MinimalGroovy.groovy"/>

<parameter key="bsf-engine" value="org.codehaus.groovy.bsf.GroovyEngine"/>

<!-- custom parameters (passed to the script) -->

<parameter key="myParameter" value="Hello Groovy" />

<!-- optional for response time tracking -->

<parameter key="rrd-repository" value="/opt/opennms/share/rrd/response" />

<parameter key="rrd-base-name" value="minimalgroovybase" />

<parameter key="ds-name" value="minimalgroovyds" />

</service>

<monitor service="MinimalGroovy" class-name="org.opennms.netmgt.poller.monitors.BSFMonitor" />

bsf_monitor.log("ERROR", "Starting MinimalGroovy", null);

//list of all available objects from the BSFMonitor

Map<String, Object> map = map;

bsf_monitor.log("ERROR", "---- map ----", null);

bsf_monitor.log("ERROR", map.toString(), null);

String ip_addr = ip_addr;

bsf_monitor.log("ERROR", "---- ip_addr ----", null);

bsf_monitor.log("ERROR", ip_addr, null);

int node_id = node_id;

bsf_monitor.log("ERROR", "---- node_id ----", null);

bsf_monitor.log("ERROR", node_id.toString(), null);

String node_label = node_label;

bsf_monitor.log("ERROR", "---- node_label ----", null);

bsf_monitor.log("ERROR", node_label, null);

String svc_name = svc_name;

bsf_monitor.log("ERROR", "---- svc_name ----", null);

bsf_monitor.log("ERROR", svc_name, null);

org.opennms.netmgt.poller.monitors.BSFMonitor bsf_monitor = bsf_monitor;

bsf_monitor.log("ERROR", "---- bsf_monitor ----", null);

bsf_monitor.log("ERROR", bsf_monitor.toString(), null);

HashMap<String, String> results = results;

bsf_monitor.log("ERROR", "---- results ----", null);

bsf_monitor.log("ERROR", results.toString(), null);

LinkedHashMap<String, Number> times = times;

bsf_monitor.log("ERROR", "---- times ----", null);

bsf_monitor.log("ERROR", times.toString(), null);

// reading a parameter from the service definition

String myParameter = myParameter;

bsf_monitor.log("ERROR", "---- myParameter ----", null);

bsf_monitor.log("ERROR", myParameter, null);

// minimal example

def testFile = new File("/tmp/TestFile");

if (testFile.exists()) {

bsf_monitor.log("ERROR", "Done MinimalGroovy ---- OK ----", null);

return "OK";

} else {

results.put("reason", "file does not exist");

bsf_monitor.log("ERROR", "Done MinimalGroovy ---- NOK ----", null);

return "NOK";

}

1.1.4. CiscoIpSlaMonitor

This monitor can be used to monitor IP SLA configurations on your Cisco devices. It supports the following SNMP OIDs from the CISCO-RTT-MON-MIB:

RTT_ADMIN_TAG_OID = .1.3.6.1.4.1.9.9.42.1.2.1.1.3
RTT_OPER_STATE_OID = .1.3.6.1.4.1.9.9.42.1.2.9.1.10
RTT_LATEST_OPERSENSE_OID = .1.3.6.1.4.1.9.9.42.1.2.10.1.2
RTT_ADMIN_THRESH_OID = .1.3.6.1.4.1.9.9.42.1.2.1.1.5
RTT_ADMIN_TYPE_OID = .1.3.6.1.4.1.9.9.42.1.2.1.1.4
RTT_LATEST_OID = .1.3.6.1.4.1.9.9.42.1.2.10.1.1

The monitor can be run in two scenarios. The first one tests the RTT_LATEST_OPERSENSE which is a sense code for the completion status of the latest RTT operation. If the RTT_LATEST_OPERSENSE returns ok(1) the service is marked as up.

The second scenario is to monitor the configured threshold in the IP SLA config. If the RTT_LATEST_OPERSENSE returns with overThreshold(3) the service is marked down.

Monitor facts

| Class Name | org.opennms.netmgt.poller.monitors.CiscoIpSlaMonitor |
| Remote Enabled | false |

Configuration and Usage

| Parameter | Description | Required | Default value |
|---|---|---|---|
| retry | Number of retries to get the information from the SNMP agent before the service is marked as down. | optional | from 'snmp-config.xml' |
| timeout | Time in milliseconds to wait for the result from the SNMP agent before making the next attempt. | optional | from 'snmp-config.xml' |
| admin-tag | The | required | |
| ignore-thresh | Boolean that indicates whether just the status or the configured threshold should be monitored. | required | |

Example for HTTP and ICMP echo reply

In this example we configure an IP SLA entry to monitor Google’s website with HTTP GET from the Cisco device.

We use 8.8.8.8 as our DNS resolver.

In our example our SLA says we should reach Google’s website within 200ms.

To advise co-workers that this monitor entry is used for monitoring, I set the owner to OpenNMS.

The tag is used to identify the entry later in the SNMP table for monitoring.

ip sla monitor 1

type http operation get url http://www.google.de name-server 8.8.8.8

timeout 3000

threshold 200

owner OpenNMS

tag Google Website

ip sla monitor schedule 1 life forever start-time now

In the second example we configure an IP SLA to test whether the IP address of www.opennms.org is reachable with ICMP from the perspective of the Cisco device. As in the example above, we have a threshold and a timeout.

ip sla 1

icmp-echo 64.146.64.212

timeout 3000

threshold 150

owner OpenNMS

tag OpenNMS Host

ip sla schedule 1 life forever start-time now

| It’s not possible to reconfigure an IP SLA entry. If you want to change parameters, you have to delete the whole configuration and reconfigure it with your new parameters. Back up your Cisco configuration manually or take a look at RANCID. |

To monitor both of the entries the configuration in 'poller-configuration.xml' requires two service definition entries:

<service name="IP-SLA-WEB-Google" interval="300000"

user-defined="false" status="on">

<parameter key="retry" value="2" />

<parameter key="timeout" value="3000" />

<parameter key="admin-tag" value="Google Website" />

<parameter key="ignore-thresh" value="false" />(1)

</service>

<service name="IP-SLA-PING-OpenNMS" interval="300000"

user-defined="false" status="on">

<parameter key="retry" value="2" />

<parameter key="timeout" value="3000" />

<parameter key="admin-tag" value="OpenNMS Host" />

<parameter key="ignore-thresh" value="true" />(2)

</service>

<monitor service="IP-SLA-WEB-Google" class-name="org.opennms.netmgt.poller.monitors.CiscoIpSlaMonitor" />

<monitor service="IP-SLA-PING-OpenNMS" class-name="org.opennms.netmgt.poller.monitors.CiscoIpSlaMonitor" />

| 1 | Service is up if the IP SLA state is ok(1) |

| 2 | Service is down if the IP SLA state is overThreshold(3) |

1.1.5. CiscoPingMibMonitor

This poller monitor’s purpose is to create conceptual rows (entries) in the ciscoPingTable on Cisco IOS devices that support the CISCO-PING-MIB. These entries direct the remote IOS device to ping an IPv4 or IPv6 address with a configurable set of parameters. After the IOS device has completed the requested ping operations, the poller monitor queries the IOS device to determine the results. If the results indicate success according to the configured parameters in the service configuration, then the monitored service is reported as available and the results are available for optional time-series (RRD) storage. If the results indicate failure, the monitored service is reported unavailable with a descriptive reason code. If something goes wrong during the setup of the entry or the subsequent querying of its status, the monitored service is reported to be in an unknown state.

Unlike most poller monitors, the CiscoPingMibMonitor does not interpret the timeout and retries parameters to determine when a poll attempt has timed out or whether it should be attempted again.

The packet-count and packet-timeout parameters instead serve this purpose from the perspective of the remote IOS device.

ciscoPingEntry 1.3.6.1.4.1.9.9.16.1.1.1

ciscoPingSerialNumber 1.3.6.1.4.1.9.9.16.1.1.1.1

ciscoPingProtocol 1.3.6.1.4.1.9.9.16.1.1.1.2

ciscoPingAddress 1.3.6.1.4.1.9.9.16.1.1.1.3

ciscoPingPacketCount 1.3.6.1.4.1.9.9.16.1.1.1.4

ciscoPingPacketSize 1.3.6.1.4.1.9.9.16.1.1.1.5

ciscoPingPacketTimeout 1.3.6.1.4.1.9.9.16.1.1.1.6

ciscoPingDelay 1.3.6.1.4.1.9.9.16.1.1.1.7

ciscoPingTrapOnCompletion 1.3.6.1.4.1.9.9.16.1.1.1.8

ciscoPingSentPackets 1.3.6.1.4.1.9.9.16.1.1.1.9

ciscoPingReceivedPackets 1.3.6.1.4.1.9.9.16.1.1.1.10

ciscoPingMinRtt 1.3.6.1.4.1.9.9.16.1.1.1.11

ciscoPingAvgRtt 1.3.6.1.4.1.9.9.16.1.1.1.12

ciscoPingMaxRtt 1.3.6.1.4.1.9.9.16.1.1.1.13

ciscoPingCompleted 1.3.6.1.4.1.9.9.16.1.1.1.14

ciscoPingEntryOwner 1.3.6.1.4.1.9.9.16.1.1.1.15

ciscoPingEntryStatus 1.3.6.1.4.1.9.9.16.1.1.1.16

ciscoPingVrfName 1.3.6.1.4.1.9.9.16.1.1.1.17

Prerequisites

- One or more Cisco devices running an IOS image of recent vintage; any 12.2 or later image is probably fine. Even very low-end devices appear to support the CISCO-PING-MIB.
- The IOS devices that will perform the remote pings must be configured with an SNMP write community string whose source address access-list includes the address of the OpenNMS server and whose MIB view (if any) includes the OID of the ciscoPingTable.
- The corresponding SNMP write community string must be specified in the write-community attribute of either the top-level <snmp-config> element of 'snmp-config.xml' or a <definition> child element that applies to the SNMP-primary interface of the IOS device(s) that will perform the remote pings.

Scalability concerns

This monitor spends a fair amount of time sleeping while it waits for the remote IOS device to complete the requested ping operations.

The monitor is pessimistic in calculating the delay between creation of the ciscoPingTable entry and its first attempt to retrieve the results of that entry’s ping operations: it will always wait at least (packet-count * (packet-timeout + packet-delay)) milliseconds before even checking whether the remote pings have completed.

It’s therefore prone to hogging poller threads if used with large values for the packet-count, packet-timeout, and/or packet-delay parameters.

Keep these values as small as practical to avoid tying up poller threads unnecessarily.
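To get a feeling for how long a poller thread is blocked, the minimum wait can be computed directly from the formula above. The parameter values in this Python sketch are made-up examples, not the monitor's defaults.

```python
def minimum_wait_ms(packet_count, packet_timeout_ms, packet_delay_ms):
    """Lower bound on the wait: packet-count * (packet-timeout + packet-delay)."""
    return packet_count * (packet_timeout_ms + packet_delay_ms)


if __name__ == "__main__":
    # Hypothetical settings: 5 packets, 2000 ms timeout, 50 ms delay.
    print(minimum_wait_ms(5, 2000, 50), "ms")
```

With these example values the poller thread sleeps for at least 10250 ms per poll, so doubling packet-count doubles the time the thread is tied up.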

This monitor always uses the current time in whole seconds since the UNIX epoch as the instance identifier of the ciscoPingTable entries that it creates. The object that holds this identifier is a signed 32-bit integer type, precluding a finer resolution. It’s probably a good idea to mix in the least-significant byte of the millisecond-accurate time as a substitute for that of the whole-second-accurate value to avoid collisions. IOS seems to clean up entries in this table within a matter of minutes after their ping operations have completed.
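One possible reading of the suggested improvement is sketched below in Python: the lowest byte of the whole-second timestamp is replaced with the lowest byte of the millisecond timestamp, and the result is masked so it stays a positive signed 32-bit value. The function `ping_instance_id` is hypothetical; the monitor itself uses the plain whole-second value as described above.

```python
import time


def ping_instance_id(epoch_millis):
    """Build an instance identifier from a millisecond timestamp (sketch)."""
    seconds = epoch_millis // 1000
    # Keep the upper bytes of the seconds value, take the low byte from the millis.
    mixed = (seconds & ~0xFF) | (epoch_millis & 0xFF)
    # Stay within the positive range of a signed 32-bit integer.
    return mixed & 0x7FFFFFFF


if __name__ == "__main__":
    now_ms = int(time.time() * 1000)
    print(ping_instance_id(now_ms))
```

Two polls started within the same second then differ in their identifier unless the low byte of their millisecond timestamps happens to match.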

Monitor facts

| Class Name | org.opennms.netmgt.poller.monitors.CiscoPingMibMonitor |
| Remote Enabled | false |

Configuration and Usage

| Parameter | Description | Required | Default value |
|---|---|---|---|
| timeout | A timeout, in milliseconds, that should override the SNMP timeout specified in 'snmp-config.xml'. Do not use without a very good reason to do so. | optional | from 'snmp-config.xml' |
| | Number of retries to attempt if the initial attempt times out. Overrides the equivalent value from 'snmp-config.xml'. Do not use unless really needed. | optional | from 'snmp-config.xml' |
| | SNMP protocol version (1, 2c, or 3) to use for operations performed by this service monitor. Do not use without a very good reason to do so. | optional | from 'snmp-config.xml' |
| packet-count | Number of ping packets that the remote IOS device should send. | optional | |
| | Size, in bytes, of each ping packet that the remote IOS device should send. | optional | |
| packet-timeout | Timeout, in milliseconds, of each ping packet sent by the remote IOS device. | optional | |
| packet-delay | Delay, in milliseconds, between ping packets sent by the remote IOS device. | optional | |
| | String value to set as the value of ciscoPingEntryOwner of entries created for this service. | optional | |
| | String value to set as the VRF (VLAN) name in whose context the remote IOS device should perform the pings for this service. | optional | empty String |
| | Numeric database identifier of the node whose primary SNMP interface should be used as the proxy for this service. If specified along with the related | optional | |
| | | optional | |
| proxy-ip-addr | IP address of the interface that should be used as the proxy for this service. Effective only if none of | optional | |
| target-ip-addr | IP address that the remote IOS device should ping. A value of | optional | |
| | A whole-number percentage of pings that must succeed (from the perspective of the remote IOS device) in order for this service to be considered available. As an example, if | optional | |
| rrd-repository | Base directory of an RRD repository in which to store this service monitor’s response-time samples | optional | |
| ds-name | Name of the RRD datasource (DS) name in which to store this service monitor’s response-time samples; rrd-base-name Base name of the RRD file (minus the | optional | |

The following variable can be used in the configuration:

| Variable | Description |
|---|---|
| ${ipaddr} | This value will be substituted with the IP address of the interface on which the monitored service appears. |
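The effect of the variable can be imitated with Python's `string.Template`, which happens to use the same `${name}` syntax. This is a sketch of the substitution idea only; the function `resolve_parameters` and the address 10.1.2.3 are hypothetical and the code is not how OpenNMS implements it.

```python
from string import Template


def resolve_parameters(parameters, ipaddr):
    """Replace ${ipaddr} in every parameter value with the polled address."""
    return {
        key: Template(value).safe_substitute(ipaddr=ipaddr)
        for key, value in parameters.items()
    }


if __name__ == "__main__":
    service_parameters = {
        "proxy-ip-addr": "${ipaddr}",
        "target-ip-addr": "192.168.255.1",
    }
    print(resolve_parameters(service_parameters, "10.1.2.3"))
```

Values without the variable pass through unchanged, so static and per-interface addresses can be mixed in one service definition.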

Example: Ping the same non-routable address from all routers of customer Foo

A service provider’s client, Foo Corporation, has network service at multiple locations. At each Foo location, a point-of-sale system is statically configured at IPv4 address 192.168.255.1. Foo wants to be notified any time a point-of-sale system becomes unreachable. Using an OpenNMS remote location monitor is not feasible. All of Foo Corporation’s CPE routers must be Cisco IOS devices in order to achieve full coverage in this scenario.

One approach to this requirement is to configure all of Foo Corporation’s premise routers to be in the surveillance categories Customer_Foo, CPE, and Routers, and to use a filter to create a poller package that applies only to those routers.

We will use the special value ${ipaddr} for the proxy-ip-addr parameter so that the remote pings will be provisioned on each Foo CPE router.

Since we want each Foo CPE router to ping the same IP address 192.168.255.1, we statically list that value for the target-ip-addr address.

<package name="ciscoping-foo-pos">

<filter>catincCustomer_Foo &amp; catincCPE &amp; catincRouters &amp; nodeSysOID LIKE '.1.3.6.1.4.1.9.%'</filter>

<include-range begin="0.0.0.0" end="254.254.254.254" />

<rrd step="300">

<rra>RRA:AVERAGE:0.5:1:2016</rra>

<rra>RRA:AVERAGE:0.5:12:1488</rra>

<rra>RRA:AVERAGE:0.5:288:366</rra>

<rra>RRA:MAX:0.5:288:366</rra>

<rra>RRA:MIN:0.5:288:366</rra>

</rrd>

<service name="FooPOS" interval="300000" user-defined="false" status="on">

<parameter key="rrd-repository" value="/opt/opennms/share/rrd/response" />

<parameter key="rrd-base-name" value="ciscoping" />

<parameter key="ds-name" value="ciscoping" />

<parameter key="proxy-ip-addr" value="${ipaddr}" />

<parameter key="target-ip-addr" value="192.168.255.1" />

</service>

<downtime interval="30000" begin="0" end="300000" /><!-- 30s, 0, 5m -->

<downtime interval="300000" begin="300000" end="43200000" /><!-- 5m, 5m, 12h -->

<downtime interval="600000" begin="43200000" end="432000000" /><!-- 10m, 12h, 5d -->

<downtime begin="432000000" delete="true" /><!-- anything after 5 days delete -->

</package>

<monitor service="FooPOS" class-name="org.opennms.netmgt.poller.monitors.CiscoPingMibMonitor" />

Example: Ping from a single IOS device the routable address of each router of customer Bar

A service provider’s client, Bar Limited, has network service at multiple locations. While OpenNMS' world-class service assurance is generally sufficient, Bar also wants to be notified any time a premise router at one of their locations becomes unreachable from the perspective of an IOS device in Bar’s main data center. Some or all of the Bar Limited CPE routers may be non-Cisco devices in this scenario.

To meet this requirement, our approach is to configure Bar Limited’s premise routers to be in the surveillance categories Customer_Bar, CPE, and Routers, and to use a filter to create a poller package that applies only to those routers.

This time, though, we will use the special value ${ipaddr} not in the proxy-ip-addr parameter but in the target-ip-addr parameter so that the remote pings will be performed for each Bar CPE router.

Since we want the same IOS device 20.11.5.11 to ping the CPE routers, we statically list that value for the proxy-ip-addr address.

Example 'poller-configuration.xml' additions

<package name="ciscoping-bar-cpe">

<filter>catincCustomer_Bar &amp; catincCPE &amp; catincRouters</filter>

<include-range begin="0.0.0.0" end="254.254.254.254" />

<rrd step="300">

<rra>RRA:AVERAGE:0.5:1:2016</rra>

<rra>RRA:AVERAGE:0.5:12:1488</rra>

<rra>RRA:AVERAGE:0.5:288:366</rra>

<rra>RRA:MAX:0.5:288:366</rra>

<rra>RRA:MIN:0.5:288:366</rra>

</rrd>

<service name="BarCentral" interval="300000" user-defined="false" status="on">

<parameter key="rrd-repository" value="/opt/opennms/share/rrd/response" />

<parameter key="rrd-base-name" value="ciscoping" />

<parameter key="ds-name" value="ciscoping" />

<parameter key="proxy-ip-addr" value="20.11.5.11" />

<parameter key="target-ip-addr" value="${ipaddr}" />

</service>

<downtime interval="30000" begin="0" end="300000" /><!-- 30s, 0, 5m -->

<downtime interval="300000" begin="300000" end="43200000" /><!-- 5m, 5m, 12h -->

<downtime interval="600000" begin="43200000" end="432000000" /><!-- 10m, 12h, 5d -->

<downtime begin="432000000" delete="true" /><!-- anything after 5 days delete -->

</package>

<monitor service="BarCentral" class-name="org.opennms.netmgt.poller.monitors.CiscoPingMibMonitor" />

1.1.6. CitrixMonitor

This monitor is used to test if a Citrix® Server or XenApp Server® is providing the ICA protocol on TCP 1494.

The monitor opens a TCP socket and tests whether the returned greeting banner contains ICA; otherwise the service is unavailable.
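The check can be approximated in a few lines of Python. This is a sketch under assumptions, not the CitrixMonitor implementation: the helper names are hypothetical, the banner test is a simple substring match, and retry is interpreted here as the number of additional attempts after the first one.

```python
import socket


def banner_has_ica(banner):
    """Return True if the greeting banner bytes contain the text ICA."""
    return b"ICA" in banner


def check_ica(host, port=1494, retry=2, timeout_ms=5000):
    """Connect, read the greeting banner and look for ICA (sketch)."""
    timeout_s = timeout_ms / 1000.0
    for _ in range(retry + 1):
        try:
            with socket.create_connection((host, port), timeout=timeout_s) as conn:
                conn.settimeout(timeout_s)
                if banner_has_ica(conn.recv(128)):
                    return True
        except OSError:
            # Connection refused, timeout and similar errors: try again.
            continue
    return False
```

Calling `check_ica("citrix.example.org")` (a placeholder host name) would return True only if a banner containing ICA arrives within the timeout on one of the attempts.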

Monitor facts

| Class Name | org.opennms.netmgt.poller.monitors.CitrixMonitor |
| Remote Enabled | true |

Configuration and Usage

| Parameter | Description | Required | Default value |
|---|---|---|---|
| retry | Number of attempts to open a connection and get the greeting banner before the service goes down | optional | |
| timeout | Time to wait for the greeting banner | optional | |
| | TCP port where ICA is listening. | optional | |

| If you have configured the Metaframe Presentation Server Client to use Session Reliability, the TCP port is 2598 instead of 1494. You can find additional information in CTX104147. It has not been verified whether the monitor works in this case. |

Examples

The following example configures OpenNMS to monitor the ICA protocol on TCP 1494 with 2 retries and waiting 5 seconds for each retry.

<service name="Citrix-TCP-ICA" interval="300000" user-defined="false" status="on">

<parameter key="retry" value="2" />

<parameter key="timeout" value="5000" />

</service>

<monitor service="Citrix-TCP-ICA" class-name="org.opennms.netmgt.poller.monitors.CitrixMonitor" />

1.1.7. DhcpMonitor

This monitor is used to monitor the availability and functionality of DHCP servers.

This monitor has two parts: the monitor class DhcpMonitor, which is executed by Pollerd, and a background daemon, Dhcpd, which runs inside the OpenNMS JVM and listens for DHCP responses.

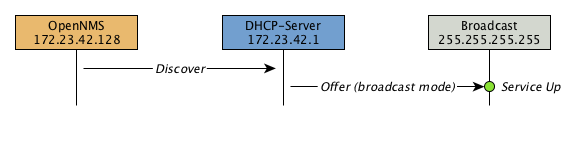

A DHCP server is tested by sending a DISCOVER message.

If the DHCP server responds with an OFFER the service is marked as up.

The Dhcpd background daemon is disabled by default and has to be activated in 'service-configuration.xml' in OpenNMS by setting service enabled="true".

The behavior for testing the DHCP server can be modified in the 'dhcp-configuration.xml' configuration file.

You must install the opennms-plugin-protocol-dhcp package before you can use this feature.

|

{apt-get,yum} install opennms-plugin-protocol-dhcp

If you try to start OpenNMS without the opennms-plugin-protocol-dhcp you will see the following error message in 'output.log':

An error occurred while attempting to start the "OpenNMS:Name=Dhcpd" service (class org.opennms.netmgt.dhcpd.jmx.Dhcpd). Shutting down and exiting. java.lang.ClassNotFoundException: org.opennms.netmgt.dhcpd.jmx.Dhcpd

Make sure no DHCP client is running on the OpenNMS server and using port UDP/68.

If UDP/68 is already in use, you will find an error message in the manager.log.

You can test if a process is listening on udp/68 with sudo ss -lnpu sport = :68.

|

Monitor facts

Class Name |

|

Remote Enabled |

false |

| Parameter | Description | Required | Default value |

|---|---|---|---|

|

Number of retries before the service is marked as down |

optional |

|

|

Time in milliseconds to wait for the DHCP response from the server |

optional |

|

|

The location to write RRD data. Generally, you will not want to change this from default |

optional |

|

|

The name of the RRD file to write (minus the extension, .rrd or .jrb) |

optional |

|

|

The name used to reference this particular data source in the RRD file |

optional |

|

Dhcpd configuration

Parameter |

Description |

Required |

Default value |

|

Defines the port your DHCP server is using |

required |

|

|

The MAC address which OpenNMS uses for a DHCP request |

required |

|

|

This parameter will usually be set to the IP address of the OpenNMS server,

which puts the DHCP poller in |

required |

|

|

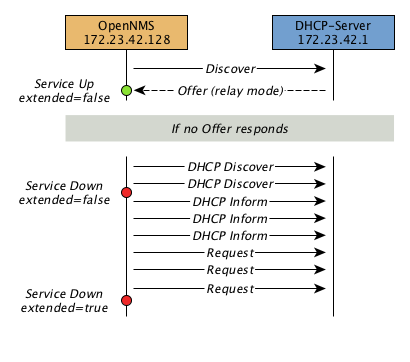

When extendedMode is false, the DHCP poller will send a DISCOVER and expect an

OFFER in return. When extendedMode is true, the DHCP poller will first send a

DISCOVER. If no valid response is received it will send an INFORM. If no valid

response is received it will then send a REQUEST. OFFER, ACK, and NAK are all

considered valid responses in extendedMode.

Usage: |

required |

|

|

This parameter only applies to REQUEST queries sent to the DHCP server when

extendedMode is true. If an IP address is specified, that IP address will be

requested in the query. If |

required |

|
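The difference between normal and extended mode described in the table above can be sketched as follows. This is a simplified model, not the Dhcpd implementation: `messages_to_try`, `is_valid_response`, and `poll` are hypothetical names, and `send` stands in for whatever actually transmits a DHCP message and returns the type of the reply (or `None` if nothing valid arrives).

```python
VALID_NORMAL = {"OFFER"}
VALID_EXTENDED = {"OFFER", "ACK", "NAK"}


def messages_to_try(extended_mode):
    """Message types sent, in order, until a valid response is received."""
    if extended_mode:
        return ["DISCOVER", "INFORM", "REQUEST"]
    return ["DISCOVER"]


def is_valid_response(response_type, extended_mode):
    """OFFER is always valid; ACK and NAK are valid only in extended mode."""
    valid = VALID_EXTENDED if extended_mode else VALID_NORMAL
    return response_type in valid


def poll(send, extended_mode):
    """Return True as soon as one message draws a valid response.

    'send' is a hypothetical callable: send(message_type) -> response type or None.
    """
    for message in messages_to_try(extended_mode):
        response = send(message)
        if response is not None and is_valid_response(response, extended_mode):
            return True
    return False
```

For example, a server that ignores DISCOVER but answers INFORM with an ACK would be reported as up only when `extended_mode` is true.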

Example testing DHCP server in the same subnet

The following example shows how to configure the monitor in 'poller-configuration.xml'. The monitor sends at most 3 DISCOVER messages and waits 3 seconds each time for the DHCP server's OFFER message.

<service name="DHCP" interval="300000" user-defined="false" status="on">

<parameter key="retry" value="2" />

<parameter key="timeout" value="3000" />

<parameter key="rrd-repository" value="/opt/opennms/share/rrd/response" />

<parameter key="rrd-base-name" value="dhcp" />

<parameter key="ds-name" value="dhcp" />

</service>

<monitor service="DHCP" class-name="org.opennms.protocols.dhcp.monitor.DhcpMonitor"/>

<service enabled="true">

<name>OpenNMS:Name=Dhcpd</name>

<class-name>org.opennms.netmgt.dhcpd.jmx.Dhcpd</class-name>

<invoke method="start" pass="1" at="start"/>

<invoke method="status" pass="0" at="status"/>

<invoke method="stop" pass="0" at="stop"/>

</service>

<DhcpdConfiguration

port="5818"

macAddress="00:06:0D:BE:9C:B2"

myIpAddress="broadcast"

extendedMode="false"

requestIpAddress="127.0.0.1">

</DhcpdConfiguration>

Example testing DHCP server in a different subnet in extended mode

You can use the same monitor in 'poller-configuration.xml' as in the example above.

myIpAddress.

<DhcpdConfiguration

port="5818"

macAddress="00:06:0D:BE:9C:B2"

myIpAddress="10.4.1.234"

extendedMode="true"

requestIpAddress="targetSubnet">

</DhcpdConfiguration>

In extendedMode, the time required to complete the poll for an unresponsive node increases by a factor of 3.

It is therefore a good idea to keep the number of retries small.

|
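To see why a small retry count matters in extended mode, the worst-case poll time can be estimated with a short calculation. This is a back-of-the-envelope sketch, not code from OpenNMS: the function name is hypothetical, and it assumes that `retry` extra attempts follow the first one and that each of the three extended-mode messages waits for the full timeout.

```python
def worst_case_poll_ms(timeout_ms, retry, extended_mode):
    """Estimate how long a poll of an unresponsive DHCP server may take."""
    attempts = retry + 1                       # assumption: first try plus 'retry' retries
    messages_per_attempt = 3 if extended_mode else 1
    return attempts * messages_per_attempt * timeout_ms
```

With the example values `timeout=3000` and `retry=2`, this estimate gives 9 seconds in normal mode and 27 seconds in extended mode.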

1.1.8. DiskUsageMonitor

The DiskUsageMonitor monitor can be used to test the amount of free space available on certain storages of a node.

The monitor gets information about the available free storage space by inspecting the hrStorageTable of the HOST-RESOURCES-MIB.

A storage’s description (as found in the corresponding hrStorageDescr object) must match the criteria specified by the disk and match-type parameters to be monitored.

A storage’s available free space is calculated using the corresponding hrStorageSize and hrStorageUsed objects.

This monitor uses SNMP to accomplish its work. Therefore systems against which it is to be used must have an SNMP agent supporting the HOST-RESOURCES-MIB installed and configured. Most modern SNMP agents, including most distributions of the Net-SNMP agent and the SNMP service that ships with Microsoft Windows, support this MIB. Out-of-box support for HOST-RESOURCES-MIB among commercial Unix operating systems may be somewhat spotty.
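The matching and free-space calculation described above can be illustrated with a small Python sketch. It is not the monitor's code: the function names are hypothetical, the rows are assumed to have already been fetched from the hrStorageTable, and of the match types shown only `endsWith` appears in this section's example (the others are assumptions).

```python
import re


def description_matches(descr, disk, match_type):
    """Compare hrStorageDescr against the 'disk' pattern."""
    if match_type == "endsWith":
        return descr.endswith(disk)
    if match_type == "startsWith":   # assumed match type
        return descr.startswith(disk)
    if match_type == "exact":        # assumed match type
        return descr == disk
    if match_type == "regex":        # assumed match type
        return re.search(disk, descr) is not None
    raise ValueError("unknown match-type: " + match_type)


def free_percent(hr_storage_size, hr_storage_used):
    """Free space as a percentage of the reported capacity."""
    if hr_storage_size <= 0:
        return 0.0
    return 100.0 * (hr_storage_size - hr_storage_used) / hr_storage_size


def storage_ok(descr, size, used, disk, match_type, free):
    """A storage that does not match is ignored; a matching one needs enough free space."""
    if not description_matches(descr, disk, match_type):
        return True
    return free_percent(size, used) >= free
```

Because hrStorageSize and hrStorageUsed are expressed in the same allocation units, the percentage can be computed without converting to bytes.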

Monitor facts

Class Name |

|

Remote Enabled |

false, relies on SNMP configuration. |

Configuration and Usage

| Parameter | Description | Required | Default value |

|---|---|---|---|

|

A pattern that a storage’s description (hrStorageDescr) must match to be taken into account. |

required |

|

|

The minimum amount of free space that storages matching the criteria must have available. This parameter is evaluated as a percent of the storage’s reported maximum capacity. |

optional |

|

|

The way how the pattern specified by the |

optional |

|

|

Destination port where the SNMP requests shall be sent. |

optional |

from snmp-config.xml |

|

Deprecated.

Same as |

optional |

|

|

Number of polls to attempt. |

optional |

|

|

Timeout in milliseconds for retrieving the values. |

optional |

|

Examples

<!-- Make sure there's at least 5% of free space available on storages ending with "/home" -->

<service name="DiskUsage-home" interval="300000" user-defined="false" status="on">

<parameter key="timeout" value="3000" />

<parameter key="retry" value="2" />

<parameter key="disk" value="/home" />

<parameter key="match-type" value="endsWith" />

<parameter key="free" value="5" />

</service>

<monitor service="DiskUsage-home" class-name="org.opennms.netmgt.poller.monitors.DiskUsageMonitor" />

DiskUsageMonitor vs thresholds

Storages' available free space can also be monitored using thresholds if you are already collecting these data.

1.1.9. DnsMonitor

This monitor is built to test the availability of the DNS service on remote IP interfaces. It tests service availability by sending a DNS query for an A resource record to the DNS server under test.

The service is marked as up if the DNS server sends a valid response to the monitor. For lookups that return multiple records, it is possible to test whether the number of responses is within a given boundary.
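The up/down decision described above can be sketched as a pure function. This is an illustration only: the function name is hypothetical, the arguments mirror the `fatal-response-code`, `min-answers`, and `max-answers` parameters used in this section's example, and the DNS query itself is assumed to have been performed already.

```python
def dns_service_up(rcode, answer_count, fatal_codes=(), min_answers=None, max_answers=None):
    """Decide service status from a DNS response.

    rcode        -- numeric DNS response code (for example 2 is SERVFAIL)
    answer_count -- number of records in the answer section
    """
    if rcode in fatal_codes:
        return False
    if min_answers is not None and answer_count < min_answers:
        return False
    if max_answers is not None and answer_count > max_answers:
        return False
    return True
```

With `fatal_codes=(2,)`, `min_answers=1`, and `max_answers=10`, a NOERROR response carrying five A records counts as up, while an empty answer section or a SERVFAIL counts as down.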

The monitor can be simulated with the command line tool host:

~ % host -v -t a www.google.com 8.8.8.8

Trying "www.google.com"

Using domain server:

Name: 8.8.8.8

Address: 8.8.8.8#53

Aliases:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 9324

;; flags: qr rd ra; QUERY: 1, ANSWER: 5, AUTHORITY: 0, ADDITIONAL: 0

;; QUESTION SECTION:

;www.google.com. IN A

;; ANSWER SECTION:

www.google.com. 283 IN A 74.125.232.17

www.google.com. 283 IN A 74.125.232.20

www.google.com. 283 IN A 74.125.232.19

www.google.com. 283 IN A 74.125.232.16

www.google.com. 283 IN A 74.125.232.18

Received 112 bytes from 8.8.8.8#53 in 41 ms

TIP: This monitor is intended for testing the availability of a DNS service. If you want to monitor the DNS resolution of some of your nodes from a client’s perspective, please use the DNSResolutionMonitor.

Monitor facts

Class Name |

|

Remote Enabled |

true |

Configuration and Usage

| Parameter | Description | Required | Default value |

|---|---|---|---|

|

Number of retries before the service is marked as down |

optional |

|

|

Time in milliseconds to wait for the A Record response from the server |

optional |

|

|

UDP Port for the DNS server |

optional |

|

|

DNS A Record for lookup test |

optional |

|

|

A comma-separated list of numeric DNS response codes that will be considered fatal if

present in the server’s response. Default value is |

optional |

|

|

Minimal number of records in the DNS server response for the given lookup |

optional |

|

|

Maximal number of records in the DNS server response for the given lookup |

optional |

|

Examples

The following example shows how to monitor whether the IP interface of a given DNS server resolves a DNS request.

This service should be bound to a DNS server that is able to give a valid DNS response for the DNS request www.google.com.

The service is up if the DNS server gives between 1 and 10 A record responses.

<service name="DNS-www.google.com" interval="300000" user-defined="false" status="on">

<parameter key="lookup" value="www.google.com" />

<parameter key="fatal-response-code" value="2" />

<parameter key="min-answers" value="1" />

<parameter key="max-answers" value="10" />

</service>

<monitor service="DNS-www.google.com" class-name="org.opennms.netmgt.poller.monitors.DnsMonitor" />

1.1.10. DNSResolutionMonitor

The DNS resolution monitor tests whether the node label of an OpenNMS node can be resolved. This monitor uses the name resolver configuration of the operating system on which OpenNMS is running. It can be used to test client behavior for a given host name. For example: create a node with the node label www.google.com and an IP interface. Assigning the DNS resolution monitor to the IP interface will test whether www.google.com can be resolved using the DNS configuration of the underlying operating system. The response from the A record lookup can be any address; it is not compared with the IP address of the OpenNMS IP interface to which the monitor is assigned.
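The following Python sketch shows one way to reproduce this kind of check with the operating system's resolver. It is not the monitor's implementation: `lookup` and `resolution_ok` are hypothetical names, and the mode values `v4`, `v6`, `both`, and `either` are taken from the `resolution-type` values used in this section's examples.

```python
import socket


def lookup(label):
    """Ask the OS resolver whether the label has IPv4 and IPv6 addresses."""
    def has_address(family):
        try:
            return bool(socket.getaddrinfo(label, None, family))
        except socket.gaierror:
            return False
    return has_address(socket.AF_INET), has_address(socket.AF_INET6)


def resolution_ok(has_a, has_aaaa, resolution_type):
    """Combine the two lookup results according to the resolution type."""
    if resolution_type == "v4":
        return has_a
    if resolution_type == "v6":
        return has_aaaa
    if resolution_type == "both":
        return has_a and has_aaaa
    if resolution_type == "either":
        return has_a or has_aaaa
    raise ValueError("unknown resolution-type: " + resolution_type)
```

A poll for a node labelled www.google.com could then be approximated as `resolution_ok(*lookup("www.google.com"), "either")`. Note that the addresses returned are never compared with the interface address, in line with the description above.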

Monitor facts

Class Name |

|

Remote Enabled |

true |

Configuration and Usage

| Parameter | Description | Required | Default value |

|---|---|---|---|

|

Type of record for the node label test. |

optional |

|

|

Number of attempts to resolve the node label before the service goes down |

required |

|

|

Time to wait for an A and/or AAAA record from the system-configured DNS server before starting the next attempt. |

required |

|

Examples

The following example shows the options for monitoring IPv4 and/or IPv6 in the service configuration:

<!-- Assigned service test if the node label is resolved for an A record -->

<service name="DNS-Resolution-v4" interval="300000" user-defined="false" status="on">

<parameter key="retry" value="2"/>

<parameter key="timeout" value="2000"/>

<parameter key="resolution-type" value="v4"/>

<parameter key="rrd-repository" value="/opt/opennms/share/rrd/response"/>

<parameter key="rrd-base-name" value="dns-res-v4"/>

<parameter key="ds-name" value="dns-res-v4"/>

</service>

<!-- Assigned service test if the node label is resolved for an AAAA record -->

<service name="DNS-Resolution-v6" interval="300000" user-defined="false" status="on">

<parameter key="retry" value="2"/>

<parameter key="timeout" value="2000"/>

<parameter key="resolution-type" value="v6"/>

<parameter key="rrd-repository" value="/opt/opennms/share/rrd/response"/>

<parameter key="rrd-base-name" value="dns-res-v6"/>

<parameter key="ds-name" value="dns-res-v6"/>

</service>

<!-- Assigned service test if the node label is resolved for an AAAA record AND A record -->

<service name="DNS-Resolution-v4-and-v6" interval="300000" user-defined="false" status="on">

<parameter key="retry" value="2"/>

<parameter key="timeout" value="2000"/>

<parameter key="resolution-type" value="both"/>

<parameter key="rrd-repository" value="/opt/opennms/share/rrd/response"/>

<parameter key="rrd-base-name" value="dns-res-both"/>

<parameter key="ds-name" value="dns-res-both"/>

</service>

<!-- Assigned service test if the node label is resolved for an AAAA record OR A record -->

<service name="DNS-Resolution-v4-or-v6" interval="300000" user-defined="false" status="on">

<parameter key="retry" value="2"/>

<parameter key="timeout" value="2000"/>

<parameter key="resolution-type" value="either"/>

<parameter key="rrd-repository" value="/opt/opennms/share/rrd/response"/>

<parameter key="rrd-base-name" value="dns-res-either"/>

<parameter key="ds-name" value="dns-res-either"/>

</service>

<monitor service="DNS-Resolution-v4" class-name="org.opennms.netmgt.poller.monitors.DNSResolutionMonitor" />

<monitor service="DNS-Resolution-v6" class-name="org.opennms.netmgt.poller.monitors.DNSResolutionMonitor" />

<monitor service="DNS-Resolution-v4-and-v6" class-name="org.opennms.netmgt.poller.monitors.DNSResolutionMonitor" />

<monitor service="DNS-Resolution-v4-or-v6" class-name="org.opennms.netmgt.poller.monitors.DNSResolutionMonitor" />

To have response time graphs for the name resolution you have to configure RRD graphs for the given ds-names (dns-res-v4, dns-res-v6, dns-res-both, dns-res-either) in '$OPENNMS_HOME/etc/response-graph.properties'.

DNSResolutionMonitor vs DnsMonitor

The DNSResolutionMonitor is used to measure the availability of name resolution, and to record its outages, from a client perspective. The service is mainly used for websites or similar publicly available resources. It can be used in combination with the Page Sequence Monitor to give a hint if a website isn’t available for DNS reasons.

The DnsMonitor, on the other hand, is a test against a specific DNS server. In OpenNMS the DNS server is the node, and the DnsMonitor sends a lookup request for a given A record to the DNS server's IP address. The service goes down if the DNS server doesn’t have a valid A record in its zone database or has some other issue resolving A records.

1.1.11. FtpMonitor

The FtpMonitor is able to validate FTP connection and login processes. The monitor can test FTP servers on multiple ports and with specific login data.

The service using the FtpMonitor is up if the FTP server responds with return codes between 200 and 299. For special cases the service is also marked as up for 425 and 530.
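The acceptance rule for return codes can be written down as a small Python sketch. It only models the rule stated above; the function names are hypothetical, and the parsing assumes a standard FTP reply line that begins with a three-digit code.

```python
def parse_reply_code(reply_line):
    """Extract the three-digit code from an FTP reply such as '220 Service ready'."""
    return int(reply_line[:3])


def ftp_reply_is_up(code):
    """Codes 200 to 299 are accepted, plus the special cases 425 and 530."""
    return 200 <= code <= 299 or code in (425, 530)
```

For example, `ftp_reply_is_up(parse_reply_code("530 QUIT : User not logged in."))` evaluates to `True`, while a 421 reply would be treated as a failure.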

Monitor facts

Class Name |

|

Remote Enabled |

|

Configuration and Usage

| Parameter | Description | Required | Default value |

|---|---|---|---|

|

Number of attempts to get a valid FTP response/response-text |

optional |

|

|

Timeout in milliseconds for TCP connection establishment. |

optional |

|

|

A list of TCP ports to which connection shall be tried. |

optional |

|

|

This parameter is meant to be used together with the |

optional |

|

|

This parameter is meant to be used together with the |

optional |

|

Examples

The following examples show how to configure the monitor in 'poller-configuration.xml':

<service name="FTP" interval="300000" user-defined="false" status="on">

<parameter key="retry" value="1"/>

<parameter key="timeout" value="3000"/>

<parameter key="port" value="21"/>

<parameter key="userid" value=""/>

<parameter key="password" value=""/>

</service>

<service name="FTP-Customer" interval="300000" user-defined="false" status="on">

<parameter key="retry" value="1"/>

<parameter key="timeout" value="3000"/>

<parameter key="port" value="21"/>

<parameter key="userid" value="Customer"/>

<parameter key="password" value="MySecretPassword"/>

</service>

<monitor service="FTP" class-name="org.opennms.netmgt.poller.monitors.FtpMonitor"/>

<monitor service="FTP-Customer" class-name="org.opennms.netmgt.poller.monitors.FtpMonitor"/>

Hint

Comment from FtpMonitor source

Also want to accept the following ERROR message generated by some FTP servers following a QUIT command without a previous successful login: "530 QUIT : User not logged in. Please login with USER and PASS first."

Also want to accept the following ERROR message generated by some FTP servers following a QUIT command without a previously successful login: "425 Session is disconnected."

See also: http://tools.ietf.org/html/rfc959

1.1.12. HostResourceSwRunMonitor

This monitor tests the running state of one or more processes. It does this via SNMP by inspecting the hrSwRunTable of the HOST-RESOURCES-MIB. The test matches a given process name against hrSwRunName and checks the numeric value of the corresponding hrSwRunStatus.

This monitor uses SNMP to accomplish its work. Therefore systems against which it is to be used must have an SNMP agent installed and configured. Furthermore, the SNMP agent on the system must support the HOST-RESOURCES-MIB. Most modern SNMP agents, including most distributions of the Net-SNMP agent and the SNMP service that ships with Microsoft Windows, support this MIB. Out-of-box support for HOST-RESOURCES-MIB among commercial Unix operating systems may be somewhat spotty.

Monitor facts

Class Name |

|

Remote Enabled |

false |

Configuration and Usage

| Parameter | Description | Required | Default value |

|---|---|---|---|

|

The port of the SNMP agent of the server to test. |

optional |

|

|

Number of attempts to get a valid response before marking the service as down. |

optional |

|

|

Timeout in milliseconds to wait for the SNMP response containing the process run state from the agent. |

optional |

|

|

The name of the process to be monitored. This parameter’s value is case-sensitive and is evaluated as an exact match. |

required |

|

|

If the process name appears multiple times in the hrSwRunTable, and this parameter is set to

|

optional |

|

|

The maximum allowable value of hrSwRunStatus among |

optional |

|

|

The numeric object identifier (OID) from which process names are queried. Defaults to

hrSwRunName and should never be changed under normal

circumstances. That said, changing it to hrSwRunParameters ( |

optional |

|

|

The numeric object identifier (OID) from which run status is queried. Defaults to hrSwRunStatus and should never be changed under normal circumstances. |

optional |

|

Examples

The following example shows how to monitor the process called httpd running on a server using this monitor. The configuration in 'poller-configuration.xml' has to be defined as follows:

<service name="Process-httpd" interval="300000" user-defined="false" status="on">

<parameter key="retry" value="3"/>

<parameter key="timeout" value="3000"/>

<parameter key="service-name" value="httpd"/>(1)

<parameter key="run-level" value="3"/>(2)

<parameter key="match-all" value="true"/>(3)

</service>

<monitor service="Process-httpd" class-name="org.opennms.netmgt.poller.monitors.HostResourceSwRunMonitor"/>

| 1 | Name of the process on the system |

| 2 | Test whether the process is in a valid state, i.e. has a run-level no higher than notRunnable(3) |

| 3 | If the httpd process runs multiple times, the test is done for each instance of the process. |
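The interaction of `service-name`, `run-level`, and `match-all` can be sketched in Python. This is a simplified model rather than the monitor's code: the function name is hypothetical, the rows are assumed to be `(hrSwRunName, hrSwRunStatus)` pairs already read via SNMP, and the behaviour when `match-all` is false (one passing instance is enough) is an assumption.

```python
# hrSwRunStatus values as defined in HOST-RESOURCES-MIB
HR_SW_RUN_STATUS = {1: "running", 2: "runnable", 3: "notRunnable", 4: "invalid"}


def process_up(rows, service_name, run_level, match_all):
    """Evaluate the process state from hrSwRunTable rows.

    rows -- iterable of (name, status) pairs
    """
    # Names are compared case-sensitively and as an exact match.
    statuses = [status for name, status in rows if name == service_name]
    if not statuses:
        return False  # process not found in the table
    passed = [status <= run_level for status in statuses]
    return all(passed) if match_all else any(passed)
```

With `run_level=3` and `match_all=True`, as in the example above, a single httpd instance in state invalid(4) is enough to mark the service as down.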

1.1.13. HttpMonitor

The HTTP monitor tests the response of an HTTP server to a specific HTTP 'GET' command. During the poll, an attempt is made to connect on the specified port(s). The monitor can test web servers on multiple ports. By default, the test is made against ports 80, 8080 and 8888. If the connection request is successful, an HTTP 'GET' command is sent to the interface. The response is parsed and a return code extracted and verified.
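How a return code and response body might be verified can be sketched as follows. The sketch is illustrative only: the function names are hypothetical, the range syntax follows the `response` values used in this section's examples (such as `200-202,299`), and treating a leading `~` in `response-text` as a regular expression searched in each line is an assumption based on the example value `~.*[Cc]onsulting.*`.

```python
import re


def parse_response_ranges(spec):
    """Turn '200-202,299' into [(200, 202), (299, 299)]."""
    ranges = []
    for part in spec.split(","):
        low, sep, high = part.strip().partition("-")
        ranges.append((int(low), int(high) if sep else int(low)))
    return ranges


def code_accepted(code, spec):
    """True if the HTTP return code falls inside any configured range."""
    return any(low <= code <= high for low, high in parse_response_ranges(spec))


def text_matches(body, response_text):
    """Check every line of the body; the first matching line is a success."""
    lines = body.splitlines()
    if response_text.startswith("~"):
        pattern = re.compile(response_text[1:])
        return any(pattern.search(line) for line in lines)
    return any(response_text in line for line in lines)
```

For instance, `code_accepted(201, "200-202,299")` is true, while `code_accepted(203, "200-202,299")` is false.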

Monitor facts

Class Name |

|

Remote Enabled |

true |

Configuration and Usage

| Parameter | Description | Required | Default value |

|---|---|---|---|

|

Authentication credentials to perform basic authentication. |

optional |

|

|

Additional headers to be sent along with the request. |

optional |

|

|

Specify the Host header’s value. |

optional |

|

|

If the |

optional |

|

|

This parameter is meant to be used together with the |

optional |

|

|

A list of TCP ports to which connection shall be tried. |

optional |

|

|

Number of attempts to get a valid HTTP response/response-text |

optional |

|

|

If the |

optional |

|

|

A comma-separated list of acceptable HTTP response code ranges.

Example: |

optional |

If the |

|

Text to look for in the response body. This will be matched against every line, and it |

optional |

|

|

Timeout in milliseconds for TCP connection establishment. |

optional |

|

|

URL to be retrieved via the HTTP 'GET' command |

optional |

|

|

This parameter is meant to be used together with the |

optional |

|

|

Allows you to set the User-Agent HTTP header (see also RFC2616 section 14.43). |

optional |

|

|

When set to true, full communication between client and the webserver will be logged |

optional |

|

Examples

<!-- Test HTTP service on port 80 only -->

<service name="HTTP" interval="300000" user-defined="false" status="on">

<parameter key="retry" value="2"/>

<parameter key="timeout" value="3000"/>

<parameter key="port" value="80"/>

<parameter key="url" value="/"/>

</service>

<!-- Test for virtual host opennms.com running -->

<service name="OpenNMSdotCom" interval="300000" user-defined="false" status="on">

<parameter key="retry" value="1"/>

<parameter key="timeout" value="3000"/>

<parameter key="port" value="80"/>

<parameter key="host-name" value="opennms.com"/>

<parameter key="url" value="/solutions"/>

<parameter key="response" value="200-202,299"/>

<parameter key="response-text" value="~.*[Cc]onsulting.*"/>

</service>

<!-- Test for instance of OpenNMS 1.2.9 running -->

<service name="OpenNMS-129" interval="300000" user-defined="false" status="on">

<parameter key="retry" value="1"/>

<parameter key="timeout" value="3000"/>

<parameter key="port" value="8080"/>

<parameter key="url" value="/opennms/event/list"/>

<parameter key="basic-authentication" value="admin:admin"/>

<parameter key="response" value="200"/>

</service>

<monitor service="HTTP" class-name="org.opennms.netmgt.poller.monitors.HttpMonitor" />

<monitor service="OpenNMSdotCom" class-name="org.opennms.netmgt.poller.monitors.HttpMonitor" />

<monitor service="OpenNMS-129" class-name="org.opennms.netmgt.poller.monitors.HttpMonitor" />

Testing filtering proxies with HttpMonitor

If you have a filtering proxy server that is supposed to allow retrieval of some URLs but deny others, you can use the HttpMonitor to verify this behavior.

Let’s say that our proxy server is running on TCP port 3128, and that we should always be able to retrieve http://www.opennms.org/ but never http://www.myspace.com/ (hey, this is a workplace after all!). To test this behaviour, one could create the following service monitors:

<service name="HTTP-Allow-opennms.org" interval="300000" user-defined="false" status="on">

<parameter key="retry" value="1"/>

<parameter key="timeout" value="3000"/>

<parameter key="port" value="3128"/>

<parameter key="url" value="http://www.opennms.org/"/>

<parameter key="response" value="200-399"/>

</service>

<service name="HTTP-Block-myspace.com" interval="300000" user-defined="false" status="on">

<parameter key="retry" value="1"/>

<parameter key="timeout" value="3000"/>

<parameter key="port" value="3128"/>

<parameter key="url" value="http://www.myspace.com/"/>

<parameter key="response" value="400-599"/>

</service>

<monitor service="HTTP-Allow-opennms.org" class-name="org.opennms.netmgt.poller.monitors.HttpMonitor"/>

<monitor service="HTTP-Block-myspace.com" class-name="org.opennms.netmgt.poller.monitors.HttpMonitor"/>

1.1.14. HttpPostMonitor

If it is required to HTTP POST any arbitrary content to a remote URI, the HttpPostMonitor can be used. A use case is to HTTP POST to a SOAP endpoint.
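The kind of request this monitor sends can be approximated with Python's standard library. The sketch below only builds the request object and does not send it. The names `build_post` and `banner_found` are hypothetical, and the `text/xml` mimetype and `utf-8` charset are taken from the example POST shown in this section rather than from a verified list of defaults.

```python
import urllib.request


def build_post(host, port, uri, payload, scheme="http",
               mimetype="text/xml", charset="utf-8"):
    """Build (but do not send) a POST request carrying the payload."""
    url = "{}://{}:{}{}".format(scheme, host, port, uri)
    return urllib.request.Request(
        url,
        data=payload.encode(charset),
        headers={"Content-Type": "{}; charset={}".format(mimetype, charset)},
        method="POST",
    )


def banner_found(response_body, banner):
    """Plain substring check of the banner against the response body."""
    return banner in response_body
```

Passing the result to `urllib.request.urlopen` with a timeout would perform the actual POST; the response body could then be checked with `banner_found`.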

Monitor facts

Class Name |

|

Remote Enabled |

|

Configuration and Usage

| Parameter | Description | Required | Default value |

|---|---|---|---|

|

The body of the POST, for example properly escaped XML. |

required |

|

|

The password to use for HTTP BASIC auth. |

optional |

|

|

The username to use for HTTP BASIC auth. |

optional |

|

|

A string that is matched against the response of the HTTP POST.

If the output contains the banner, the service is determined as up.

Specify a regex by starting with |

optional |

|

|

Set the character set for the POST. |

optional |

|

|

Set the mimetype for the POST. |

optional |

|

|

The port of the web server to which the POST is sent. |

optional |

|

|

The connection scheme to use. |

optional |

|

|

Enables or disables the SSL certificate validation. |

optional |

|

|

The uri to use during the POST. |

optional |

|

Examples

The following example would create a POST that contains the payload World.

<service name="MyServlet" interval="300000" user-defined="false" status="on">

<parameter key="banner" value="Hello"/>

<parameter key="port" value="8080"/>

<parameter key="uri" value="/MyServlet"/>

<parameter key="payload" value="World"/>

<parameter key="retry" value="1"/>

<parameter key="timeout" value="30000"/>

</service>

<monitor service="MyServlet" class-name="org.opennms.netmgt.poller.monitors.HttpPostMonitor"/>

The resulting POST looks like this:

POST /MyServlet HTTP/1.1

Content-Type: text/xml; charset=utf-8

Host: <ip_addr_of_interface>:8080

Connection: Keep-Alive

World

1.1.15. HttpsMonitor

The HTTPS monitor tests the response of an SSL-enabled HTTP server. The HTTPS monitor is an SSL-enabled extension of the HTTP monitor with a default TCP port value of 443. All HttpMonitor parameters apply, so please refer to HttpMonitor’s documentation for more information.

Monitor facts

Class Name |

|

Remote Enabled |

true |

Configuration and Usage

| Parameter | Description | Required | Default value |

|---|---|---|---|

|

A list of TCP ports to which connection shall be tried. |

optional |

|

Examples

<!-- Test HTTPS service on port 8443 -->

<service name="HTTPS" interval="300000" user-defined="false" status="on">

<parameter key="retry" value="2"/>

<parameter key="timeout" value="3000"/>

<parameter key="port" value="8443"/>

<parameter key="url" value="/"/>

</service>

<monitor service="HTTPS" class-name="org.opennms.netmgt.poller.monitors.HttpsMonitor" />

1.1.16. IcmpMonitor

The ICMP monitor tests for ICMP service availability by sending echo request ICMP messages. The service is considered available when the node sends back an echo reply ICMP message within the specified amount of time.

Monitor facts

Class Name |

|

Remote Enabled |

true with some restrictions (see below) |

Configuration and Usage

| Parameter | Description | Required | Default value |

|---|---|---|---|

|

Number of bytes of the ICMP packet to send. |

optional |

|

|

Number of attempts to get a response. |

optional |

|

|

Time in milliseconds to wait for a response. |

optional |

|

Examples

<service name="ICMP" interval="300000" user-defined="false" status="on">

<parameter key="retry" value="2"/>

<parameter key="timeout" value="3000"/>

<parameter key="rrd-repository" value="/var/lib/opennms/rrd/response"/>

<parameter key="rrd-base-name" value="icmp"/>

<parameter key="ds-name" value="icmp"/>

</service>

<monitor service="ICMP" class-name="org.opennms.netmgt.poller.monitors.IcmpMonitor"/>

Note on Remote Poller

The IcmpMonitor needs the JNA ICMP implementation to function on a remote poller. However, corner cases exist where the IcmpMonitor monitor won’t work on a remote poller. Examples of such corner cases are Windows when the remote poller isn’t running as administrator, and Linux on ARM / Raspberry Pi. JNA is the default ICMP implementation used in the remote poller.

1.1.17. ImapMonitor

This monitor checks if an IMAP server is functional. The test is done by initializing a very simple IMAP conversation. The ImapMonitor establishes a TCP connection, sends a logout command and tests the IMAP server's responses.

The behavior can be simulated with telnet:

telnet mail.myserver.de 143

Trying 62.108.41.197...

Connected to mail.myserver.de.

Escape character is '^]'.

* OK [CAPABILITY IMAP4rev1 LITERAL+ SASL-IR LOGIN-REFERRALS ID ENABLE IDLE STARTTLS LOGINDISABLED] Dovecot ready. (1)

ONMSPOLLER LOGOUT (2)

* BYE Logging out (3)

ONMSPOLLER OK Logout completed.

Connection closed by foreign host.

| 1 | Test the IMAP server banner; it has to start with * OK for the service to be up |

| 2 | Send an ONMSPOLLER LOGOUT command |

| 3 | Test the server response; it has to start with * BYE for the service to be up |

If one of the tests in the sample above fails the service is marked down.
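The same conversation can be scripted instead of typed into telnet. The following Python sketch mirrors the three steps above under stated assumptions: the function names are hypothetical, port 143 comes from the example configuration, and the code is not taken from the ImapMonitor source.

```python
import socket


def imap_conversation_ok(banner, logout_reply):
    """Both checks from the telnet session: '* OK' banner and '* BYE' reply."""
    return banner.startswith("* OK") and logout_reply.startswith("* BYE")


def poll_imap(host, port=143, timeout_s=3.0):
    """Connect, read the banner, send LOGOUT, and read the first reply line."""
    try:
        with socket.create_connection((host, port), timeout=timeout_s) as sock:
            reader = sock.makefile("rb")
            banner = reader.readline().decode("ascii", "replace")
            sock.sendall(b"ONMSPOLLER LOGOUT\r\n")
            reply = reader.readline().decode("ascii", "replace")
            return imap_conversation_ok(banner, reply)
    except OSError:
        # Refused connection or timeout counts as a failed poll.
        return False
```

A retry loop around `poll_imap` would be needed to model the retry parameter; it is left out to keep the sketch short.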

Monitor facts

Class Name |

|

Remote Enabled |

false |

Configuration and Usage

| Parameter | Description | Required | Default value |

|---|---|---|---|

|

Number of attempts to get a valid IMAP response |

optional |

|

|

Time in milliseconds to wait retrieving the banner from TCP connection before trying a next attempt. |

optional |

|

|

The port of the IMAP server. |

optional |

|

Examples

The following example shows how to configure the monitor in the poller-configuration.xml:

<!-- Test IMAP service on port 143 only -->

<service name="IMAP" interval="300000" user-defined="false" status="on">

<parameter key="retry" value="1"/>

<parameter key="port" value="143"/>

<parameter key="timeout" value="3000"/>

</service>

<monitor service="IMAP" class-name="org.opennms.netmgt.poller.monitors.ImapMonitor" />

1.1.18. JCifsMonitor

This monitor tests a file sharing service based on the CIFS/SMB protocol.

This monitor is not installed by default.

You have to install opennms-plugin-protocol-cifs from your OpenNMS installation repository.

|

With the JCifsMonitor it is possible to run tests for the following use cases:

- a share is available in the network
- a given file exists in the share
- a given folder exists in the share
- a given folder should contain at least one (1) file
- a given folder should contain no (0) files
- when testing files and folders, you can use a regular expression to exclude specific file and folder names from the test

A network resource in SMB like a file or folder is addressed as a UNC Path.

\\server\share\folder\file.txt

The Java implementation jCIFS, which implements the CIFS/SMB network protocol, uses SMB URLs to access the network resource. The same resource as in our example would look like this as an SMB URL:

smb://workgroup;user:password@server/share/folder/file.txt
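The mapping from a UNC path to an SMB URL shown above can be expressed as a small helper. This is a sketch for illustration and not part of the monitor: the function name is hypothetical, and percent-encoding the credentials and path segments is an assumption about what the URL parser expects.

```python
from urllib.parse import quote


def unc_to_smb_url(unc_path, domain="", username="", password=""):
    """Convert \\\\server\\share\\path into smb://domain;user:password@server/share/path."""
    parts = [p for p in unc_path.split("\\") if p]
    if len(parts) < 2:
        raise ValueError("UNC path needs at least a server and a share")
    server, rest = parts[0], parts[1:]
    auth = ""
    if username:
        auth = quote(username, safe="") + ":" + quote(password, safe="") + "@"
        if domain:
            auth = quote(domain, safe="") + ";" + auth
    return "smb://" + auth + server + "/" + "/".join(quote(p) for p in rest)
```

Local accounts need no domain, so `unc_to_smb_url(r"\\server\share", username="user", password="secret")` yields a URL without the `workgroup;` prefix.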

The JCifsMonitor cannot test:

- whether a file contains specific content
- a specific number of files in a folder, for example that a folder should contain exactly, more than, or fewer than x files
- age or modification time stamps of files or folders
- permissions or other attributes of files or folders

Monitor facts

Class Name |

|

Remote Enabled |

false |

Configuration and Usage

| Parameter | Description | Required | Default value |
|---|---|---|---|
| | Number of retries before the service is marked as down. | optional | |
| | Time in milliseconds to wait for the SMB service. | optional | |
| | Windows domain where the user is located. You don’t have to use the domain parameter if you use local user accounts. | optional | empty String |
| | Username to access the resource over a network | optional | empty String |
| | Password for the user | optional | empty String |
| | Path to the resource you want to test | required | empty String |
| | The test mode which has the following options | optional | |
| | Override the IP address of the SMB url to check shares on different file servers. | optional | empty String |
| | Ignore specific files in folder with regular expression. This parameter will just be applied on | optional | |

| It makes little sense to set retries higher than 1. Additional retries only waste resources during monitoring. |

| If you access shares with Mac OS X, be aware of the side effects of the hidden file '.DS_Store'. It can cause false positives in monitoring; in that case, use the folderIgnoreFiles parameter to ignore it. |
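The effect of such an ignore pattern on an "empty folder" test can be sketched in Java as follows. The sketch works on a plain list of file names instead of a real share, and it assumes that the regular expression must match the whole file name; verify how the monitor applies the expression before relying on that. `FolderIgnoreSketch` and `isEffectivelyEmpty` are hypothetical names.

```java
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;

/** Hypothetical illustration; not the JCifsMonitor implementation. */
final class FolderIgnoreSketch {

    /**
     * Returns true if every file name in the listing matches the ignore
     * pattern, so the folder counts as empty for monitoring purposes.
     * A null or empty pattern ignores nothing.
     */
    static boolean isEffectivelyEmpty(List<String> fileNames, String ignoreRegex) {
        Pattern ignore = (ignoreRegex == null || ignoreRegex.isEmpty())
                ? null
                : Pattern.compile(ignoreRegex);
        for (String name : fileNames) {
            // Assumption: the pattern has to match the complete file name.
            if (ignore == null || !ignore.matcher(name).matches()) {
                return false; // found a file that is not ignored
            }
        }
        return true;
    }

    public static void main(String[] args) {
        List<String> listing = Arrays.asList(".DS_Store");
        System.out.println(isEffectivelyEmpty(listing, null));          // false
        System.out.println(isEffectivelyEmpty(listing, "\\.DS_Store")); // true
    }
}
```

In a poller configuration the same expression would be written without Java string escaping, that is as `\.DS_Store`.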

Example test existence of a file

This example shows how to configure the JCifsMonitor to test a folder on a file share over the network. In this example we have access to a share for error logs, and we want an outage whenever the folder contains any error log file. The share is named 'log'. The service returns to normal once the error log files are deleted and the folder is empty again.

<service name="CIFS-ErrorLog" interval="30000" user-defined="true" status="on">

<parameter key="retry" value="1" />

<parameter key="timeout" value="3000" />

<parameter key="domain" value="contoso" />(1)

<parameter key="username" value="MonitoringUser" />(2)

<parameter key="password" value="MonitoringPassword" />(3)

<parameter key="path" value="/fileshare/log/" />(4)

<parameter key="mode" value="folder_empty" />(5)

</service>

<monitor service="CIFS-ErrorLog" class-name="org.opennms.netmgt.poller.monitors.JCifsMonitor" />

| 1 | Name of the SMB or Microsoft Windows Domain |
| 2 | User for accessing the share |
| 3 | Password for accessing the share |
| 4 | Path to the folder inside of the share as part of the SMB URL |
| 5 | Mode is set to folder_empty |

1.1.19. JDBCMonitor

The JDBCMonitor checks that it can connect to a database and retrieve the database catalog from that database management system (DBMS). It uses JDBC to connect to and communicate with the database.
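The kind of check described here can be sketched with plain JDBC as follows. This is a minimal illustration under stated assumptions, not the monitor's actual code: `JdbcCatalogCheckSketch` and `isAvailable` are hypothetical names, the timeout is applied through the JVM-wide `DriverManager.setLoginTimeout` (which works in whole seconds), and every `SQLException` is treated as a failed attempt.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;

/** Hypothetical illustration; not the JDBCMonitor implementation. */
final class JdbcCatalogCheckSketch {

    /**
     * Tries to connect and read the catalog list, repeating the attempt
     * up to `retries` additional times. Returns true on the first success.
     */
    static boolean isAvailable(String url, String user, String password,
                               int retries, int timeoutMs) {
        // The login timeout is global and expressed in seconds; round up.
        DriverManager.setLoginTimeout((timeoutMs + 999) / 1000);
        for (int attempt = 0; attempt <= retries; attempt++) {
            try (Connection con = DriverManager.getConnection(url, user, password);
                 ResultSet catalogs = con.getMetaData().getCatalogs()) {
                while (catalogs.next()) {
                    catalogs.getString(1); // TABLE_CAT column; the value is not used
                }
                return true;
            } catch (SQLException e) {
                // Connection or catalog retrieval failed; try the next attempt.
            }
        }
        return false;
    }

    public static void main(String[] args) {
        // Example values only; requires the PostgreSQL driver on the classpath.
        System.out.println(isAvailable(
                "jdbc:postgresql://127.0.0.1:5432/opennms", "opennms", "opennms", 1, 3000));
    }
}
```

If no driver on the classpath accepts the URL, `DriverManager.getConnection` throws an `SQLException` and the sketch reports the database as unavailable.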

Monitor facts

| Class Name | |
| Remote Enabled | true |

Configuration and Usage

| Parameter | Description | Required | Default value |
|---|---|---|---|
| | JDBC driver class to use | required | |
| | JDBC URL to connect to. | required | |
| | Database user | required | |
| | Database password | required | |
| | Timeout in ms for the database connection | optional | |
| | How many retries should be performed before failing the test | optional | |

| The string OPENNMS_JDBC_HOSTNAME in the url parameter is replaced with the IP address or resolved hostname of the interface the monitored service is assigned to. |
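As a small illustration of this substitution, the following Java sketch replaces the placeholder in a URL template with an interface address. `JdbcUrlSketch` and `resolveUrl` are hypothetical names, and the address 192.0.2.10 is an example value only.

```java
/** Hypothetical illustration of the placeholder substitution. */
final class JdbcUrlSketch {

    static final String PLACEHOLDER = "OPENNMS_JDBC_HOSTNAME";

    /** Replaces the placeholder with the address of the monitored interface. */
    static String resolveUrl(String urlTemplate, String interfaceAddress) {
        return urlTemplate.replace(PLACEHOLDER, interfaceAddress);
    }

    public static void main(String[] args) {
        // Prints jdbc:postgresql://192.0.2.10:5432/opennms
        System.out.println(resolveUrl(
                "jdbc:postgresql://OPENNMS_JDBC_HOSTNAME:5432/opennms", "192.0.2.10"));
    }
}
```

This is what lets a single service definition be reused for every interface the service is assigned to.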

Provide the database driver

The JDBCMonitor is based on JDBC and requires a JDBC driver to communicate with any database.

Because OpenNMS itself uses a PostgreSQL database, the PostgreSQL JDBC driver is available out of the box.

For all other database systems, a compatible JDBC driver has to be provided to OpenNMS as a JAR file.

To provide a JDBC driver, place the driver JAR in the opennms/lib folder of your OpenNMS installation.

To use the JDBCMonitor from a remote poller, the driver-jar has to be provided to the Remote Poller too.

This may be tricky or impossible when using the Java Webstart Remote Poller, because of code signing requirements.

Examples

The following example checks if the PostgreSQL database used by OpenNMS is available.

<service name="OpenNMS-DBMS" interval="30000" user-defined="true" status="on">

<parameter key="driver" value="org.postgresql.Driver"/>

<parameter key="url" value="jdbc:postgresql://OPENNMS_JDBC_HOSTNAME:5432/opennms"/>

<parameter key="user" value="opennms"/>

<parameter key="password" value="opennms"/>

</service>

<monitor service="OpenNMS-DBMS" class-name="org.opennms.netmgt.poller.monitors.JDBCMonitor" />

1.1.20. JDBCStoredProcedureMonitor

The JDBCStoredProcedureMonitor checks the result of a stored procedure in a remote database. The result of the stored procedure has to be a boolean value (representing true or false). The service associated with this monitor is marked as up if the stored procedure returns true and it is marked as down in all other cases. It is based on the JDBC technology to connect and communicate with the database.
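With plain JDBC, calling a function that returns a boolean could look roughly like the sketch below. It uses the standard JDBC escape syntax `{ ? = call ... }` with a `CallableStatement`. The names `StoredProcedureCheckSketch`, `buildCall` and `isUp` are hypothetical, and registering the result as `Types.BIT` is an assumption that suits some drivers; others may expect `Types.BOOLEAN`.

```java
import java.sql.CallableStatement;
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;
import java.sql.Types;

/** Hypothetical illustration; not the JDBCStoredProcedureMonitor implementation. */
final class StoredProcedureCheckSketch {

    /** Builds the JDBC escape syntax for a call that returns a value. */
    static String buildCall(String schema, String procedure) {
        String qualified = (schema == null || schema.isEmpty())
                ? procedure
                : schema + "." + procedure;
        return "{ ? = call " + qualified + "() }";
    }

    /** Returns true only if the call succeeds and the procedure returns true. */
    static boolean isUp(String url, String user, String password,
                        String schema, String procedure) {
        try (Connection con = DriverManager.getConnection(url, user, password);
             CallableStatement call = con.prepareCall(buildCall(schema, procedure))) {
            // Assumption: the driver reports boolean results as BIT.
            call.registerOutParameter(1, Types.BIT);
            call.execute();
            return call.getBoolean(1);
        } catch (SQLException e) {
            // Any database error counts as "down", like every non-true result.
            return false;
        }
    }

    public static void main(String[] args) {
        // Prints { ? = call public.eventlimit_sp() }
        System.out.println(buildCall("public", "eventlimit_sp"));
    }
}
```

Mapping every failure to `false` mirrors the rule that the service is up only when the procedure returns true.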

Monitor facts

| Class Name | |
| Remote Enabled | false |

Configuration and Usage

| Parameter | Description | Required | Default value |
|---|---|---|---|
| | JDBC driver class to use | required | |
| | JDBC URL to connect to. | required | |
| | Database user | required | |
| | Database password | required | |
| | Timeout in ms for the database connection | optional | |
| | How many retries should be performed before failing the test | optional | |
| | Name of the database stored procedure to call | required | |
| | Name of the database schema in which the stored procedure is | optional | |

| The string OPENNMS_JDBC_HOSTNAME in the url parameter is replaced with the IP address or resolved hostname of the interface the monitored service is assigned to. |

Provide the database driver

The JDBCStoredProcedureMonitor is based on JDBC and requires a JDBC driver to communicate with any database.

Because OpenNMS itself uses a PostgreSQL database, the PostgreSQL JDBC driver is available out of the box.

For all other database systems, a compatible JDBC driver has to be provided to OpenNMS as a JAR file.

To provide a JDBC driver, place the driver JAR in the opennms/lib folder of your OpenNMS installation.

To use the JDBCStoredProcedureMonitor from a remote poller, the driver-jar has to be provided to the Remote Poller too.

This may be tricky or impossible when using the Java Webstart Remote Poller, because of code signing requirements.

Examples

The following example checks a stored procedure added to the PostgreSQL database used by OpenNMS. The stored procedure returns true as long as fewer than 250000 events are in the events table of OpenNMS.

Stored procedure

CREATE OR REPLACE FUNCTION eventlimit_sp() RETURNS boolean AS

$BODY$DECLARE

num_events integer;

BEGIN

SELECT COUNT(*) into num_events from events;

RETURN num_events < 250000;

END;$BODY$

LANGUAGE plpgsql VOLATILE NOT LEAKPROOF

COST 100;

<service name="OpenNMS-DB-SP-Event-Limit" interval="300000" user-defined="true" status="on">

<parameter key="driver" value="org.postgresql.Driver"/>

<parameter key="url" value="jdbc:postgresql://OPENNMS_JDBC_HOSTNAME:5432/opennms"/>

<parameter key="user" value="opennms"/>

<parameter key="password" value="opennms"/>

<parameter key="stored-procedure" value="eventlimit_sp"/>

<parameter key="schema" value="public"/>

</service>

<monitor service="OpenNMS-DB-SP-Event-Limit" class-name="org.opennms.netmgt.poller.monitors.JDBCStoredProcedureMonitor"/>

1.1.21. JDBCQueryMonitor

The JDBCQueryMonitor runs an SQL query against a database and is able to verify the result of the query. A read-only connection is used to run the SQL query, so the data in the database is not altered. It is based on the JDBC technology to connect and communicate with the database.

Monitor facts

| Class Name | |
| Remote Enabled | false |

Configuration and Usage

| Parameter | Description | Required | Default value |

|---|---|---|---|

|

JDBC driver class to use |

required |

|

|

JDBC URL to connect to. |

required |

|

|

Database user |

required |

|

|

Database password |

required |

|

|